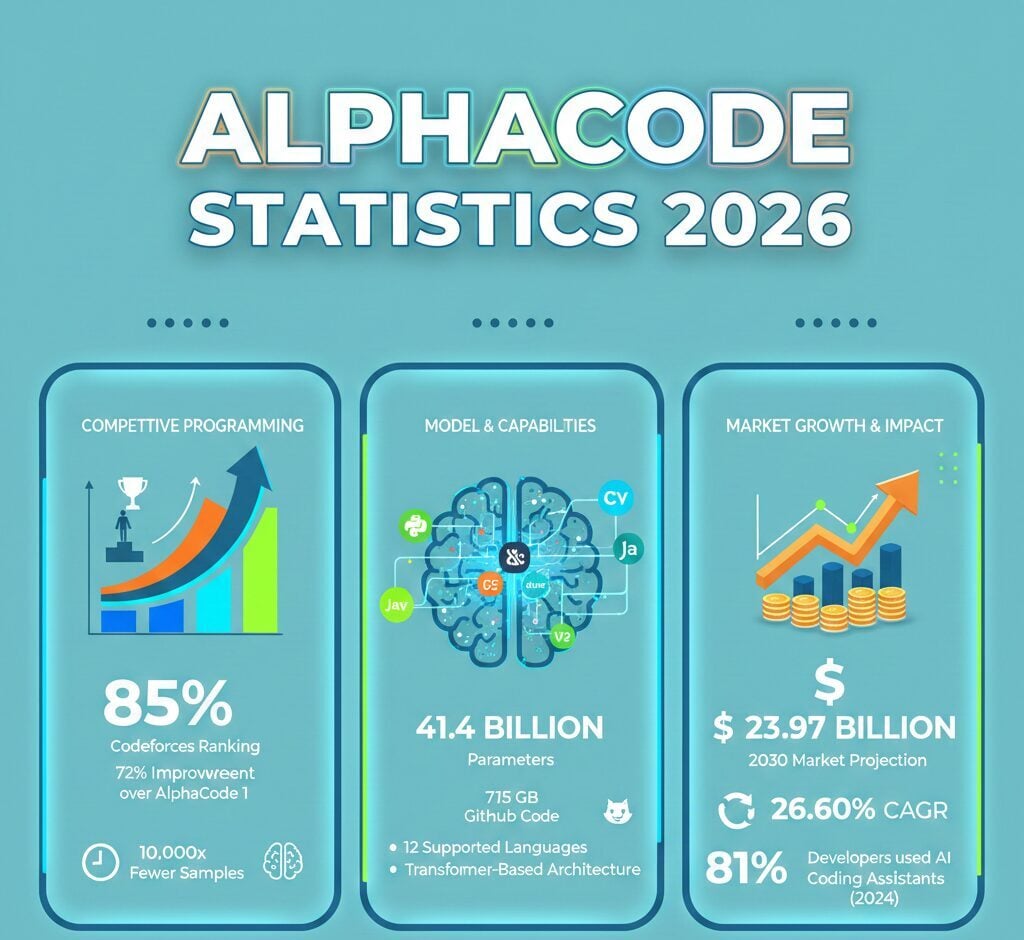

Google DeepMind’s AlphaCode 2 reached the 85th percentile on Codeforces competitive programming challenges, outperforming approximately 85% of human participants. The system achieved a 72% improvement over the original AlphaCode while requiring 10,000 times fewer computational samples.

AlphaCode represents the first AI system to achieve genuine competitive-level performance in algorithmic programming. Its largest model has 41.4 billion parameters, was pre-trained on 715 GB of GitHub code, and uses a transformer-based encoder-decoder design.

This article examines AlphaCode’s performance metrics, technical specifications, market impact, and competitive positioning based on verified data from Google DeepMind and industry research.

AlphaCode Statistics: Key Findings

- AlphaCode 2 achieved 85th percentile ranking on Codeforces, placing it between Expert and Candidate Master categories as of 2026

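- AlphaCode 2 solved 43% of the Codeforces problems in its evaluation within 10 attempts; the original AlphaCode’s 34.2% solve rate was measured on the CodeContests validation set under the same 10-submission limit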

- AlphaCode generates up to 1 million candidate solutions per problem before filtering to 10 final submissions through clustering algorithms

- The AI code tools market reached $7.37 billion in 2024 and is projected to reach $23.97 billion by 2030 at a 26.60% CAGR

- AlphaCode 2 demonstrates 10,000x greater sample efficiency, solving problems with roughly 100 samples versus 1 million for the original version

AlphaCode Model Architecture and Technical Scale

AlphaCode operates on a transformer-based encoder-decoder architecture distinct from decoder-only models like OpenAI’s Codex. The system supports 12 programming languages including Python, C++, Java, and JavaScript.

The production system combines 9 billion and 41.4 billion parameter models. Multi-query attention implementation increased sampling speed by more than 10 times compared to standard attention mechanisms.
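To illustrate why multi-query attention speeds up sampling, here is a minimal pure-Python sketch; it is not DeepMind's implementation. Each head keeps its own query, but all heads share a single set of keys and values, so the key/value cache read at every decoding step shrinks by a factor equal to the number of heads. The function names and the toy dimensions are assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_query_attention(head_queries, shared_keys, shared_values):
    """Attend from one decoding position with several query heads.

    head_queries:  one query vector per head (H vectors of size d)
    shared_keys:   T key vectors of size d, shared by every head
    shared_values: T value vectors of size d, shared by every head
    """
    d = len(shared_keys[0])
    outputs = []
    for query in head_queries:
        # Scaled dot-product score of this head's query against each shared key.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in shared_keys
        ]
        weights = softmax(scores)
        # Weighted sum of the shared value vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, shared_values))
            for i in range(d)
        ])
    return outputs


def kv_cache_floats(seq_len, head_dim, kv_heads):
    """Floats stored for keys plus values across the cached sequence."""
    return 2 * seq_len * head_dim * kv_heads


# Hypothetical sizes, not AlphaCode's actual configuration.
multi_head = kv_cache_floats(seq_len=768, head_dim=128, kv_heads=16)
multi_query = kv_cache_floats(seq_len=768, head_dim=128, kv_heads=1)
cache_ratio = multi_head // multi_query  # equals the number of heads
```

In this sketch the saving comes entirely from `kv_heads=1`: the per-head queries are unchanged, so the number of attention outputs stays the same while the cached keys and values are stored once.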

| Specification | Value |
|---|---|
| Maximum Model Parameters | 41.4 billion |
| Pre-training Dataset Size | 715 GB (GitHub code) |
| Fine-tuning Dataset Size | 2.6 GB (CodeContests) |
| Supported Languages | 12 languages |
| Encoder Input Tokens | 1,536 tokens |
| Decoder Input Tokens | 768 tokens |
| Vocabulary Size | 8,000 (SentencePiece) |

The architecture includes five model variants ranging from 300 million to 41.4 billion parameters. This multi-scale approach enables the system to balance computational efficiency with problem-solving capability.

AlphaCode Competitive Programming Performance Metrics

AlphaCode was evaluated through simulated participation in Codeforces programming competitions: the original version in 10 contests with more than 5,000 participants, and AlphaCode 2 in 12 contests with more than 8,000 participants.

AlphaCode 2 reached the 85th percentile, while the original version ranked within the top 54.3% of participants, meaning it outperformed roughly 46% of them. In its best-performing contests, AlphaCode 2 outperformed more than 99.5% of human participants.

| Performance Metric | AlphaCode Original | AlphaCode 2 |
|---|---|---|
| Codeforces Ranking | Top 54.3% | 85th percentile |
| Competitors Outperformed | ~46% | ~85% |
| Solve Rate (10 attempts) | 34.2% (CodeContests validation) | 43% (Codeforces evaluation) |
| Peak Contest Performance | N/A | 99.5th percentile |
| Foundation Model | Custom Transformer | Gemini Pro |

The 72% improvement in problem-solving capability positions AlphaCode 2 as a competitive programmer at Expert level. The system demonstrates particular strength in dynamic programming problems that challenged earlier versions.

AlphaCode Sample Efficiency and Computational Requirements

AlphaCode’s methodology generates massive numbers of candidate solutions before filtering to final submissions. The original system produced up to 1 million samples per problem, narrowing these to 10 submissions through clustering algorithms.
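The sample, filter, and cluster pipeline described above can be sketched on a toy problem. This is an illustrative outline under stated assumptions, not DeepMind's code: the candidate "programs" are plain Python callables, `select_submissions` is a hypothetical helper, and the probe inputs stand in for extra test inputs used only to compare behaviour.

```python
from collections import defaultdict


def select_submissions(candidates, example_tests, probe_inputs, limit=10):
    """Filter candidates on example tests, cluster by behaviour, pick one per cluster.

    candidates:    callables standing in for sampled programs
    example_tests: (input, expected_output) pairs from the problem statement
    probe_inputs:  extra inputs used only to compare behaviour (no expected outputs)
    """
    def run(program, value):
        try:
            return program(value)
        except Exception:
            return "<error>"  # treat crashes as a distinct behaviour

    # Step 1: keep only candidates that pass every example test.
    survivors = [
        c for c in candidates
        if all(run(c, x) == expected for x, expected in example_tests)
    ]

    # Step 2: group survivors whose outputs agree on all probe inputs.
    clusters = defaultdict(list)
    for c in survivors:
        signature = tuple(repr(run(c, x)) for x in probe_inputs)
        clusters[signature].append(c)

    # Step 3: submit one representative from each of the largest clusters.
    ranked = sorted(clusters.values(), key=len, reverse=True)
    return [group[0] for group in ranked[:limit]]


# Toy problem: "double the input". Four hypothetical sampled programs.
candidates = [
    lambda n: n * 2,
    lambda n: n + n,   # same behaviour as the first
    lambda n: n * n,   # passes the example test n=2 by coincidence
    lambda n: n + 1,   # fails the example test
]
picked = select_submissions(
    candidates, example_tests=[(2, 4)], probe_inputs=[0, 3, 5]
)
```

Clustering matters because many surviving samples behave identically; submitting one program per behaviour cluster spends the 10-submission budget on genuinely different solutions.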

AlphaCode 2 achieved dramatic efficiency gains through its Gemini Pro foundation. The system matches original AlphaCode performance with approximately 100 samples, representing a 10,000x efficiency improvement.
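The efficiency figure follows directly from the two sample counts quoted above:

$$
\frac{10^{6}\ \text{samples (original AlphaCode)}}{10^{2}\ \text{samples (AlphaCode 2)}} = 10^{4}
$$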

| Computational Metric | Value |
|---|---|
| Maximum Samples Generated | Up to 1 million |
| Final Submissions Per Problem | 10 (clustered) |
| AlphaCode 2 Efficiency Gain | 10,000x more efficient |
| AlphaCode 2 Samples Required | ~100 samples |
| False Positive Rate (CodeContests) | 4% |

The CodeContests dataset addressed quality issues in earlier benchmarks. Previous datasets like APPS and HumanEval showed false positive rates of 30-60%, while CodeContests achieved just 4% through temporal splitting and additional test generation.
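As a rough illustration of what a false positive rate measures here, the sketch below counts solutions that pass a dataset's tests but are actually incorrect. The definition used (wrong solutions as a share of accepted ones) is one plausible reading, and the data is invented for the example.

```python
def false_positive_rate(results):
    """Share of accepted solutions that are actually wrong.

    results: list of (passed_dataset_tests, actually_correct) booleans,
             one pair per candidate solution.
    """
    accepted = [correct for passed, correct in results if passed]
    if not accepted:
        return 0.0
    wrong = sum(1 for correct in accepted if not correct)
    return wrong / len(accepted)


# Hypothetical judgements: 25 solutions pass the dataset's tests, and one of
# them would fail a more thorough hidden test suite. Ten more fail outright.
toy_results = [(True, True)] * 24 + [(True, False)] + [(False, False)] * 10
rate = false_positive_rate(toy_results)  # 1 wrong out of 25 accepted
```

Adding generated tests lowers this rate because each extra test is another chance to reject a solution that only looked correct.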

AlphaCode vs Competing AI Code Generation Models

AlphaCode’s competitive programming focus differentiates it from general-purpose code assistants. The original system outperformed roughly 46% of Codeforces participants, about 9 times GPT-4’s result of approximately the 5th percentile.

GPT-4 achieved a Codeforces rating of 392 points (Newbie level), while AlphaCode reaches Pupil to Expert classification. OpenAI Codex showed approximately 0% success rate on novel competitive programming problems.

| AI Model | Parameters | Codeforces Performance | Primary Strength |
|---|---|---|---|
| AlphaCode 2 | Gemini Pro-based | 85th percentile | Competitive programming |
| AlphaCode Original | 41.4B | Top 54.3% (outperformed ~46%) | Competitive programming |
| GPT-4 | Undisclosed | ~5th percentile | General code assistance |
| GPT-3.5 | 175B | ~3rd percentile | General code assistance |
| OpenAI Codex | 12B | ~0% (novel problems) | Code completion |

This performance gap highlights the distinction between specialized competitive programming systems and general-purpose language models. AlphaCode’s architecture optimizes for algorithmic reasoning rather than code completion.
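The size of the gap can be made concrete with a short calculation on the approximate figures quoted in this article. Dividing percentiles is a crude comparison, and the helper name `ratio_to_baseline` is an assumption for illustration.

```python
# Share of Codeforces participants outperformed, as quoted in this article.
outperformed = {
    "AlphaCode 2": 85.0,
    "AlphaCode Original": 46.0,
    "GPT-4": 5.0,
}


def ratio_to_baseline(scores, baseline):
    """Return each system's score divided by the baseline system's score."""
    base = scores[baseline]
    return {name: value / base for name, value in scores.items()}


ratios = ratio_to_baseline(outperformed, "GPT-4")
```

On these inputs the original AlphaCode comes out at about 9 times GPT-4's figure and AlphaCode 2 at 17 times.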

AI Code Generation Market Statistics and Growth

The AI code tools market reached $7.37 billion in 2024 and is projected to reach $23.97 billion by 2030, a 26.60% compound annual growth rate. Enterprise spending on AI coding tools reached $4.0 billion in 2025, accounting for 55% of total departmental AI investment.

Developer adoption reached critical mass with 81% of developers using AI coding assistants in 2024. Daily usage increased to 50% of developers by 2025, rising to 65% in top-quartile organizations.

| Market Metric | 2024 Value | 2030 Projection | CAGR |
|---|---|---|---|
| Global AI Code Tools Market | $7.37 billion | $23.97 billion | 26.60% |
| AI Code Assistant Market | $5.5 billion | $47.3 billion | 24% |
| AI Code Generation Market | $6.7 billion | $25.7 billion | 25.1% |
| Enterprise AI Coding Spend (2025) | $4.0 billion | N/A | N/A |
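A compound annual growth rate ties a start value, an end value, and a number of years together, so the table's rows can be sanity-checked with a few lines of arithmetic. The sketch below is a reader's check using only the figures from the table above; the function names are assumptions.

```python
import math


def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_years(start_value, end_value, rate):
    """Number of compounding years needed to grow start to end at `rate`."""
    return math.log(end_value / start_value) / math.log(1 + rate)


# (start in $B, projection in $B, stated CAGR) taken from the table above.
rows = {
    "Global AI Code Tools Market": (7.37, 23.97, 0.2660),
    "AI Code Assistant Market": (5.5, 47.3, 0.24),
    "AI Code Generation Market": (6.7, 25.7, 0.251),
}
horizons = {name: implied_years(*row) for name, row in rows.items()}
```

With these inputs the implied horizons come out at roughly five, ten, and six years respectively, which suggests the three rows come from forecasts with different base or end years and should not be compared as if they shared one 2024 to 2030 window.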

The coding category emerged as the largest segment in the application layer across the enterprise AI landscape. North America holds 38% market share, while cloud-based deployment accounts for 76.23% of implementations.

GitHub Copilot leads with approximately $800 million in annual recurring revenue. Anthropic’s Claude Code scaled from zero to $400 million ARR in five months during 2025, demonstrating rapid competitive dynamics.

AlphaCode Technical Innovations and Capabilities

AlphaCode introduced architectural and methodological innovations advancing AI code generation beyond autocomplete functionality. The system implements multi-query attention for 10x faster sampling speed compared to standard attention mechanisms.

Masked language modeling improved solve rates empirically. The GOLD offline reinforcement learning fine-tuning focuses training on highest-quality solutions rather than all generated code.

| Technical Innovation | Impact Measurement |
|---|---|
| Multi-Query Attention | 10x faster sampling speed |
| Masked Language Modeling | Improved solve rates |
| GOLD Fine-tuning | Focuses on highest-quality solutions |
| Dynamic Programming | First AI to properly implement DP strategies |
| Semantic Clustering | Reduces submission redundancy |
| Human Collaboration Mode | 90th+ percentile with guidance |

When programmers collaborate with AlphaCode 2 by specifying additional filtering properties, system performance increases beyond the 90th percentile. This human-AI collaborative approach demonstrates potential for AI as problem-solving partners rather than autonomous replacements.

AlphaCode 2’s success with dynamic programming problems, which challenged the original model, highlights improved algorithmic reasoning capabilities. The system properly implements and applies dynamic programming strategies for the first time in AI competitive programming.

AlphaCode Dataset and Training Infrastructure

DeepMind developed the CodeContests dataset specifically for training and evaluating competitive programming AI systems. The dataset contains approximately 15,000 programming problems with 30 million human code samples.

CodeContests sources problems from the Codeforces, AtCoder, CodeChef, Aizu, and HackerEarth platforms. The training set includes 13,328 problems, with 117 validation problems and 165 test problems.

The dataset achieved a 4% false positive rate compared to 30-60% in previous benchmarks. This improvement resulted from temporal splitting where training data predates evaluation problems, additional generated tests, and filtering for problems with sufficient test coverage.
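Temporal splitting can be sketched in a few lines: every training problem must have been published before every evaluation problem, so a model cannot have seen evaluation problems or their solutions during training. The record fields, dates, and cutoff below are invented for illustration and do not come from the CodeContests dataset.

```python
from datetime import date


def temporal_split(problems, cutoff):
    """Split problems so every training problem predates every held-out problem.

    problems: list of dicts with hypothetical keys "name" and "published"
    cutoff:   date; problems published on or after it are held out
    """
    train = [p for p in problems if p["published"] < cutoff]
    held_out = [p for p in problems if p["published"] >= cutoff]
    return train, held_out


# Invented problem records for illustration only.
problems = [
    {"name": "A", "published": date(2019, 5, 1)},
    {"name": "B", "published": date(2020, 11, 12)},
    {"name": "C", "published": date(2021, 8, 3)},
    {"name": "D", "published": date(2021, 10, 20)},
]
train, held_out = temporal_split(problems, cutoff=date(2021, 7, 1))
```

A random split would not give the same guarantee, because solutions to a held-out problem could already exist in code published before the split was made.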

The CodeContests dataset spans approximately 3 GB in file size. This curated approach addresses significant quality issues present in earlier code generation benchmarks like APPS and HumanEval.

FAQ

What percentile did AlphaCode 2 achieve on Codeforces?

AlphaCode 2 achieved the 85th percentile on Codeforces competitive programming challenges, outperforming approximately 85% of human participants and placing it between the Expert and Candidate Master categories. In its best-performing contests, it reached the 99.5th percentile.

How many parameters does AlphaCode have?

AlphaCode employs a maximum of 41.4 billion parameters in its largest model variant. The system includes five model variants ranging from 300 million to 41.4 billion parameters, with the production system combining 9B and 41B parameter models.

How efficient is AlphaCode 2 compared to the original?

AlphaCode 2 demonstrates 10,000x greater sample efficiency than the original version. It achieves equivalent performance with approximately 100 samples compared to 1 million samples required by the original AlphaCode, while showing a 72% improvement in problem-solving capability.

What is the AI code tools market size?

The global AI code tools market reached $7.37 billion in 2024 and is projected to reach $23.97 billion by 2030 at a 26.60% CAGR. Enterprise AI coding spend reached $4.0 billion in 2025, representing 55% of total departmental AI investment.

How does AlphaCode compare to GPT-4 on competitive programming?

The original AlphaCode outperformed roughly 9 times as large a share of Codeforces participants as GPT-4 (about 46% versus about 5%). GPT-4 reached approximately the 5th percentile with a 392 rating (Newbie level), while AlphaCode 2 achieved the 85th percentile, reflecting specialized competitive programming optimization over general-purpose models.