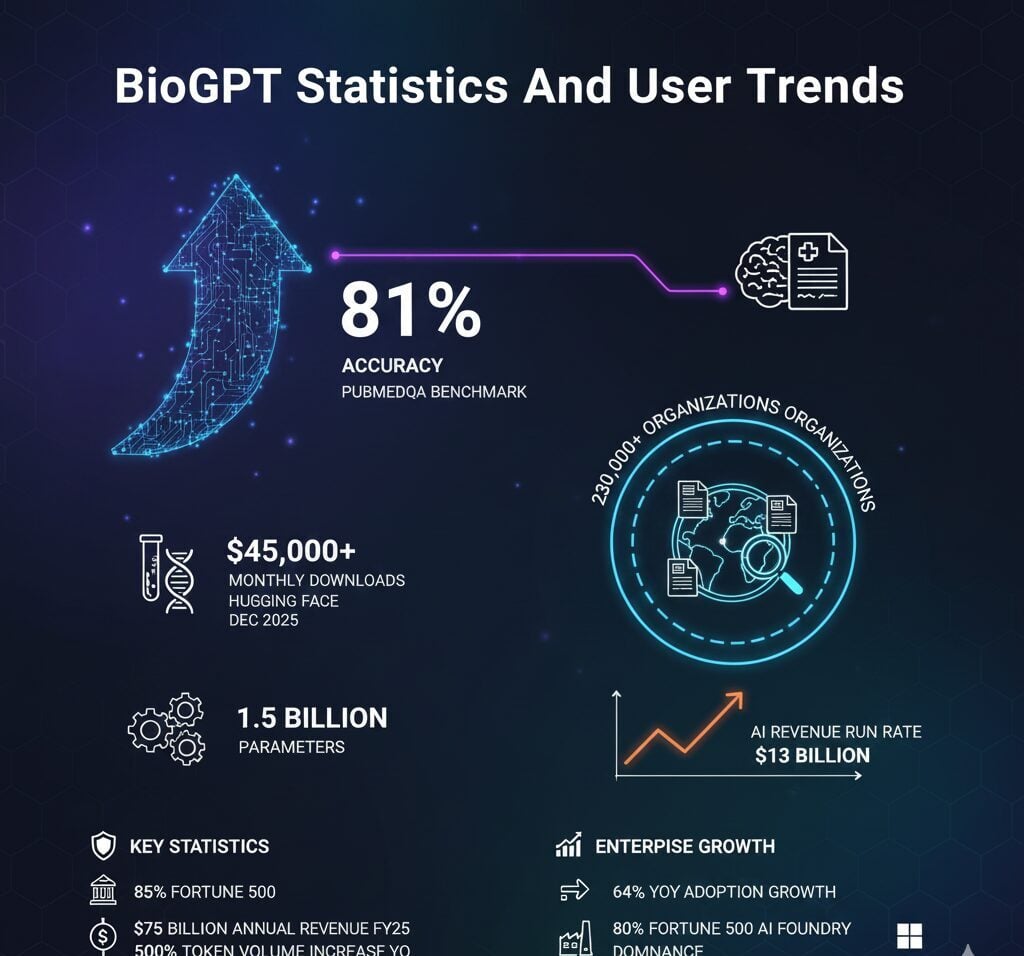

Microsoft’s BioGPT-Large achieved 81% accuracy on the PubMedQA benchmark, surpassing general-purpose models such as Flan-PaLM (540 billion parameters) despite having only 1.5 billion parameters. The specialized biomedical language model recorded more than 45,000 monthly downloads on Hugging Face as of December 2025.

Released under the MIT open-source license, BioGPT reflects Microsoft Research’s focused approach to domain-specific AI. The model was pre-trained exclusively on 15 million PubMed abstracts published from the 1960s through 2021 and set new state-of-the-art results in biomedical text generation and relation extraction.

BioGPT Key Statistics

- BioGPT receives 45,315 monthly downloads on Hugging Face as of December 2025, demonstrating sustained adoption in research and development communities

- BioGPT-Large contains 1.5 billion parameters across 48 transformer layers, while the base version has 347 million parameters across 24 layers

- Microsoft trained BioGPT on 15 million PubMed abstracts representing decades of biomedical research literature from the 1960s through 2021

- BioGPT-Large reached 81% accuracy on PubMedQA question answering, exceeding Flan-PaLM at 79% and Meta’s Galactica at 77.6%

- The GitHub repository accumulated 4,500+ stars and 475 forks, with 63 fine-tuned variants available through Hugging Face

BioGPT Model Architecture and Parameters

Microsoft Research built BioGPT on the GPT-2 architecture, training it from scratch on biomedical text with an in-domain vocabulary. The base model follows the GPT-2 medium configuration of 24 transformer layers, 1,024 hidden units, and 16 attention heads; BioGPT-Large follows the larger GPT-2 XL configuration of 48 layers, 1,600 hidden units, and 25 attention heads.

The base model has 347 million parameters, while BioGPT-Large has 1.5 billion. The base model’s vocabulary of 42,384 tokens was learned with byte pair encoding (BPE) on the preprocessed PubMed corpus rather than reused from GPT-2.

| Specification | BioGPT Base | BioGPT-Large |
|---|---|---|
| Parameters | 347 Million | 1.5 Billion |
| Transformer Layers | 24 | 48 |
| Hidden Units | 1,024 | 1,600 |
| Attention Heads | 16 | 25 |
| Context Window | 1,024 Tokens | 2,048 Tokens |

Both variants are released under the MIT open-source license. They share the same decoder-only Transformer design but differ in depth, width, and context length: BioGPT-Large doubles the layer count, widens the hidden size from 1,024 to 1,600 units, and extends the context window from 1,024 to 2,048 tokens. The extra depth and width account for its roughly fourfold larger parameter count.
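As a rough consistency check on these figures, the parameter count of a GPT-2-style decoder can be estimated from its depth, width, vocabulary size, and number of positions. The sketch below is a back-of-the-envelope estimate, not code from the BioGPT release. It assumes a standard GPT-2-style block (Q, K, V, and output projections plus a two-layer feed-forward network with a 4x expansion, all with biases), learned position embeddings, and an output layer tied to the token embeddings. The 48-layer, 1,600-unit configuration, and the reuse of the base vocabulary size for it, are assumptions made for illustration.

```python
# Rough parameter-count estimate for a GPT-2-style decoder-only Transformer.
# Assumptions (not taken from the BioGPT release): learned absolute position
# embeddings, biases on every linear layer, a 4x feed-forward expansion, and
# an output projection tied to the token embedding (so it is counted once).


def estimate_params(layers: int, hidden: int, vocab: int, positions: int) -> int:
    d = hidden
    attention = 4 * (d * d + d)                            # Q, K, V and output projections
    feed_forward = (d * 4 * d + 4 * d) + (4 * d * d + d)   # up- and down-projection
    layer_norms = 2 * (2 * d)                              # two LayerNorms (scale + shift) per block
    per_layer = attention + feed_forward + layer_norms
    embeddings = vocab * d + positions * d                 # token + position embeddings
    final_norm = 2 * d                                     # LayerNorm after the last block
    return layers * per_layer + embeddings + final_norm


base = estimate_params(layers=24, hidden=1024, vocab=42_384, positions=1024)
# Hypothetical GPT-2 XL-shaped configuration, reusing the base vocabulary size for simplicity.
large = estimate_params(layers=48, hidden=1600, vocab=42_384, positions=2048)

print(f"24 x 1,024 stack: {base / 1e6:.0f}M parameters")
print(f"48 x 1,600 stack: {large / 1e9:.2f}B parameters")
```

Under these assumptions the 24-layer, 1,024-unit stack comes out at about 347M parameters, in line with the base model’s published size, and the 48-layer, 1,600-unit stack at about 1.55B. A 24-layer, 1,024-unit stack alone cannot reach 1.5 billion parameters.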

BioGPT Training Dataset Statistics

Microsoft trained BioGPT exclusively on biomedical literature rather than general web text. The pre-training corpus consisted of 15 million PubMed abstracts, each averaging 200 tokens in length.

Pre-training ran for 200,000 steps on eight NVIDIA V100 GPUs and took approximately ten days. Fine-tuning for downstream biomedical tasks used a single NVIDIA V100 GPU.

| Training Metric | Value |
|---|---|
| PubMed Abstracts | 15 Million |
| Average Tokens Per Abstract | 200 |
| Publication Date Range | 1960s to 2021 |
| Training Steps | 200,000 |
| Training Hardware | 8 NVIDIA V100 GPUs |
| Training Duration | ~10 Days |

The dataset provided comprehensive coverage of biomedical terminology, research methodologies, and domain knowledge accumulated over six decades. This focused training approach enabled BioGPT to develop specialized capabilities in medical text understanding and generation.
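The reported training figures support a quick back-of-the-envelope estimate of corpus size and compute budget. The sketch below only multiplies the numbers listed above. It assumes the ten-day figure is wall-clock time with all eight GPUs busy throughout. It does not model batch size or the number of passes over the corpus, neither of which is reported here.

```python
# Back-of-the-envelope arithmetic using only the training figures reported above.
# Assumption: "~10 days" is wall-clock time with all 8 GPUs in use the whole time.

ABSTRACTS = 15_000_000
AVG_TOKENS_PER_ABSTRACT = 200
TRAINING_STEPS = 200_000
NUM_GPUS = 8
TRAINING_DAYS = 10

corpus_tokens = ABSTRACTS * AVG_TOKENS_PER_ABSTRACT   # tokens in one pass over the corpus
wall_clock_hours = TRAINING_DAYS * 24
gpu_hours = NUM_GPUS * wall_clock_hours               # total V100 GPU-hours
steps_per_hour = TRAINING_STEPS / wall_clock_hours    # average optimizer steps per hour

print(f"Corpus size:    {corpus_tokens / 1e9:.1f}B tokens")
print(f"Compute budget: {gpu_hours:,} V100 GPU-hours")
print(f"Throughput:     {steps_per_hour:.0f} optimizer steps per hour")
```

This works out to roughly 3 billion tokens of PubMed text, a budget of 1,920 V100 GPU-hours, and about 833 optimizer steps per hour.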

BioGPT Performance Benchmarks

BioGPT set state-of-the-art results on multiple biomedical NLP benchmarks at the time of its release. Its strongest results came in relation extraction and question answering, tasks that depend heavily on domain knowledge.

BioGPT-Large achieved 81% accuracy on PubMedQA, outperforming far larger models. The base version set new records on three end-to-end relation extraction benchmarks: BC5CDR, KD-DTI, and DDI.

| Benchmark Task | Metric | BioGPT (Base) | BioGPT-Large |
|---|---|---|---|
| PubMedQA | Accuracy | 78.2% | 81.0% |
| BC5CDR | F1 Score | 44.98% | Not reported |
| KD-DTI | F1 Score | 38.42% | Not reported |
| DDI | F1 Score | 40.76% | Not reported |
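End-to-end relation extraction is commonly scored at the level of whole triples: a prediction earns credit only if the head entity, relation type, and tail entity all match a gold triple. The sketch below shows that pattern with micro-averaged precision, recall, and F1 over sets of triples. It is a simplified illustration with hypothetical example triples, not the official evaluation script for BC5CDR, KD-DTI, or DDI. Those scripts may normalize entities or handle duplicates differently.

```python
# Simplified triple-level scoring for end-to-end relation extraction.
# Illustrative only: real benchmark scripts may normalize entity strings,
# handle duplicates, or average per document differently.

from typing import Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def triple_f1(predicted: Set[Triple], gold: Set[Triple]) -> Tuple[float, float, float]:
    """Return (precision, recall, F1); a triple counts only on an exact match."""
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical triples in the style of chemical-induced-disease extraction.
gold = {
    ("cisplatin", "induces", "nephrotoxicity"),
    ("haloperidol", "induces", "dyskinesia"),
    ("lithium", "induces", "tremor"),
}
predicted = {
    ("cisplatin", "induces", "nephrotoxicity"),  # exact match: true positive
    ("haloperidol", "induces", "akathisia"),     # not in the gold set: false positive
}

p, r, f1 = triple_f1(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

One of two predictions matches and one of three gold triples is recovered, giving precision 0.50, recall 0.33, and F1 0.40. Missing a single entity in a triple costs the whole triple, which is one reason end-to-end F1 scores sit far below accuracy-style numbers.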

The results suggest that domain-specific pre-training can deliver competitive performance with far fewer parameters than general-purpose models. On PubMedQA, BioGPT-Large surpassed Flan-PaLM (540 billion parameters, 79%) and Meta’s Galactica (120 billion parameters, 77.6%).
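To put the PubMedQA comparison in proportion, the short calculation below expresses each model’s parameter count relative to BioGPT-Large and its accuracy gap in percentage points. It uses only the figures quoted in this section.

```python
# Compare PubMedQA accuracy against model size, using the figures quoted above.

models = {
    # name: (parameters, PubMedQA accuracy in %)
    "BioGPT-Large": (1.5e9, 81.0),
    "BioGPT": (347e6, 78.2),
    "Flan-PaLM": (540e9, 79.0),
    "Galactica": (120e9, 77.6),
}

ref_params, ref_acc = models["BioGPT-Large"]
for name, (params, acc) in models.items():
    if name == "BioGPT-Large":
        continue
    size_ratio = params / ref_params  # how many times larger than BioGPT-Large
    gap = ref_acc - acc               # percentage points by which BioGPT-Large leads
    print(f"{name:<10} {size_ratio:8.2f}x the parameters, BioGPT-Large ahead by {gap:.1f} pts")
```

Flan-PaLM has 360 times as many parameters as BioGPT-Large and Galactica 80 times as many, yet they trail it by 2.0 and 3.4 points respectively.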

BioGPT Adoption Metrics

The GitHub repository has accumulated more than 4,500 stars since its 2022 release, indicating strong interest from research and developer communities. It has been forked 475 times and has 66 open issues.

Nine contributors have made 50 commits in total. As of December 2025, the repository has 74 watchers and nine open pull requests.

BioGPT Hugging Face Integration

Hugging Face integration made BioGPT accessible through the standard Transformers library interfaces. The model recorded 45,315 monthly downloads on the platform as of December 2025.

The community has published 63 fine-tuned variants targeting specialized biomedical applications, and 85 Hugging Face Spaces use BioGPT for interactive demonstrations.

| Platform Metric | Value |
|---|---|
| Monthly Downloads | 45,315 |
| Total Likes | 291 |
| Fine-tuned Variants | 63 |
| Hugging Face Spaces | 85 |
| Community Discussions | 28 |

These adoption metrics place BioGPT among the most actively used domain-specific language models in the biomedical AI ecosystem. BioGPT was added to the Transformers library in December 2022.
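As a minimal sketch of that integration, the snippet below loads a BioGPT checkpoint with the `BioGptTokenizer` and `BioGptForCausalLM` classes from Hugging Face Transformers and wraps them in a text-generation pipeline. It assumes `transformers`, `torch`, and `sacremoses` (which `BioGptTokenizer` relies on) are installed. `build_generator` is a hypothetical helper name; `microsoft/biogpt` is the Hub ID of the base checkpoint.

```python
# Minimal sketch: load BioGPT from the Hugging Face Hub as a text generator.
# Assumes `pip install transformers torch sacremoses`; `build_generator` is a
# hypothetical helper name, not part of the Transformers API.


def build_generator(model_id: str = "microsoft/biogpt"):
    """Return a text-generation pipeline for the given BioGPT checkpoint."""
    # Imported lazily so this helper can be defined without transformers installed.
    from transformers import BioGptForCausalLM, BioGptTokenizer, pipeline

    tokenizer = BioGptTokenizer.from_pretrained(model_id)  # BPE vocabulary learned on PubMed
    model = BioGptForCausalLM.from_pretrained(model_id)    # decoder-only language model
    return pipeline("text-generation", model=model, tokenizer=tokenizer)
```

For example, `build_generator()("COVID-19 is", max_length=20, do_sample=True)` returns a list of dictionaries with a `generated_text` field. Passing `"microsoft/BioGPT-Large"` as `model_id` loads the larger checkpoint instead. The first call downloads the weights from the Hub, so expect a delay and several gigabytes of disk use for the large model.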

BioGPT vs Other Biomedical Models

BioGPT is one of several specialized biomedical language models, each built on a different architecture and suited to different task categories.

Encoder-based models such as BioBERT and PubMedBERT excel at discriminative tasks including named entity recognition and text classification. BioGPT’s decoder-only architecture predicts each token from the tokens that precede it, which gives it generative capabilities that encoder-only models lack.
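Much of that difference comes down to the attention mask. An encoder such as BERT lets every token attend to every other token in the input. A decoder such as GPT-2 restricts each position to itself and earlier positions, so the model can be trained to predict the next token and then generate text one token at a time. The sketch below builds both mask patterns for a short sequence. It illustrates the general technique and is not code taken from any of these models.

```python
# Attention-mask patterns for encoder-only vs decoder-only Transformers.
# mask[i][j] is True when position i may attend to position j.

from typing import List

Mask = List[List[bool]]


def bidirectional_mask(n: int) -> Mask:
    """Encoder style (BERT): every token sees the whole sequence."""
    return [[True] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder style (GPT-2): token i sees only tokens 0..i, never later ones."""
    return [[j <= i for j in range(n)] for i in range(n)]


def show(mask: Mask) -> None:
    # Print "x" where attention is allowed and "." where it is blocked.
    for row in mask:
        print(" ".join("x" if allowed else "." for allowed in row))


tokens = ["aspirin", "inhibits", "platelet", "aggregation"]
print("Encoder (bidirectional):")
show(bidirectional_mask(len(tokens)))
print("Decoder (causal):")
show(causal_mask(len(tokens)))
```

Because no row of the causal mask looks ahead, the same network can run autoregressively: predict a token, append it to the input, and repeat.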

| Model | Architecture | Parameters | Primary Strength |
|---|---|---|---|
| BioGPT | GPT-2 (Decoder) | 347M | Text Generation |
| BioGPT-Large | GPT-2 (Decoder) | 1.5B | Question Answering |
| BioBERT | BERT (Encoder) | 110M | Named Entity Recognition |
| PubMedBERT | BERT (Encoder) | 110M | Text Classification |
| BioMedLM | GPT-2 (Decoder) | 2.7B | Medical QA |

BioMedLM offers a larger, 2.7-billion-parameter alternative. Even so, BioGPT-Large achieves competitive PubMedQA performance with fewer parameters, further evidence for the value of focused domain training.

BioGPT in the Healthcare AI Market

The global AI healthcare market was valued at approximately $26.6-29.0 billion in 2024, depending on the estimate. Analysts project growth to $505-674 billion by 2033-2034, implying compound annual growth rates (CAGR) between 37% and 47%.
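Growth rates like these follow from the standard formula CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the endpoint figures quoted in this section. Which start value, end value, and horizon belong together is an assumption here, because the quoted ranges combine several market reports.

```python
# Compound annual growth rate: the constant yearly rate that turns `start` into `end`.


def cagr(start: float, end: float, years: float) -> float:
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Assumed pairings of the quoted figures (USD billions); the underlying reports may differ.
scenarios = [
    ("2024 -> 2033, low estimates", 26.57, 505.0, 9),
    ("2024 -> 2034, high estimates", 29.01, 674.0, 10),
]
for label, start, end, years in scenarios:
    print(f"{label}: {cagr(start, end, years):.1%} per year")
```

These two pairings give about 38.7% and 37.0% per year, near the bottom of the quoted range. Rates near the top of the range must therefore come from a different combination of base value, end value, or forecast window.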

The natural language processing segment is projected to grow at a 36.5% CAGR, driven by applications in clinical documentation and medical literature analysis. North America leads the market with a 45-54% share, and the U.S. market alone reached $8.41-11.57 billion in 2024.

| Market Metric | Value |
|---|---|
| Global AI Healthcare Market (2024) | $26.57 – $29.01 Billion |
| Projected Market Size (2033-2034) | $505 – $674 Billion |
| Market CAGR (2025-2033) | 37% – 47% |
| NLP Segment Growth Rate | 36.5% |
| Deep Learning Market Share (2024) | 40% |
| North America Market Share | 45% – 54% |

Deep learning technologies captured 40% of the AI healthcare market in 2024. BioGPT operates within this expanding ecosystem, supporting applications ranging from clinical decision support to automated literature review systems.

FAQ

How many parameters does BioGPT have?

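BioGPT base contains 347 million parameters, while BioGPT-Large scales to 1.5 billion. The base model uses 24 transformer layers with 1,024 hidden units and 16 attention heads (the GPT-2 medium configuration), while BioGPT-Large uses 48 layers with 1,600 hidden units and 25 attention heads (the GPT-2 XL configuration).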

What dataset was BioGPT trained on?

Microsoft trained BioGPT on 15 million PubMed abstracts spanning publications from the 1960s through 2021. The training corpus provided comprehensive coverage of biomedical terminology and research literature accumulated over six decades.

How accurate is BioGPT on biomedical question answering?

BioGPT achieves 78.2% accuracy on the PubMedQA benchmark, while BioGPT-Large reaches 81%. BioGPT-Large surpasses far larger general-purpose models, including Flan-PaLM (540B parameters) at 79% and Meta’s Galactica (120B parameters) at 77.6%. The base model also edges out Galactica.

Is BioGPT open source?

Yes. Microsoft released BioGPT under the MIT license, which permits use, modification, distribution, and commercial deployment, provided the copyright and license notice is retained.

How many downloads does BioGPT get monthly?

BioGPT receives approximately 45,315 monthly downloads through Hugging Face as of December 2025, indicating sustained adoption across research and development communities worldwide.

Sources:

Briefings in Bioinformatics – BioGPT Research Paper

GitHub – Microsoft BioGPT Repository