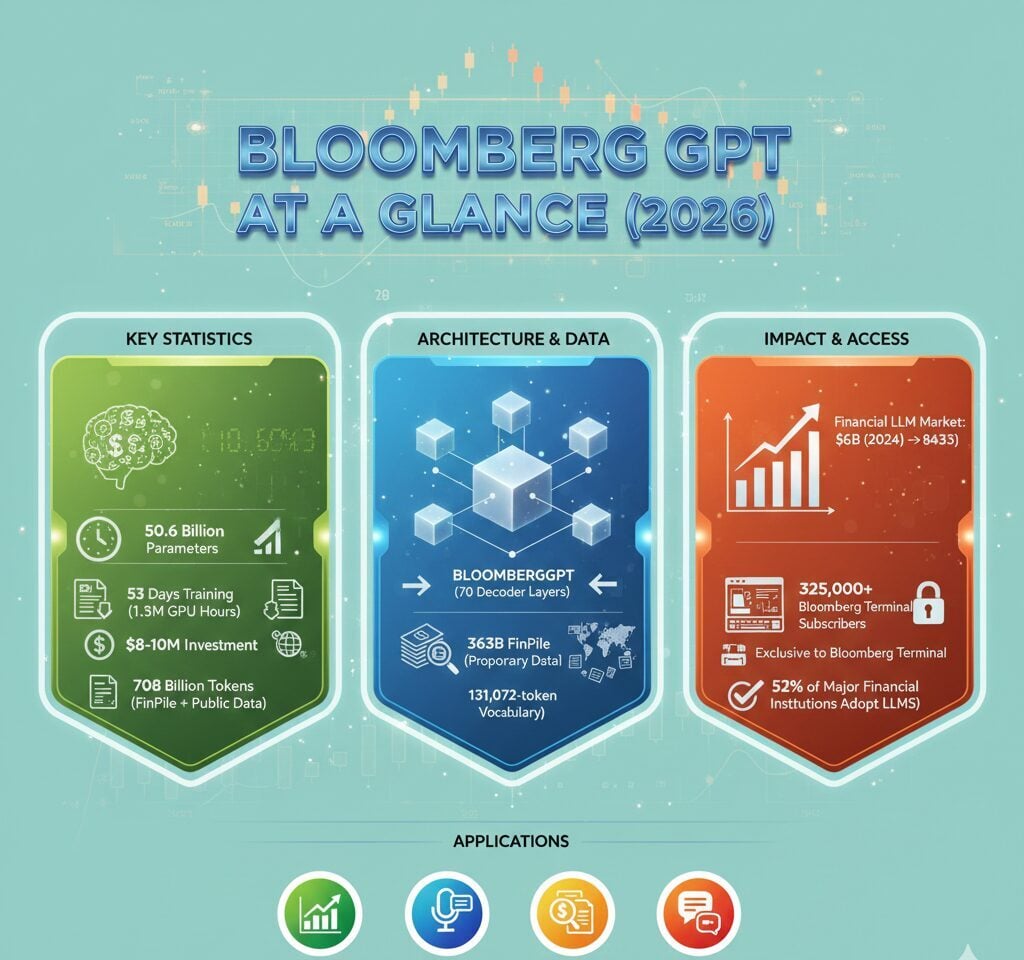

BloombergGPT has 50.6 billion parameters and consumed a reported 1.3 million GPU hours during its 53-day training period, making it one of the most ambitious domain-specific language model projects in financial services. Bloomberg invested an estimated $8 million to $10 million to develop the model, training it on a 708-billion-token corpus that combined 363 billion tokens of proprietary financial data with 345 billion tokens from public datasets. The model operates within the Bloomberg Terminal, which serves over 325,000 subscribers who pay $30,000 or more annually for access to the platform.

BloombergGPT Key Statistics

- BloombergGPT contains 50.6 billion parameters across 70 transformer decoder layers, making it one of the largest domain-specific language models as of 2026.

- The model trained on 708 billion tokens over 53 days using 512 NVIDIA A100 40GB GPUs, at an estimated compute cost of $2.6 million to $3 million.

- Bloomberg created FinPile, a proprietary dataset with 363 billion tokens of financial data spanning documents from 2007 to 2022, approximately 200 times the size of English Wikipedia.

- The global LLM market reached $6.02 billion in 2024 and is projected to hit $84.25 billion by 2033, a compound annual growth rate of 34.07 percent. Financial applications account for 21 percent of global LLM applications.

- Research from Queen’s University showed GPT-4 outperformed BloombergGPT on several financial benchmarks despite having no specialized financial training, achieving 68.79 percent on FinQA zero-shot tests.

BloombergGPT Model Architecture and Scale

BloombergGPT follows a decoder-only causal language model structure based on BLOOM, featuring 70 transformer decoder layers with ALiBi positional encoding. The architecture utilizes 40 attention heads and a hidden dimension of 7,680.

The model handles a context length of 2,048 tokens with a vocabulary of 131,072 tokens, larger than the vocabularies of most standard tokenizers. This expanded vocabulary represents financial terminology more efficiently than a general-purpose tokenizer would.

| BloombergGPT Specification | Value |
|---|---|
| Total Parameters | 50.6 Billion |
| Transformer Layers | 70 |
| Attention Heads | 40 |
| Hidden Dimension | 7,680 |
| Context Length | 2,048 tokens |
| Vocabulary Size | 131,072 tokens |
| Head Dimension | 192 |

The roughly 50-billion-parameter configuration was chosen to get the best performance out of Bloomberg’s compute budget given the number of training tokens available. Designers applied Chinchilla scaling laws to balance model size against training data volume.
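The figures in the specification table can be cross-checked with a rough parameter count. The sketch below assumes the common rule of thumb of 12 × d² weights per decoder layer (attention projections plus a feed-forward block four times the hidden width) and a single token-embedding matrix; it ignores biases and layer norms, and the function name is hypothetical rather than anything from Bloomberg’s code.

```python
def estimate_decoder_params(layers: int, hidden: int, vocab: int) -> int:
    """Approximate parameter count for a decoder-only transformer.

    Assumption: 12 * hidden^2 weights per layer (4 * hidden^2 for the
    attention projections, 8 * hidden^2 for a 4x-wide feed-forward block)
    plus one vocab * hidden embedding matrix. Biases and layer norms
    are left out, so this is an estimate, not an exact count.
    """
    per_layer = 12 * hidden * hidden
    embedding = vocab * hidden
    return layers * per_layer + embedding


# Values taken from the specification table above.
LAYERS, HIDDEN, HEADS, VOCAB = 70, 7680, 40, 131072

total = estimate_decoder_params(LAYERS, HIDDEN, VOCAB)
head_dim = HIDDEN // HEADS  # hidden dimension split evenly across heads

print(f"estimated parameters: {total / 1e9:.2f}B")
print(f"head dimension: {head_dim}")
```

Under these assumptions the estimate comes to about 50.55 billion parameters, which rounds to the quoted 50.6 billion, and the head dimension works out to 192.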

BloombergGPT Training Data Composition

Bloomberg constructed the FinPile dataset specifically for training BloombergGPT, combining 363 billion tokens of proprietary financial data with 345 billion tokens from public sources. This mixed approach enabled strong performance on both financial and general NLP tasks.

The FinPile dataset includes earnings reports, market analyses, company filings, press releases, and financial news from Bloomberg’s archives dating back to 2007. Public datasets comprised C4, The Pile, and Wikipedia content.

| Training Data Category | Tokens (Billions) | Percentage |
|---|---|---|
| FinPile (Financial Data) | 363 | 51.27% |
| Public Datasets | 345 | 48.73% |
| Total Training Corpus | 708 | 100% |
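The percentages in the table follow directly from the token counts. A minimal sketch of that arithmetic, using only the two category totals listed above (the dictionary keys and the helper name are illustrative):

```python
def corpus_shares(tokens_by_source: dict[str, float]) -> dict[str, float]:
    """Return each source's share of the corpus as a percentage (2 decimals)."""
    total = sum(tokens_by_source.values())
    return {name: round(100 * count / total, 2)
            for name, count in tokens_by_source.items()}


# Token counts in billions, as listed in the table above.
tokens_b = {"FinPile": 363, "Public": 345}

shares = corpus_shares(tokens_b)
for name, pct in shares.items():
    print(f"{name}: {pct}% of {sum(tokens_b.values())}B tokens")
```

This reproduces the 51.27 percent and 48.73 percent split shown in the table.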

FinPile Dataset Structure

FinPile draws on financial content that Bloomberg has curated over four decades. Web sources make up the largest part, accounting for 42.01 percent of the full training corpus, while news from non-Bloomberg outlets contributes 5.31 percent.

Bloomberg included company filings from SEC documents, earnings call transcripts, press releases, and financial social media content. The data curation process removed markup, special formatting, and templates to ensure clean training data quality.

BloombergGPT Training Infrastructure and Investment

Bloomberg used Amazon Web Services infrastructure with 512 NVIDIA A100 40GB GPUs, organized as 64 instances of 8 GPUs each. The training process ran for 53 days, completing 139,200 training steps.

The project consumed 1.3 million GPU hours at an estimated compute cost between $2.6 million and $3 million. Total project investment reached $8 million to $10 million when including personnel and research costs.

| Training Metric | Value |
|---|---|
| Total GPU Hours | 1.3 Million |
| GPU Type | NVIDIA A100 40GB |
| Total GPUs Used | 512 |
| GPU Instances | 64 (8 GPUs each) |
| Training Duration | 53 days |
| Training Steps | 139,200 |
| Estimated Compute Cost | $2.6 – $3 Million |
| Total Project Investment | $8 – $10 Million |
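The training figures above can be sanity-checked with simple arithmetic. The sketch below computes the GPU hours implied by running all 512 GPUs continuously for 53 days, and the hourly rate implied by the quoted cost range. The helper names are hypothetical, and the continuous-utilization assumption is a simplification.

```python
HOURS_PER_DAY = 24


def wall_clock_gpu_hours(gpus: int, days: int) -> int:
    """GPU hours if every GPU ran continuously for the whole period."""
    return gpus * days * HOURS_PER_DAY


def cost_per_gpu_hour(total_cost: float, gpu_hours: float) -> float:
    """Average cost of one GPU hour implied by a total cost."""
    return total_cost / gpu_hours


gpus = 64 * 8                      # 64 groups of 8 GPUs, as listed above
run_hours = wall_clock_gpu_hours(gpus, 53)
reported_hours = 1_300_000         # the quoted GPU-hour figure

low_rate = cost_per_gpu_hour(2_600_000, reported_hours)
high_rate = cost_per_gpu_hour(3_000_000, reported_hours)

print(f"53-day run on {gpus} GPUs: {run_hours:,} GPU hours")
print(f"implied rate: ${low_rate:.2f} to ${high_rate:.2f} per GPU hour")
```

The 53-day run alone accounts for roughly 651,000 GPU hours, about half of the quoted 1.3 million. One possible reading is that the larger figure describes an overall compute budget rather than this single run, though the numbers here do not confirm that. Against the 1.3 million figure, the quoted compute cost implies roughly $2.00 to $2.31 per GPU hour.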

A nine-person development team worked on the project: four engineers focused on modeling and data, one on compute optimization, and four on evaluation and research. The team trained the model on the Amazon SageMaker platform.

BloombergGPT Performance Benchmarks

BloombergGPT demonstrated strong performance against similarly sized open models on finance-specific NLP tasks, outperforming GPT-NeoX, OPT, and BLOOM in the 50-billion parameter category. The model showed competitive results on named entity recognition tasks.

Queen’s University research revealed GPT-4 outperformed BloombergGPT on several financial tasks despite lacking specialized financial training. GPT-4 achieved 68.79 percent accuracy on FinQA zero-shot tests compared to lower scores from BloombergGPT.

| Benchmark/Task | BloombergGPT Performance | Comparison |
|---|---|---|
| FinQA (Financial QA) | Lower than GPT-4 | GPT-4: 68.79% zero-shot |
| Finance-Specific NLP | Outperforms competitors | Best among 50B models |
| Named Entity Recognition | Competitive | Comparable to GPT-4 |
| Sentiment Analysis | Strong financial context | GPT-4 scored higher |
| Headline Classification | Domain-specific accuracy | GPT-4 outperformed |

The benchmark results suggested that scale can outweigh domain specialization. They also influenced enterprise approaches to proprietary LLM development, with many organizations reconsidering the value of domain-specific training versus building on frontier general-purpose models.

Financial LLM Market Growth and Adoption

The global large language model market reached $6.02 billion in 2024 and is projected to grow to $84.25 billion by 2033. This represents a compound annual growth rate of 34.07 percent over the forecast period.
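The growth rate can be reproduced from the two market-size figures with the standard compound annual growth rate formula. The sketch below assumes nine compounding years, from the 2024 base to the 2033 projection.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10 percent)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of dollars, taken from the paragraph above.
rate = cagr(6.02, 84.25, years=2033 - 2024)

print(f"implied CAGR: {rate:.2%}")
```

With these inputs the result is about 34.07 percent, matching the quoted rate.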

Financial services represent a significant vertical for LLM adoption, with 52 percent of major financial institutions integrating LLM solutions as of 2025. Financial applications account for 21 percent of global LLM applications.

| LLM Market Metric | Value |
|---|---|
| Global LLM Market Size (2024) | $6.02 Billion |
| Projected Market Size (2033) | $84.25 Billion |
| Market CAGR (2025-2033) | 34.07% |
| Financial Services Adoption | 52% of major institutions |
| Financial LLM Market Share | 21% of global applications |
| Financial Analysts Using LLMs | 38% for earnings reports |

Bank of America reported that 60 percent of clients use LLM technology for investment planning. Forecasts had estimated that 50 percent of digital work in financial institutions would be automated using LLMs by 2025.

Financial services applications expanded to encompass real-time risk modeling, customer analytics, regulatory automation, and fraud detection. Survey data shows 58 percent of financial companies utilize advanced AI technologies in their operations.

BloombergGPT Integration and Access

BloombergGPT remains proprietary and closed-source, integrated exclusively within Bloomberg’s internal infrastructure. The model powers several Bloomberg Terminal features including Bloomberg Search, report generation, narrative analysis, and financial modeling workflows.

Bloomberg Terminal serves over 325,000 subscribers globally at a price point of $30,000 or more per user annually. Terminal revenue represents over 85 percent of Bloomberg’s total revenue.
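For a sense of scale, the subscriber count and list price give a back-of-envelope floor on annual Terminal revenue. The sketch assumes every subscriber pays the $30,000 list price, which is a simplification since actual contract terms are not public. The function name is illustrative.

```python
def revenue_floor(subscribers: int, annual_price: int) -> int:
    """Lower-bound annual revenue if every subscriber pays the given price."""
    return subscribers * annual_price


# "Over 325,000" subscribers at "$30,000 or more", so this is a floor.
floor = revenue_floor(325_000, 30_000)

print(f"implied Terminal revenue floor: ${floor / 1e9:.2f}B per year")
```

Under that assumption the floor is $9.75 billion per year.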

| BloombergGPT Access Metric | Status |
|---|---|
| Open Source Availability | Not available |
| Public API Access | None |
| Bloomberg Terminal Price | $30,000+ per user/year |
| Terminal Revenue Share | 85%+ of Bloomberg revenue |
| Model Weights Release | Not released |
| Hugging Face/GitHub Presence | None |

The model functions as an internal system layer rather than a standalone product, embedded within Bloomberg’s existing enterprise infrastructure. Bloomberg’s last official updates on BloombergGPT came in 2023; since then, the system has operated behind the scenes to enhance Terminal features.

Bloomberg has not released model weights or provided public API access, keeping the technology exclusive to Bloomberg Terminal subscribers. This contrasts with the growing trend toward open-source AI models in the broader technology sector.

FAQ

How many parameters does BloombergGPT have?

BloombergGPT contains 50.6 billion parameters across 70 transformer decoder layers, making it one of the largest domain-specific language models developed for financial services as of 2026.

How much did it cost to train BloombergGPT?

Bloomberg invested between $8 million and $10 million in total project costs, with compute expenses alone ranging from $2.6 million to $3 million for 1.3 million GPU hours over 53 days.

Is BloombergGPT available to the public?

No, BloombergGPT remains proprietary and closed-source with no public API access. The model is exclusively integrated within Bloomberg Terminal, which costs $30,000 or more per user annually.

How does BloombergGPT compare to GPT-4?

Research from Queen’s University showed GPT-4 outperformed BloombergGPT on several financial benchmarks despite having no specialized financial training, achieving 68.79 percent accuracy on FinQA zero-shot tests compared to BloombergGPT’s lower scores.

What is the FinPile dataset used to train BloombergGPT?

FinPile is Bloomberg’s proprietary dataset containing 363 billion tokens of financial data from 2007 to 2022, including earnings reports, SEC filings, market analyses, and financial news. It is approximately 200 times the size of English Wikipedia.