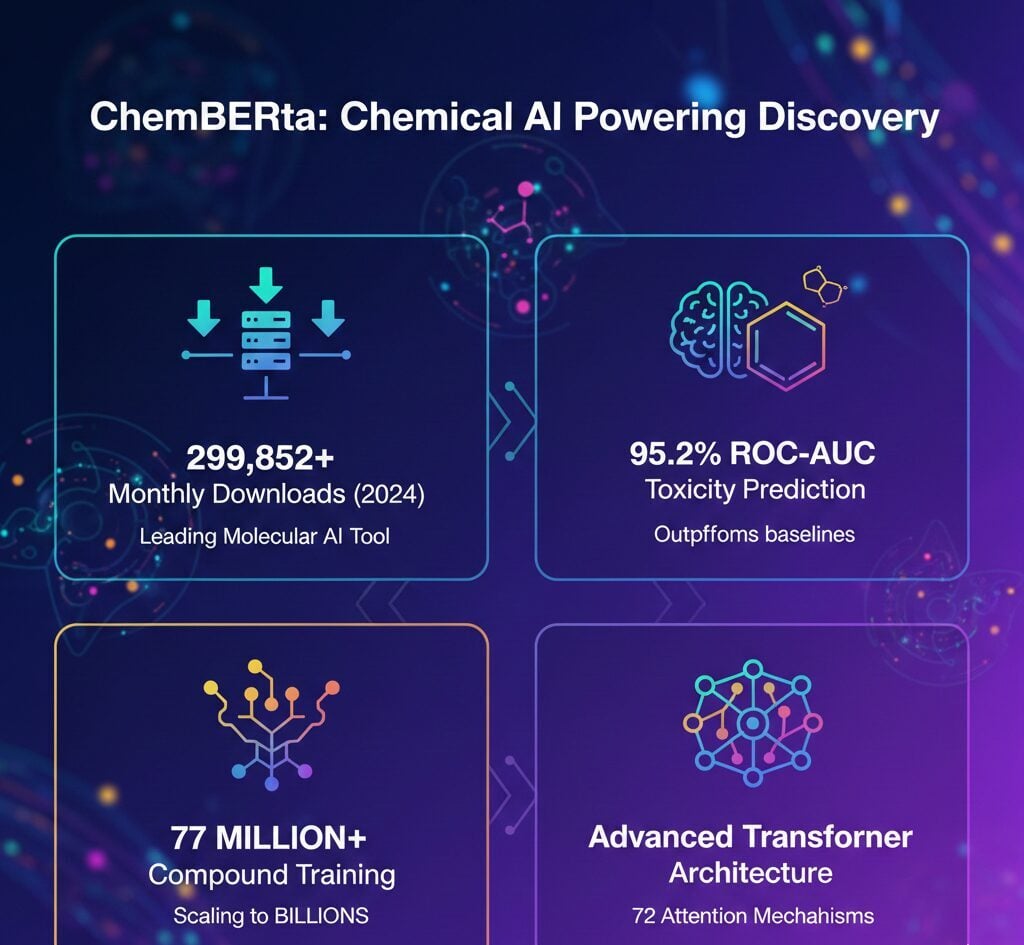

ChemBERTa models recorded 299,852 combined monthly downloads across Hugging Face repositories as of 2024, a sign of broad adoption of the transformer-based architecture for molecular property prediction. A fusion model built on ChemBERTa-2 reached 95.2% ROC-AUC on the ClinTox toxicity classification benchmark, and ChemBERTa-2 pretraining scaled to a 77 million compound dataset. ChemBERTa-3 added billion-molecule scale training on AWS and HPC infrastructure, extending chemical foundation models for pharmaceutical research.

ChemBERTa Key Statistics

- ChemBERTa models recorded 299,852 combined monthly downloads across three primary variants on Hugging Face as of 2024.

- The model achieved 95.2% ROC-AUC on ClinTox toxicity classification benchmarks, outperforming the Chemprop baseline at 90.5%.

- Scaling pretraining from 100,000 to 10 million PubChem compounds raised average ROC-AUC by +0.110 across downstream tasks; ChemBERTa-2 later extended pretraining to 77 million compounds.

- The architecture employs 72 distinct attention mechanisms across 12 attention heads and 6 transformer layers for pattern recognition in SMILES molecular representations.

- ChemBERTa combined with ProtBert achieved 0.973 AUC on drug-target interaction prediction. Separately, ChemBERTa showed 2-4% ROC-AUC improvements over traditional fingerprint-based methods for toxicity prediction.

ChemBERTa Download and Adoption Statistics

ChemBERTa models hosted on Hugging Face demonstrated substantial adoption within the research community. The platform recorded 299,852 combined monthly downloads across three primary model variants.

The ChemBERTa-zinc-base-v1 variant led adoption metrics with 159,314 monthly downloads. DeepChem/ChemBERTa-77M-MTR recorded 91,063 downloads, while DeepChem/ChemBERTa-77M-MLM reached 49,475 downloads.
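The combined figure can be checked directly from the three per-model counts. The sketch below is only a sanity check on the arithmetic; the dictionary and the helper name `download_shares` are illustrative and not part of any Hugging Face API.

```python
# Monthly download counts quoted in this section (Hugging Face, as of 2024).
monthly_downloads = {
    "seyonec/ChemBERTa-zinc-base-v1": 159_314,
    "DeepChem/ChemBERTa-77M-MTR": 91_063,
    "DeepChem/ChemBERTa-77M-MLM": 49_475,
}

total = sum(monthly_downloads.values())


def download_shares(counts):
    """Return each model's share of the combined total, as a percentage."""
    combined = sum(counts.values())
    return {name: 100 * n / combined for name, n in counts.items()}


shares = download_shares(monthly_downloads)
for name, share in shares.items():
    print(f"{name}: {share:.1f}%")
print(f"combined: {total:,}")  # combined: 299,852
```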

The ChemBERTa-zinc-base-v1 variant powered 11 active Hugging Face Spaces, including applications for PROTAC splitting, molecular property prediction, and drug-target analysis. Community developers created 41 finetuned models based on ChemBERTa architectures.

| Model Name | Monthly Downloads | Finetuned Models |
|---|---|---|
| seyonec/ChemBERTa-zinc-base-v1 | 159,314 | 20 |
| DeepChem/ChemBERTa-77M-MTR | 91,063 | 14 |
| DeepChem/ChemBERTa-77M-MLM | 49,475 | 7 |

ChemBERTa Training Dataset Evolution

ChemBERTa models underwent pretraining on progressively larger molecular datasets to enhance representation learning capabilities. Scaling experiments demonstrated consistent performance improvements with increased data volume.

The original ChemBERTa model was trained on PubChem 10M, a set of 10 million SMILES strings. ChemBERTa-2 introduced three configurations with pretraining datasets ranging from 5 million to 77 million compounds.

The 77 million compound dataset from PubChem represented one of the largest datasets used for molecular pretraining. Scaling from 100,000 to 10 million compounds resulted in average ROC-AUC improvements of +0.110 across downstream tasks.

ChemBERTa-3 introduced training capabilities on ZINC20, a dataset containing billions of molecules. The framework leveraged both AWS-based Ray deployments and on-premise high-performance computing clusters to support processing requirements.

ChemBERTa Architecture and Model Specifications

ChemBERTa employs a bidirectional training context derived from the RoBERTa transformer implementation. The model architecture incorporates specific configurations optimized for processing SMILES strings rather than natural language text.

| Architecture Component | Specification |
|---|---|
| Attention Heads | 12 |
| Transformer Layers | 6 |
| Total Attention Mechanisms | 72 |
| Parameter Range (ChemBERTa-2) | 5M – 46M |
| Base Architecture | RoBERTa |
| Default Tokenizer | Byte-Pair Encoding (BPE) |

The 72 distinct attention mechanisms enabled ChemBERTa to identify chemically meaningful patterns within molecular structures. Attention visualization studies demonstrated that specific heads focus on functional groups such as hydroxyl and carbonyl groups, while others specialize in recognizing aromatic ring structures.
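The figure of 72 is simply the product of layers and heads per layer. As a minimal sketch, each (layer, head) pair can be enumerated to give the index that attention visualization tools typically use to address a single head; the constant names here are illustrative.

```python
from itertools import product

NUM_LAYERS = 6   # transformer layers, from the specification table
NUM_HEADS = 12   # attention heads per layer, from the specification table

# Each (layer, head) pair identifies one separately parameterized attention head.
attention_units = list(product(range(NUM_LAYERS), range(NUM_HEADS)))

print(len(attention_units))  # 72
```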

ChemBERTa Benchmark Performance Results

MoleculeNet benchmarks evaluated ChemBERTa-2 configurations across classification and regression tasks using scaffold splitting methodology. The model demonstrated competitive performance on toxicity-related benchmarks.
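Scaffold splitting keeps molecules that share a core structure on the same side of the train/test boundary, which makes the evaluation harder than a random split. The sketch below shows the general idea under simplifying assumptions: scaffold strings are taken as precomputed input (for example Bemis-Murcko scaffolds from a cheminformatics toolkit), only a train/test split is produced, and the function name `scaffold_split` is hypothetical rather than the benchmark's own implementation.

```python
from collections import defaultdict


def scaffold_split(scaffolds, train_frac=0.8):
    """Split molecule indices so that no scaffold appears in both sets.

    `scaffolds` holds one scaffold string per molecule, assumed to be
    precomputed elsewhere.
    """
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)

    # Largest scaffold groups first; ties broken by first index for determinism.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))

    train_cutoff = train_frac * len(scaffolds)
    train, test = [], []
    for group in ordered:
        # A whole group goes to train only if it still fits under the cutoff.
        if len(train) + len(group) <= train_cutoff:
            train.extend(group)
        else:
            test.extend(group)
    return train, test


# Toy input: hypothetical scaffold labels, not real benchmark data.
toy_scaffolds = ["c1ccccc1"] * 5 + ["C1CCCCC1"] * 3 + ["c1ccncc1", "C1CCOC1"]
train_idx, test_idx = scaffold_split(toy_scaffolds)
print(train_idx, test_idx)
```

Because whole groups are assigned at once, the realized train fraction can fall below `train_frac` when the groups are large.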

ChemBERTa achieved 95.2% ROC-AUC on ClinTox toxicity classification with 1,491 compounds, outperforming the Chemprop baseline at 90.5%. The model ranked first among natural language processing models on Tox21 toxicity benchmarks with 7,831 compounds.
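For readers less familiar with the metric, ROC-AUC can be read as the probability that a randomly chosen positive compound receives a higher score than a randomly chosen negative one. The following sketch computes it that way on invented toy data; it is a plain pairwise formulation for illustration, not the evaluation code used in the benchmarks.

```python
def roc_auc(labels, scores):
    """ROC-AUC as the chance that a random positive outscores a random negative.

    Ties count as half a win (the Mann-Whitney U formulation).
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("ROC-AUC needs at least one positive and one negative")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy labels and scores, invented for illustration only.
toy_labels = [1, 0, 1, 0, 1]
toy_scores = [0.9, 0.4, 0.35, 0.2, 0.8]
auc = roc_auc(toy_labels, toy_scores)
print(f"{auc:.3f}")  # 0.833
```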

MolPROP fusion models combined ChemBERTa-2-MLM with graph neural networks to achieve the 95.2% ROC-AUC result on ClinTox. On the HIV inhibition benchmark, which contains 41,127 compounds, the MLM variant recorded 79.3% ROC-AUC.

| Task (Classification) | Dataset Size | Best ChemBERTa Result (ROC-AUC) |
|---|---|---|
| ClinTox (Toxicity) | 1,491 compounds | 95.2% ± 3.4% |
| BACE (Inhibition) | 1,513 compounds | Competitive with SOTA |
| BBBP (Penetration) | 2,039 compounds | Competitive with SOTA |
| Tox21 (Toxicity) | 7,831 compounds | Ranked 1st among NLP models |
| HIV (Inhibition) | 41,127 compounds | 79.3% (MLM variant) |

ChemBERTa Drug Discovery Applications

ChemBERTa embeddings have been integrated into pharmaceutical research pipelines for ADMET prediction, toxicity screening, and drug-target interaction analysis.

ChemBERTa combined with ProtBert achieved 0.973 AUC on drug-target interaction prediction tasks. The model demonstrated 2-4% ROC-AUC improvements over traditional fingerprint-based methods for toxicity prediction.

Ionic liquid toxicity prediction using convolutional neural networks with ChemBERTa embeddings recorded 0.865 R-squared and 0.390 RMSE. XGBoost implementations achieved 0.824 R-squared on the same tasks.
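The two regression metrics quoted above measure different things: R-squared is the fraction of variance in the target explained by the model, while RMSE is the typical error in the target's own units. A minimal sketch of both, using invented toy values rather than the ionic liquid data:

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(y_true))


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - residual variance / total variance."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy targets and predictions, invented for illustration only.
toy_true = [1.0, 2.0, 3.0, 4.0]
toy_pred = [1.5, 2.0, 2.5, 4.0]

print(f"R-squared: {r_squared(toy_true, toy_pred):.3f}")  # R-squared: 0.900
print(f"RMSE: {rmse(toy_true, toy_pred):.3f}")            # RMSE: 0.354
```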

ChemBERTa Pretraining Methods

ChemBERTa-2 introduced two distinct pretraining methodologies with measurable performance differences. Models pretrained with Multi-Task Regression consistently outperformed Masked Language Modeling variants on downstream finetuning tasks.

MLM pretraining predicts masked SMILES tokens and trains faster. MTR pretraining predicts 200 RDKit properties for each molecule; the 200-element label vectors make training slower, but MTR delivered superior performance on most downstream tasks.
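To make the MLM objective concrete, the sketch below hides a fraction of the tokens in a SMILES string and records the originals as prediction targets. Several parts are assumptions for illustration: the regex tokenizer is a simplified stand-in for the BPE tokenizer, the 15% masking rate is the usual RoBERTa default rather than a value stated in this article, and every selected token is replaced with `<mask>` (RoBERTa-style training also sometimes keeps or randomizes the token).

```python
import random
import re

# Simplified SMILES tokenizer: bracket atoms, two-letter halogens,
# then any remaining single character.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|.")


def tokenize(smiles):
    return SMILES_TOKEN.findall(smiles)


def mask_tokens(tokens, mask_rate=0.15, mask_token="<mask>", seed=0):
    """Replace a random subset of tokens with the mask token.

    Returns the masked sequence and a dict {position: original token}
    that serves as the prediction target.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = masked[pos]
        masked[pos] = mask_token
    return masked, targets


aspirin = "CC(=O)Oc1ccccc1C(=O)O"
tokens = tokenize(aspirin)
masked, targets = mask_tokens(tokens)
print("".join(masked))
print(targets)
```

The model is trained to recover the entries of `targets` from the surrounding unmasked tokens, which is how it picks up SMILES syntax without labeled data.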

| Pretraining Method | Objective | Training Speed | Downstream Performance |
|---|---|---|---|
| Masked Language Modeling (MLM) | Predict masked SMILES tokens | Faster | Good baseline |
| Multi-Task Regression (MTR) | Predict 200 RDKit properties | Slower | Superior on most tasks |

ChemBERTa Token Prediction Accuracy

ChemBERTa demonstrated strong capabilities in predicting masked tokens within SMILES molecular representations. The model showed learned understanding of chemical syntax without explicit supervision.

In a test on a benzene SMILES string, ChemBERTa correctly predicted the masked double-bonded carbon token with 96.94% confidence. Training on ZINC100K for 5 epochs reached a final loss of 0.398.
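A confidence value like the one above is typically the softmax probability the model assigns to its top candidate at the masked position. The sketch below shows that conversion from raw scores to probabilities; the logits are hypothetical numbers chosen for illustration and are not output from ChemBERTa.

```python
import math


def softmax(logits):
    """Convert a {token: logit} mapping into probabilities that sum to 1."""
    shift = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - shift) for tok, value in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical logits for one masked position; not real model output.
toy_logits = {"=": 5.0, "C": 1.5, "1": 0.5, "(": -1.0}
probs = softmax(toy_logits)
best_token = max(probs, key=probs.get)

print(best_token, f"{probs[best_token]:.1%}")
```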

FAQ

How many monthly downloads does ChemBERTa receive?

ChemBERTa models recorded 299,852 combined monthly downloads across Hugging Face repositories as of 2024. The ChemBERTa-zinc-base-v1 variant led with 159,314 downloads, followed by DeepChem/ChemBERTa-77M-MTR with 91,063 downloads and DeepChem/ChemBERTa-77M-MLM with 49,475 downloads.

What is ChemBERTa’s best benchmark performance?

ChemBERTa achieved 95.2% ROC-AUC on ClinTox toxicity classification benchmarks with 1,491 compounds, outperforming the Chemprop baseline at 90.5%. The model also ranked first among NLP models on Tox21 toxicity benchmarks and achieved 0.973 AUC on drug-target interaction prediction tasks when combined with ProtBert.

How large is ChemBERTa’s training dataset?

ChemBERTa-2 trained on up to 77 million compounds from PubChem, representing one of the largest datasets used for molecular pretraining. ChemBERTa-3 introduced training capabilities on ZINC20 containing billions of molecules. Scaling from 100,000 to 10 million compounds resulted in average ROC-AUC improvements of +0.110 across downstream tasks.

What architecture does ChemBERTa use?

ChemBERTa employs 12 attention heads and 6 transformer layers creating 72 total attention mechanisms. The model uses RoBERTa as its base architecture with Byte-Pair Encoding tokenization. ChemBERTa-2 parameter counts range from 5 million to 46 million depending on the model configuration.

Which pretraining method performs better for ChemBERTa?

Multi-Task Regression pretraining consistently outperformed Masked Language Modeling on downstream finetuning tasks. MTR predicts 200 RDKit properties but trains more slowly because of its 200-element labels. MLM predicts masked SMILES tokens, trains faster, and provides a good baseline. MLM pretraining loss also correlates strongly with MTR performance, so the faster MLM objective can be used to make hyperparameter search more efficient.