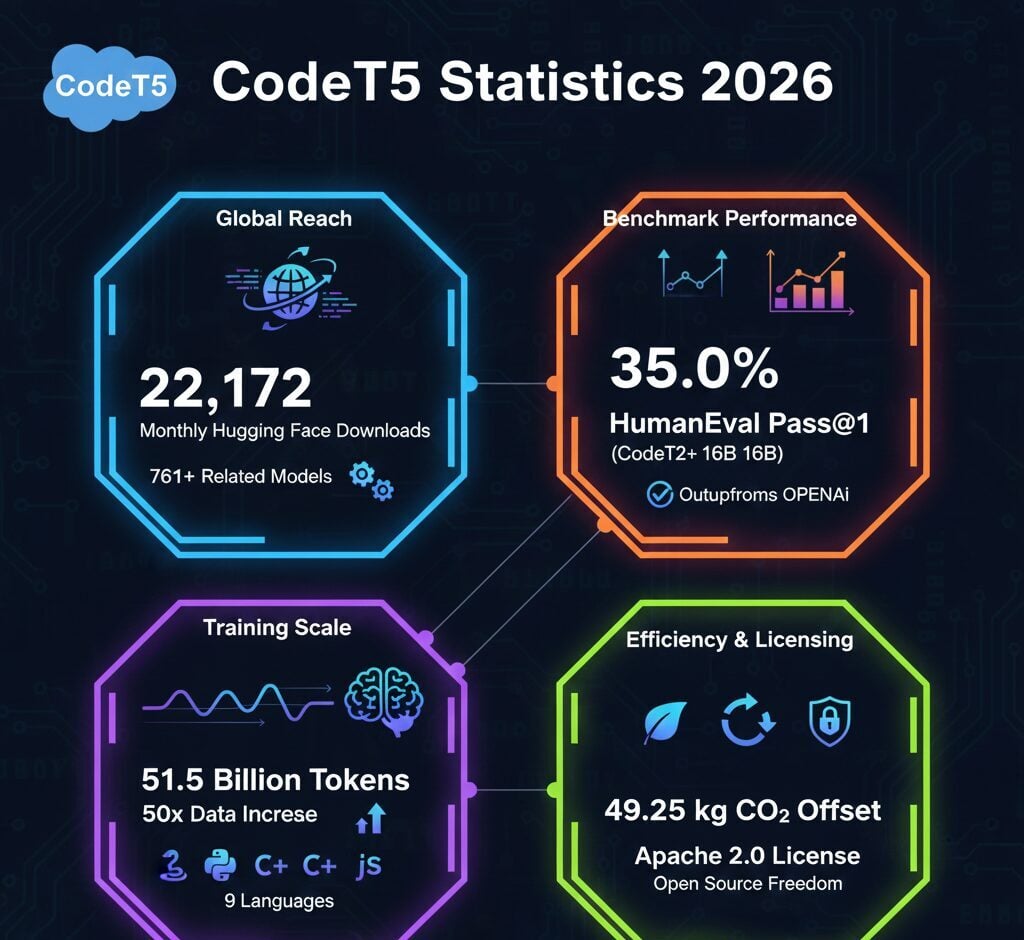

CodeT5-base recorded 22,172 monthly downloads on Hugging Face as of 2026, cementing its position as a foundational model in the open-source code intelligence space. Developed by Salesforce Research, the encoder-decoder transformer family spans eight variants from 60 million to 16 billion parameters. Its largest instruction-tuned model, InstructCodeT5+ 16B, built on a CodeT5+ pretraining corpus of 51.5 billion tokens, reached 35.0% pass@1 on the HumanEval benchmark, outperforming OpenAI’s code-cushman-001 (33.5%) while being released under the Apache 2.0 license.

CodeT5 Statistics Key Highlights

- CodeT5-base generates 22,172 monthly downloads on Hugging Face with 761+ related models deployed across the platform as of 2026.

- The instruction-tuned CodeT5+ 16B variant achieved 35.0% pass@1 and 54.5% pass@10 on HumanEval benchmarks in zero-shot settings.

- CodeT5+ training used 51.5 billion tokens, roughly a 50x scale-up over the CodeSearchNet-based corpus of 8.35 million instances used for the original CodeT5.

- The Python-specialized CodeT5+ 770M model reached 15.5% pass@1 on HumanEval, comparable to InCoder 6B, GPT-NeoX 20B, and PaLM 62B, models roughly 8-80x its size.

- CodeT5-base spawned 86 finetuned derivative models and 17 adapter models, demonstrating widespread adoption for specialized applications.

CodeT5 Model Architecture and Parameter Distribution

The CodeT5 family encompasses eight distinct variants optimized for different computational requirements. CodeT5-small operates with 60 million parameters, while the flagship CodeT5+ 16B model scales to 16 billion parameters.

Salesforce released CodeT5-small and CodeT5-base in 2021 and CodeT5-large in 2022, followed by five CodeT5+ models in 2023. The newer generation introduced a shallow-encoder, deep-decoder architecture for the 2B, 6B, and 16B variants.

| Model Variant | Parameters | Architecture Type | Release Year |
|---|---|---|---|
| CodeT5-small | 60M | Encoder-Decoder | 2021 |
| CodeT5-base | 220M | Encoder-Decoder | 2021 |
| CodeT5-large | 770M | Encoder-Decoder | 2022 |
| CodeT5+ 220M | 220M | Flexible Encoder-Decoder | 2023 |
| CodeT5+ 770M | 770M | Flexible Encoder-Decoder | 2023 |
| CodeT5+ 2B | 2B | Shallow Encoder, Deep Decoder | 2023 |
| CodeT5+ 6B | 6B | Shallow Encoder, Deep Decoder | 2023 |
| CodeT5+ 16B | 16B | Shallow Encoder, Deep Decoder | 2023 |

CodeT5+ introduced flexible operation modes enabling encoder-only, decoder-only, or full encoder-decoder configurations. This architecture allows practitioners to optimize model deployment without maintaining separate instances for different tasks.

CodeT5 Training Data Scale and Composition

CodeT5+ training drew 51.5 billion tokens from GitHub repositories, a substantial expansion over the original model. The original CodeT5 pretrained on 8.35 million instances, drawn mainly from the CodeSearchNet dataset.

The training corpus includes only permissively licensed code under the MIT, Apache-2.0, BSD-3-Clause, BSD-2-Clause, CC0-1.0, Unlicense, and ISC licenses. Restricting the data this way is meant to lower legal risk for commercial deployment, although several of these licenses still carry conditions such as attribution.
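A license filter of this kind can be sketched in a few lines of Python. This is a hypothetical illustration, assuming each record carries an SPDX-style `license` field; the record layout and the `is_permissive` and `filter_permissive` helpers are our own inventions, not part of the CodeT5+ data pipeline.

```python
# Hypothetical sketch of a permissive-license filter. The allowlist mirrors the
# licenses named above; the record schema ("path", "license") is an assumption.
PERMISSIVE_LICENSES = frozenset({
    "mit",
    "apache-2.0",
    "bsd-3-clause",
    "bsd-2-clause",
    "cc0-1.0",
    "unlicense",
    "isc",
})


def is_permissive(license_id: str) -> bool:
    """Return True if a license id is on the allowlist (case- and space-insensitive)."""
    return license_id.strip().lower() in PERMISSIVE_LICENSES


def filter_permissive(records: list[dict]) -> list[dict]:
    """Keep only records whose 'license' field is present and on the allowlist."""
    return [r for r in records if is_permissive(r.get("license") or "")]


if __name__ == "__main__":
    sample = [
        {"path": "a.py", "license": "MIT"},
        {"path": "b.java", "license": "gpl-3.0"},
        {"path": "c.go", "license": "Apache-2.0"},
        {"path": "d.rb", "license": None},
    ]
    print([r["path"] for r in filter_permissive(sample)])  # ['a.py', 'c.go']
```

Records with a missing license are dropped rather than guessed, which is the conservative choice for a filter whose purpose is reducing legal risk.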

CodeT5-large underwent 150 pretraining epochs on the CodeSearchNet corpus. The CodeT5+ family instead trained in stages, first on unimodal code data and then on bimodal text-code data, with a different number of epochs for each stage.

Programming Language Coverage

CodeT5 supports nine programming languages spanning the most widely used development ecosystems. The original model covered eight languages, with C++ added in the CodeT5+ release.

| Language | CodeT5 Support | CodeT5+ Support |
|---|---|---|
| Python | Yes | Yes |
| Java | Yes | Yes |
| JavaScript | Yes | Yes |
| Go | Yes | Yes |
| Ruby | Yes | Yes |
| PHP | Yes | Yes |
| C | Yes | Yes |
| C++ | No | Yes |
| C# | Yes | Yes |

The CodeSearchNet dataset provided coverage for Ruby, JavaScript, Go, Python, Java, and PHP. Salesforce collected additional C and C# datasets from BigQuery to expand language support in the original release.

CodeT5 HumanEval Benchmark Performance

InstructCodeT5+ 16B achieved 35.0% pass@1 accuracy on HumanEval zero-shot text-to-code generation tasks. With CodeT test generation augmentation, performance increased to 42.9% pass@1 and 67.8% pass@10.
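pass@k estimates the probability that at least one of k generated samples passes a problem's unit tests. The sketch below implements the unbiased estimator introduced alongside the HumanEval benchmark, 1 - C(n-c, k) / C(n, k) for n samples with c correct, averaged over problems. We assume the CodeT5+ figures follow this standard convention; the function names and sample data are illustrative.

```python
import math


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem, given n generated samples of
    which c pass the unit tests: 1 - C(n - c, k) / C(n, k)."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Any k samples drawn must include at least one correct one.
        return 1.0
    # C(n - c, k) / C(n, k) as a running product, avoiding huge binomials.
    prob_all_fail = math.prod(1.0 - k / i for i in range(n - c + 1, n + 1))
    return 1.0 - prob_all_fail


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average the per-problem estimates; each entry is (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical results for three problems, 10 samples each.
    results = [(10, 3), (10, 0), (10, 10)]
    print(f"pass@1  = {benchmark_pass_at_k(results, 1):.3f}")
    print(f"pass@10 = {benchmark_pass_at_k(results, 10):.3f}")
```

For k = 1 the estimator reduces to c / n, the plain fraction of correct samples, so pass@1 is simply the average per-problem success rate.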

The Python-specialized CodeT5+ 770M-py variant matched models with far more parameters. At 15.5% pass@1, it performed comparably to InCoder 6B (15.2%), GPT-NeoX 20B (15.4%), and PaLM 62B (15.9%) despite having roughly 8-80x fewer parameters.
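The 8-80x range follows from dividing the quoted parameter counts, as this short sketch shows. It treats 770M as 0.77 billion and uses the nominal sizes in the model names; `size_ratios` is an illustrative helper.

```python
def size_ratios(base_billions: float, others: dict[str, float]) -> dict[str, float]:
    """How many times larger each comparison model is than the base model."""
    return {name: size / base_billions for name, size in others.items()}


# Parameter counts in billions, as quoted in this article.
ratios = size_ratios(0.77, {"InCoder 6B": 6.0, "GPT-NeoX 20B": 20.0, "PaLM 62B": 62.0})
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}x the parameters of CodeT5+ 770M")
```

This prints about 7.8x, 26.0x, and 80.5x, which rounds to the 8-80x range cited above.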

| Model | Pass@1 | Pass@10 | Setting |
|---|---|---|---|
| InstructCodeT5+ 16B | 35.0% | 54.5% | Zero-shot |
| InstructCodeT5+ 16B + CodeT | 42.9% | 67.8% | Zero-shot + Test Generation |
| CodeT5+ 770M-py | 15.5% | n/a | Zero-shot |
| InCoder 6B | 15.2% | n/a | Zero-shot |
| GPT-NeoX 20B | 15.4% | n/a | Zero-shot |
| PaLM 62B | 15.9% | n/a | Zero-shot |
| OpenAI code-cushman-001 | 33.5% | n/a | Zero-shot |

The 16B model surpassed OpenAI’s closed-source code-cushman-001, which achieved 33.5% pass@1. This marked state-of-the-art performance among open-source code language models at release time.

CodeT5 Mathematical Programming Capabilities

CodeT5+ 770M achieved 87.4% pass@80 on MathQA-Python benchmarks under finetuning evaluation. On GSM8K-Python tasks, the model recorded 73.8% pass@100 accuracy.
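Pass@80 and pass@100 give the model many attempts per problem, which is why such scores sit far above pass@1. As a hypothetical illustration, assume that each sample solves a given problem independently with probability p. The chance that at least one of k samples succeeds is then 1 - (1 - p)^k. The per-sample rates below are made up for illustration and do not come from the paper.

```python
def pass_at_k_independent(p: float, k: int) -> float:
    """Probability that at least one of k independent samples is correct,
    given a per-sample success probability p for a single problem."""
    if not 0.0 <= p <= 1.0 or k < 1:
        raise ValueError("require 0 <= p <= 1 and k >= 1")
    return 1.0 - (1.0 - p) ** k


if __name__ == "__main__":
    # Hypothetical per-sample success rates, not taken from any benchmark.
    for p in (0.01, 0.05, 0.20):
        print(f"p={p:.2f}: pass@1={pass_at_k_independent(p, 1):.3f}, "
              f"pass@80={pass_at_k_independent(p, 80):.3f}")
```

Real benchmarks mix problems of very different difficulty, so this only builds intuition. It is not how the reported numbers were computed.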

These results exceeded the performance of many models with up to 137 billion parameters, showing that a finetuned sub-billion-parameter model can compete on mathematical reasoning tasks that require generating Python code.

| Benchmark | Model | Performance | Setting |
|---|---|---|---|
| MathQA-Python | CodeT5+ 770M | 87.4% pass@80 | Finetuned |
| GSM8K-Python | CodeT5+ 770M | 73.8% pass@100 | Finetuned |

The sub-billion parameter model’s performance on mathematical programming tasks represents a significant efficiency achievement in the code intelligence domain. Both benchmarks evaluate the model’s ability to generate correct Python programs solving mathematical word problems.

CodeT5 Hugging Face Adoption Metrics

CodeT5-base generated 22,172 monthly downloads on Hugging Face as of 2026. The model accumulated 132 community likes and powers 36 dependent spaces on the platform.

Developers created 86 finetuned models derived from CodeT5-base for specialized applications. An additional 17 adapter models extend the base model’s capabilities for specific domains.

The Hugging Face Hub lists 761+ CodeT5-related models in total. This ecosystem demonstrates the model’s value as a foundation for code intelligence research and commercial applications.

Download counts do not identify who is downloading, but sustained monthly volume at this level signals ongoing use. The derivative model count indicates active community engagement in extending CodeT5’s capabilities.

CodeT5 Downstream Task Performance

CodeT5+ showed improvements across 20+ code-related benchmarks in zero-shot, finetuning, and instruction-tuning evaluations. The model achieved state-of-the-art results on multiple tasks spanning code understanding, generation, and completion.

Text-to-code retrieval tasks showed an average +3.2 MRR improvement across eight benchmarks. Line-level code completion recorded +2.1 average exact match gains across two tasks.
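For readers unfamiliar with these metrics: MRR averages, over queries, the reciprocal rank of the correct result, and exact match counts predictions identical to the reference. The sketch below is minimal. It assumes one correct item per query and treats whitespace trimming as the only normalization. Real benchmarks may normalize differently, and the function names are illustrative.

```python
def mean_reciprocal_rank(rankings: list[list[str]], gold: list[str]) -> float:
    """Average over queries of 1/rank of the correct item (0 if it is not retrieved)."""
    if len(rankings) != len(gold) or not gold:
        raise ValueError("need one ranking per gold answer, and at least one query")
    total = 0.0
    for ranking, answer in zip(rankings, gold):
        for rank, item in enumerate(ranking, start=1):
            if item == answer:
                total += 1.0 / rank
                break
    return total / len(gold)


def exact_match(predictions: list[str], references: list[str]) -> float:
    """Fraction of predicted lines equal to the reference after trimming
    surrounding whitespace (the normalization step is an assumption)."""
    if len(predictions) != len(references) or not references:
        raise ValueError("need one prediction per reference, and at least one")
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)


if __name__ == "__main__":
    # Hypothetical retrieval results: candidate snippet ids, best first.
    rankings = [["s2", "s1", "s3"], ["s1", "s4"], ["s3", "s2"]]
    print(mean_reciprocal_rank(rankings, ["s1", "s1", "s1"]))  # (1/2 + 1 + 0) / 3 = 0.5
    print(exact_match(["x = 1", "y=2"], ["x = 1 ", "y = 2"]))  # 0.5
```

Papers usually report both scores scaled to 0-100, so a +3.2 MRR gain means 3.2 points on that scale.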

| Task Category | Benchmarks | Average Gain over Prior Best |
|---|---|---|
| Text-to-Code Retrieval | 8 | +3.2 MRR |
| Line-Level Code Completion | 2 | +2.1 Exact Match |
| Retrieval-Augmented Code Generation | 2 | +5.8 BLEU-4 |

Retrieval-augmented code generation posted the largest headline number, a +5.8 average BLEU-4 gain across two benchmarks that test whether the model can generate code from retrieved context. Because MRR, exact match, and BLEU-4 use different scales, these three gains are not directly comparable with one another.
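BLEU-4 combines clipped 1- to 4-gram precisions with a brevity penalty. The sketch below is a simplified, unsmoothed, single-reference, sentence-level version over whitespace tokens. Published code-generation results typically use corpus-level BLEU with their own tokenization and smoothing, so this will not reproduce the paper's numbers. The function names are illustrative.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu4(candidate: list[str], reference: list[str]) -> float:
    """Unsmoothed single-reference BLEU-4: geometric mean of clipped 1- to 4-gram
    precisions, times a brevity penalty. Returns 0.0 if any precision is zero."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, 5):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        # Clip each candidate n-gram count by how often it occurs in the reference.
        overlap = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0
        log_precision_sum += math.log(overlap / total) / 4  # uniform weights
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)


if __name__ == "__main__":
    reference = "return a + b ;".split()
    candidate = "return a + b".split()
    print(round(sentence_bleu4(candidate, reference), 3))  # 0.779
```

In the example every n-gram matches, so the score falls below 1.0 only because the brevity penalty of exp(-0.25) punishes the candidate for being one token short.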

CodeT5 Environmental Impact and Training Costs

Training CodeT5-base produced 49.25 kg of CO2 emissions on Google Cloud Platform infrastructure. The provider completely offset these emissions through carbon credit programs.

Open-source release of pretrained models reduces the need for the research community to repeat pretraining. This distribution approach lowers the collective environmental footprint of code intelligence development.

| Metric | CodeT5-base |
|---|---|
| CO2 Emissions During Training | 49.25 kg |
| Carbon Offset Status | Fully offset by provider |
| Training Platform | Google Cloud Platform |

Salesforce documented computational costs to promote transparency in model development. The modest footprint is consistent with CodeT5-base’s comparatively small size of 220 million parameters.

CodeT5 Pretraining Objectives Evolution

The original CodeT5 employed four pretraining objectives: masked span prediction, identifier tagging, masked identifier prediction, and bimodal dual generation. On identifier tagging, the model reached over 99% F1 across all supported programming languages.
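Identifier tagging and masked identifier prediction can be illustrated on Python source. This sketch substitutes Python's standard `tokenize` module for the multi-language parsing that CodeT5 performs. It also borrows T5-style `<extra_id_N>` sentinel names. Both choices are assumptions for illustration, and the helper names are hypothetical. Every occurrence of the same identifier receives the same sentinel, and the target pairs each sentinel with the name it hides.

```python
import io
import keyword
import tokenize

# Layout-only token types that carry no code content for tagging.
_SKIP = {tokenize.NEWLINE, tokenize.NL, tokenize.INDENT,
         tokenize.DEDENT, tokenize.ENDMARKER, tokenize.COMMENT}


def identifier_tags(source: str) -> list[tuple[str, int]]:
    """Identifier tagging: label each code token 1 if it is an identifier, else 0."""
    tags = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type in _SKIP:
            continue
        is_identifier = tok.type == tokenize.NAME and not keyword.iskeyword(tok.string)
        tags.append((tok.string, int(is_identifier)))
    return tags


def mask_identifiers(source: str) -> tuple[str, str]:
    """Masked identifier prediction: replace every occurrence of each distinct
    identifier with one shared sentinel, and build the matching target sequence."""
    sentinels: dict[str, str] = {}
    masked_tokens = []
    for text, is_id in identifier_tags(source):
        if is_id:
            if text not in sentinels:
                sentinels[text] = f"<extra_id_{len(sentinels)}>"
            masked_tokens.append(sentinels[text])
        else:
            masked_tokens.append(text)
    target = " ".join(f"{sentinel} {name}" for name, sentinel in sentinels.items())
    return " ".join(masked_tokens), target


if __name__ == "__main__":
    code = "def add(a, b):\n    return a + b\n"
    print(identifier_tags(code))
    masked, target = mask_identifiers(code)
    print(masked)  # def <extra_id_0> ( <extra_id_1> , <extra_id_2> ) : return <extra_id_1> + <extra_id_2>
    print(target)  # <extra_id_0> add <extra_id_1> a <extra_id_2> b
```

Sharing one sentinel per identifier forces the model to recover a name from every place it is used, rather than from a single local context.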

CodeT5+ expanded the pretraining framework to a mixture of span denoising, text-code contrastive learning, text-code matching, and causal language modeling, with instruction tuning applied afterward to produce InstructCodeT5+. This mixture addresses the pretrain-finetune discrepancy while enabling richer representation learning.
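Text-code contrastive learning pulls paired text and code embeddings together and pushes unpaired ones apart. A generic in-batch InfoNCE loss, sketched below in pure Python, captures the idea. The temperature of 0.07, the one-directional (text-to-code) form, and the function name are our assumptions. CodeT5+'s actual formulation may differ in how negatives are gathered and scored.

```python
import math


def info_nce(text_embs: list[list[float]], code_embs: list[list[float]],
             temperature: float = 0.07) -> float:
    """In-batch text-to-code contrastive loss: text i should score highest
    against its paired code i among all codes in the batch."""
    if len(text_embs) != len(code_embs) or not text_embs:
        raise ValueError("need equal, non-empty batches of paired embeddings")

    def normalize(v: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0.0:
            raise ValueError("cannot normalize a zero vector")
        return [x / norm for x in v]

    texts = [normalize(v) for v in text_embs]
    codes = [normalize(v) for v in code_embs]
    loss = 0.0
    for i, t in enumerate(texts):
        # Cosine similarity to every code in the batch, scaled by the temperature.
        logits = [sum(a * b for a, b in zip(t, c)) / temperature for c in codes]
        # Cross-entropy with the paired code (index i) as the positive;
        # subtracting the max logit keeps exp() numerically stable.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
        loss += log_denominator - logits[i]
    return loss / len(texts)


if __name__ == "__main__":
    texts = [[1.0, 0.1], [0.1, 1.0]]
    print(info_nce(texts, [[0.9, 0.0], [0.0, 0.9]]))  # low: each text nearest its own code
    print(info_nce(texts, [[0.0, 0.9], [0.9, 0.0]]))  # high: each text nearest the wrong code
```

Symmetric variants average this loss with its code-to-text counterpart. Every other item in the batch serves as a free negative example.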

The diverse objective mixture allows CodeT5+ to learn from both unimodal code data and bimodal code-text pairs. This approach improves the model’s ability to understand relationships between natural language descriptions and corresponding code implementations.

CodeT5 Licensing and Distribution Model

CodeT5 is released under the Apache 2.0 license, which permits commercial and research deployment provided users meet its conditions, such as retaining copyright and license notices. Organizations can implement the model without vendor lock-in or licensing fees.

Salesforce archived the official GitHub repository in May 2025. Model weights remain accessible through Hugging Face, and community forks continue active development and maintenance.

| Aspect | Details |
|---|---|
| License Type | Apache 2.0 |
| Repository Status (May 2025) | Archived |
| Hugging Face Availability | Active |
| Community Forks | Active maintenance |

The open licensing approach facilitated widespread adoption across enterprise and academic environments. Developers can modify, distribute, and deploy CodeT5 models without seeking additional permissions or paying licensing fees.

FAQ

How many monthly downloads does CodeT5-base receive?

CodeT5-base generates 22,172 monthly downloads on Hugging Face as of 2026, reflecting sustained interest from the research and development community.

What pass@1 accuracy did CodeT5+ 16B achieve on HumanEval?

InstructCodeT5+ 16B achieved 35.0% pass@1 accuracy on HumanEval benchmarks in zero-shot settings, surpassing OpenAI’s code-cushman-001 model which scored 33.5%.

How much training data did CodeT5+ use?

CodeT5+ trained on 51.5 billion tokens from GitHub repositories, roughly a 50x scale-up over the CodeSearchNet-based corpus of 8.35 million instances used for the original CodeT5.

Which programming languages does CodeT5 support?

CodeT5+ supports nine programming languages including Python, Java, JavaScript, Go, Ruby, PHP, C, C++, and C#. The original CodeT5 supported eight languages without C++.

What license does CodeT5 use?

CodeT5 is released under the Apache 2.0 license, which permits commercial and research deployment without vendor lock-in or licensing fees, subject to conditions such as retaining license notices.

CodeT5 established itself as a foundational model in open-source code intelligence, with 22,172 monthly downloads and 761+ CodeT5-related models on Hugging Face. The instruction-tuned 16B variant’s 35.0% HumanEval pass@1 score was competitive with closed-source alternatives, and the family is released under the Apache 2.0 license. Training on 51.5 billion tokens represented roughly a 50x scale-up over the original CodeSearchNet-based corpus, and the CodeT5+ 770M variant achieved results comparable to models up to 80x larger. The model family’s architectural innovations and benchmark results provide reference points for the code intelligence research community as the field continues to advance.

Sources:

Hugging Face CodeT5 Model Card

CodeT5+ Research Paper on arXiv