Key Stats

Mozilla DeepSpeech represents a significant milestone in open-source speech recognition technology. The project transformed automatic speech recognition by making powerful models accessible to developers worldwide through an open-source framework built on TensorFlow.

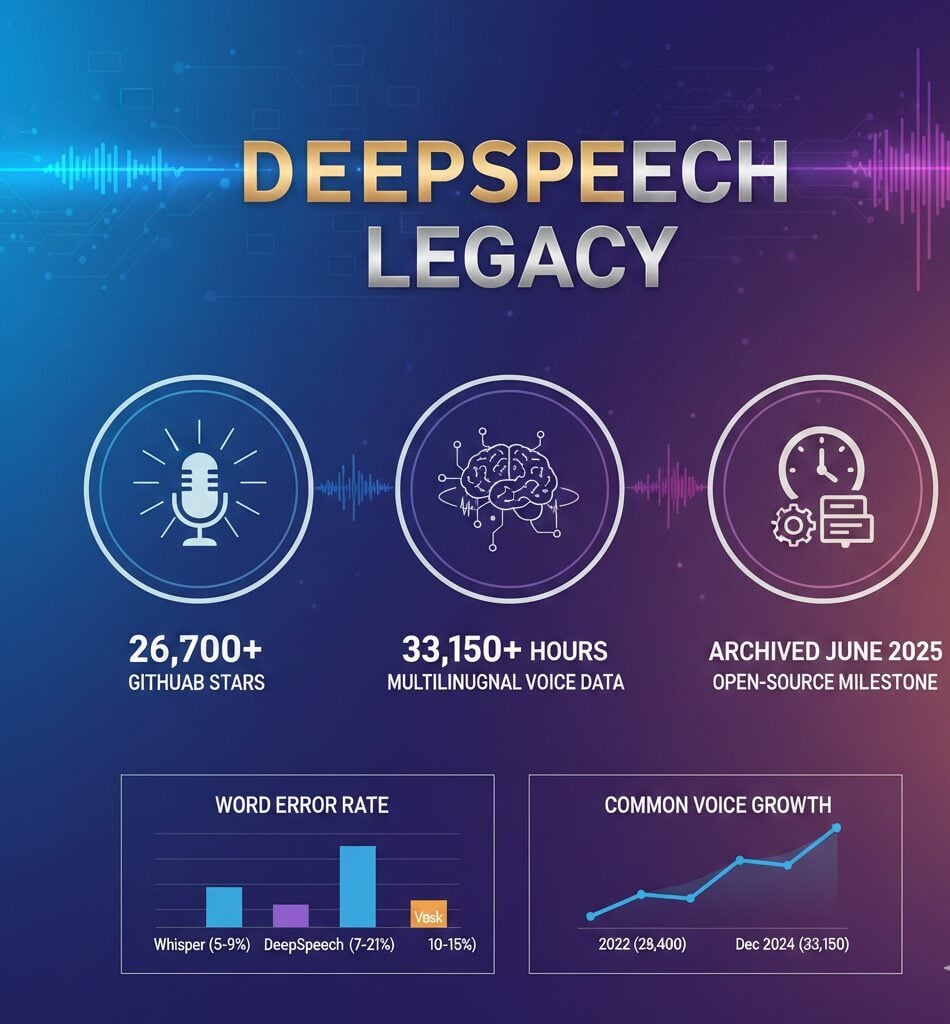

Launched in 2017 and officially archived in June 2025, DeepSpeech achieved remarkable adoption with over 26,700 GitHub stars. The technology demonstrated competitive performance with 7.06% word error rates on clean audio benchmarks.

DeepSpeech’s impact extends beyond its codebase through contributions to the Mozilla Common Voice dataset, which now contains over 33,150 hours of multilingual speech data. The project established foundational benchmarks for edge deployment and lightweight speech recognition solutions.

DeepSpeech History

DeepSpeech Co-founders

Brendan Eich co-founded Mozilla in 1998 and served as chief technology officer before briefly becoming CEO in 2014. He created JavaScript programming language and shaped Mozilla’s technology vision, including support for initiatives like DeepSpeech under Mozilla Research.

Mitchell Baker co-founded Mozilla with Brendan Eich in 1998 and serves as Executive Chairwoman. She provided strategic leadership for Mozilla Foundation and Corporation, overseeing initiatives including DeepSpeech and Common Voice projects aimed at democratizing speech technology.

The Mozilla Research machine learning team initiated and developed DeepSpeech starting in late 2017. This team implemented the architecture based on Baidu’s research, trained models using TensorFlow, and established the open-source framework that attracted thousands of contributors.

DeepSpeech Revenue

DeepSpeech operated as an open-source research project under Mozilla Foundation rather than generating direct revenue. Mozilla funded the initiative through its broader organizational resources derived from partnerships and services.

The project contributed value through ecosystem impact rather than monetization. DeepSpeech enabled developers to implement speech recognition in applications without licensing costs, supporting startups and enterprises implementing voice interfaces. The technology powered voice command systems, medical dictation applications, and industrial automation solutions across diverse sectors.

Mozilla’s investment in DeepSpeech aligned with its mission to promote openness and accessibility on the internet. The organization measured success through adoption metrics including 26,700 GitHub stars, 529 dependent projects, and contributions to the 33,150-hour Common Voice dataset rather than revenue generation.

DeepSpeech Acquisitions

Mozilla DeepSpeech did not pursue acquisitions as an open-source research project. The technology development followed an organic growth model focused on community contributions and collaborative development rather than corporate expansion strategies.

The project instead influenced the broader speech recognition ecosystem through knowledge sharing and open-source releases. Several commercial entities built upon DeepSpeech’s foundation, including Coqui AI, which created a fork called Coqui STT before discontinuing development in 2024.

Mozilla’s approach emphasized collaboration over consolidation. The organization partnered with universities, research institutions, and technology companies to advance speech recognition capabilities while maintaining the open-source nature of the project. This strategy enabled widespread adoption across embedded systems, IoT devices, and edge computing applications.

The Common Voice dataset developed alongside DeepSpeech represented Mozilla’s most significant contribution beyond the core technology. This growing collection of multilingual speech data continues supporting numerous open-source and commercial speech recognition projects worldwide, extending DeepSpeech’s impact beyond the original codebase.

DeepSpeech Marketcap

DeepSpeech operated as an open-source project under Mozilla Foundation without market capitalization. Mozilla Corporation, the for-profit subsidiary that funded DeepSpeech development, remains privately held without public stock trading.

The project’s value manifested through ecosystem contributions rather than market valuation. DeepSpeech influenced the $12.63 billion to $18.89 billion global speech recognition market in 2024, projected to reach $81-92 billion by 2032 with compound annual growth rates exceeding 20%.

DeepSpeech’s technological foundation enabled cost savings for companies implementing speech recognition without proprietary licensing fees. The open-source model democratized access to advanced speech technology, particularly benefiting resource-constrained organizations and researchers advancing the field.

DeepSpeech Competitors

DeepSpeech competed in the open-source automatic speech recognition space against both commercial platforms and alternative open-source solutions. The competitive landscape included proprietary services from major technology companies alongside community-driven projects.

The speech recognition market features established commercial players including Google Voice Search and Google Assistant, Amazon Alexa and AWS Transcribe, Apple Siri, and Microsoft Azure Speech. These proprietary solutions offer extensive language coverage and integration with broader ecosystems but require ongoing cloud costs and raise privacy concerns.

Open-source alternatives emerged as direct competitors to DeepSpeech, each targeting specific deployment scenarios and offering distinct advantages. OpenAI’s Whisper gained prominence through superior multilingual capabilities, while Vosk focused on offline mobile efficiency. The competitive dynamics shifted following DeepSpeech’s discontinuation in 2025, with active projects capturing market share from legacy implementations.

| Competitor | Type | Primary Strength | Status |

|---|---|---|---|

| OpenAI Whisper | Open Source | Multilingual robustness (97 languages) | Active |

| Vosk | Open Source | Offline mobile efficiency | Active |

| Wav2Vec 2.0 (Meta) | Open Source | Self-supervised learning architecture | Active |

| Coqui STT | Open Source | DeepSpeech successor fork | Discontinued (2024) |

| Google Cloud Speech-to-Text | Commercial | Cloud scalability and accuracy | Active |

| Amazon Transcribe | Commercial | AWS ecosystem integration | Active |

| Microsoft Azure Speech | Commercial | Enterprise features and support | Active |

| AssemblyAI | Commercial | Developer-focused API | Active |

| Speechmatics | Commercial | High-accuracy real-time transcription | Active |

| Rev.ai | Commercial | Human-in-the-loop accuracy | Active |