FinBERT has emerged as a leading specialized language model for financial sentiment analysis. Developed by Prosus AI and extended through academic research, this transformer-based model classifies financial text more accurately than general-purpose alternatives. It is applied to corporate reports, earnings transcripts, and market communications.

FinBERT Download Statistics and Adoption Rates

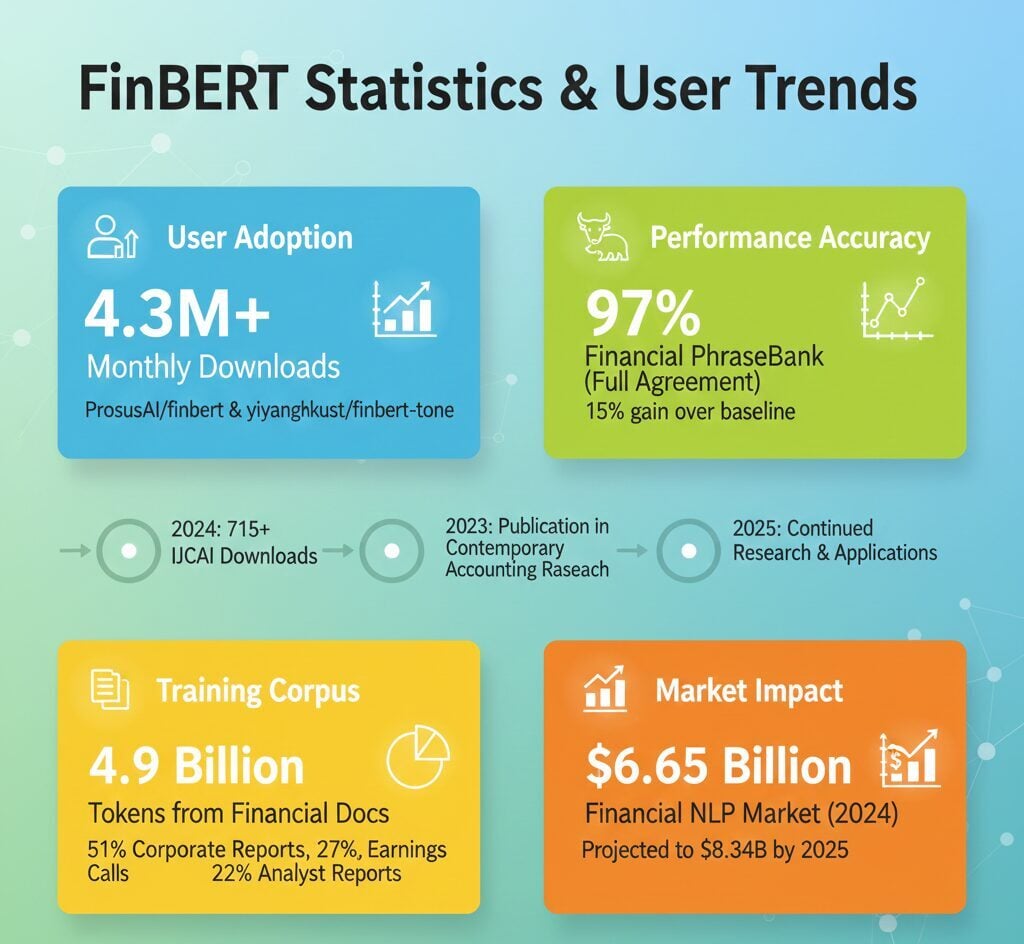

The primary FinBERT variants on Hugging Face demonstrate substantial enterprise adoption. ProsusAI/finbert recorded 2.3 million monthly downloads as of 2024. The yiyanghkust/finbert-tone variant reached 2.1 million monthly downloads.

Combined monthly downloads exceed 4.3 million across both models. These figures position FinBERT as the preferred choice for financial sentiment tasks among NLP practitioners and technology companies.

The ProsusAI version spawned 74 fine-tuned derivatives and 3 quantized versions. The yiyanghkust variant, trained on 4.9 billion tokens from financial documents, generated 18 fine-tuned adaptations for specialized use cases.

FinBERT Performance Accuracy on Financial Benchmarks

Benchmark evaluations reveal FinBERT’s superiority over traditional approaches. The model achieved 97% accuracy on Financial PhraseBank sentences with full annotator agreement. This marks a 6 percentage point improvement over the previous state-of-the-art FinSSLX model.

On the complete Financial PhraseBank dataset with all annotations, FinBERT recorded 86% accuracy. This represents a 15 percentage point gain compared to the HSC baseline. Research published in Contemporary Accounting Research showed FinBERT captures 18% more textual informativeness from earnings conference calls.

The model rarely confuses opposite polarities. Only 5% of misclassifications occur between negative and positive categories, indicating strong polarity detection capabilities; most remaining errors involve the harder boundary between positive and neutral sentiment.
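The share of errors that fall between two classes can be read off a confusion matrix. The following is a minimal sketch of that calculation; the counts are invented purely for illustration and are not FinBERT evaluation results, and the function name `error_share_by_pair` is hypothetical.

```python
from collections import Counter

# Hypothetical confusion counts: (true_label, predicted_label) -> count.
# These numbers are made up for illustration only.
CONFUSION = {
    ("positive", "positive"): 500,
    ("neutral", "neutral"): 900,
    ("negative", "negative"): 300,
    ("positive", "neutral"): 30,
    ("neutral", "positive"): 40,
    ("negative", "neutral"): 10,
    ("neutral", "negative"): 15,
    ("positive", "negative"): 2,
    ("negative", "positive"): 3,
}


def error_share_by_pair(confusion):
    """Return the fraction of all misclassifications per unordered label pair."""
    errors = Counter()
    for (true_label, predicted), count in confusion.items():
        if true_label != predicted:
            # frozenset merges both directions, e.g. pos->neg and neg->pos.
            errors[frozenset((true_label, predicted))] += count
    total_errors = sum(errors.values())
    if total_errors == 0:
        return {}
    return {pair: count / total_errors for pair, count in errors.items()}


shares = error_share_by_pair(CONFUSION)
for pair, share in shares.items():
    print(" vs ".join(sorted(pair)), f"{share:.0%}")
```

Grouping both error directions into one unordered pair matches how a statement such as "errors between negative and positive" is usually meant.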

FinBERT Training Corpus and Model Architecture

FinBERT’s domain expertise stems from comprehensive training on financial communication data. The training corpus contained 4.9 billion tokens distributed across multiple source types.

Corporate reports including 10-K and 10-Q filings contributed 2.5 billion tokens, representing 51% of the total corpus. Earnings call transcripts provided 1.3 billion tokens at 27% of the dataset. Analyst reports accounted for 1.1 billion tokens, comprising 22% of training data.

| Training Source | Token Count | Percentage |
|---|---|---|
| Corporate Reports | 2.5 billion | 51% |
| Earnings Transcripts | 1.3 billion | 27% |
| Analyst Reports | 1.1 billion | 22% |
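The percentages in the table follow directly from the token counts. As a quick check, the short sketch below recomputes each share from the reported figures; the dictionary and function names are illustrative only.

```python
# Token counts in billions, as reported in the table above.
TOKENS_BILLIONS = {
    "Corporate Reports": 2.5,
    "Earnings Transcripts": 1.3,
    "Analyst Reports": 1.1,
}


def corpus_shares(tokens):
    """Return each source's share of the corpus as a whole-number percentage."""
    total = sum(tokens.values())
    return {source: round(100 * count / total) for source, count in tokens.items()}


total_billions = round(sum(TOKENS_BILLIONS.values()), 1)
shares = corpus_shares(TOKENS_BILLIONS)

print(f"Total: {total_billions} billion tokens")
for source, pct in shares.items():
    print(f"{source}: {pct}%")
```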

The fine-tuning process utilized 10,000 manually annotated sentences from analyst reports. These sentences were categorized across positive, negative, and neutral sentiment labels. The model architecture follows BERT’s bidirectional encoder structure with 110 million parameters.

FinBERT vs Other Models Performance Comparison

Head-to-head evaluations confirm FinBERT’s methodological advantages over alternative approaches. The model achieved 97% accuracy on financial sentiment classification, establishing it as the baseline for domain-specific tasks.

ULMFiT reached 93% accuracy, trailing FinBERT by 4 percentage points. General-purpose BERT recorded 89% accuracy, showing an 8 percentage point gap. LSTM networks achieved 84% accuracy, while convolutional neural networks reached 82%.

Traditional methods demonstrated larger performance gaps. Random Forest models achieved 78% accuracy, a 19 percentage point difference. The Loughran-McDonald dictionary method, previously the standard for financial text analysis, recorded 71% accuracy.
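The percentage point gaps quoted above are simple differences against FinBERT's 97%. A small sketch that tabulates them from the accuracy figures in this section (the helper name `gaps_to_reference` is hypothetical):

```python
# Accuracy figures (in percent) as quoted in this section.
ACCURACY = {
    "FinBERT": 97,
    "ULMFiT": 93,
    "BERT": 89,
    "LSTM": 84,
    "CNN": 82,
    "Random Forest": 78,
    "Loughran-McDonald dictionary": 71,
}


def gaps_to_reference(accuracy, reference="FinBERT"):
    """Return the percentage point gap between the reference model and each other model."""
    ref = accuracy[reference]
    return {name: ref - acc for name, acc in accuracy.items() if name != reference}


gaps = gaps_to_reference(ACCURACY)
for name, gap in gaps.items():
    print(f"{name}: {gap} percentage points behind FinBERT")
```

These are percentage point differences, not relative improvements; a 19 point gap over a 78% baseline corresponds to a relative gain of roughly 24%.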

Domain-specific pre-training provides substantial benefits over general BERT. The performance gap widens further with smaller training datasets, where FinBERT maintained 81.3% accuracy using only 10% of training data.

Financial NLP Market Size and FinBERT Applications

FinBERT operates within a rapidly expanding natural language processing market. The NLP in finance market reached $6.65 billion in 2024, with projections indicating growth to $8.34 billion by 2025. This represents a 25.5% compound annual growth rate.
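The growth rate can be checked against the two market-size figures. The sketch below applies the standard compound annual growth rate formula over the single 2024 to 2025 period; with the rounded inputs quoted above it yields roughly 25.4%, so the quoted 25.5% presumably reflects unrounded source figures (an assumption, since the underlying data is not given here).

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, as quoted above.
growth = cagr(start_value=6.65, end_value=8.34, years=1)
print(f"Implied growth rate: {growth:.1%}")
```

Over a single year the CAGR reduces to plain year-over-year growth; the exponent only matters for multi-year projections.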

Sentiment analysis accounts for 31% of the financial NLP market, making it the largest segment. Risk management and fraud detection comprise 24% of applications. Customer service automation represents 19% of use cases. Similar trends are visible across major technology platforms that process financial data.

Unstructured data volumes at financial institutions grow by an estimated 55% to 65% annually. This sustained growth creates continued demand for specialized language models like FinBERT. North America holds 36% of the market share, with software solutions accounting for 65% of industry spending.

FinBERT Industry Adoption by Financial Sector

Enterprise deployment patterns reveal primary applications across financial workflows. Hedge funds lead institutional adoption at 68%, utilizing FinBERT-derived sentiment scores as alpha signals for quantitative trading strategies.
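How sentiment labels become a numeric signal varies by firm and is not described in the source. One simple possibility, sketched below under stated assumptions, is to average signed confidence scores across sentences. The loader uses the Hugging Face `transformers` text-classification pipeline with the `ProsusAI/finbert` checkpoint; the `net_sentiment` aggregation rule and both function names are hypothetical, and the sample predictions are hand-written rather than real model output.

```python
def load_finbert(model_id="ProsusAI/finbert"):
    """Build a text-classification pipeline (needs `transformers` and a backend such as PyTorch)."""
    # Imported lazily so the aggregation helper below runs without the library installed.
    from transformers import pipeline

    return pipeline("text-classification", model=model_id)


def net_sentiment(predictions):
    """Average signed confidence: +score for positive, -score for negative, 0 for neutral."""
    sign = {"positive": 1.0, "negative": -1.0, "neutral": 0.0}
    if not predictions:
        return 0.0
    signed = [sign[p["label"].lower()] * p["score"] for p in predictions]
    return sum(signed) / len(signed)


# Hand-written examples in the pipeline's output format, not real model output.
sample = [
    {"label": "positive", "score": 0.9},
    {"label": "negative", "score": 0.6},
    {"label": "neutral", "score": 0.8},
]
signal = net_sentiment(sample)
print(f"Net sentiment: {signal:+.2f}")
```

With the library installed, real predictions could be obtained with something like `net_sentiment(load_finbert()(["Revenue rose 12% year over year."]))`. Lower-casing the label keeps the helper usable with checkpoints that capitalize their label names.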

Investment banks recorded 56% AI adoption rates, primarily for analyst report processing and market research. Asset management firms showed 48% adoption, focusing on ESG screening automation. Retail banking achieved 38% adoption for customer feedback analysis.

Research from Credgenics demonstrated that sentiment-driven approaches enable lending institutions to recover between 70% and 95% of bad debts. Collection rates increased by 15-20% through automated sentiment analysis. These advances mirror innovations from leading technology firms in machine learning applications.

FinBERT ESG Classification and Specialized Variants

Beyond sentiment analysis, FinBERT demonstrates effectiveness for Environmental, Social, and Governance text classification. The model achieved state-of-the-art performance on ESG discussion identification, outperforming traditional methods by 18%.

Specialized variants extend core capabilities to additional financial tasks. FinBERT-ESG focuses on sustainability reporting and ESG-aligned investment screening. FinBERT-FLS identifies forward-looking statements in regulatory disclosures. Both variants are publicly available through Hugging Face.

The model family addresses growing institutional demand for automated ESG analysis. In earnings call analysis, FinBERT captured 18% more textual informativeness than baseline approaches. This capability supports compliance monitoring and regulatory document processing.

FinBERT Technical Specifications and Implementation

The model specifications reflect FinBERT’s foundation on BERT-base architecture with domain enhancements. The system contains 110 million parameters across 12 transformer layers, each with 12 attention heads. The hidden size is 768 dimensions.
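The 110 million figure can be reproduced approximately from the architecture's dimensions. The sketch below counts the weights of a BERT-base encoder; the vocabulary size (30,522), feed-forward width (3,072), maximum sequence length (512), and layer count (12) are the values of the public `bert-base-uncased` configuration and are assumptions here, since this article states only the hidden size. FinBERT variants that use a custom financial vocabulary will differ slightly in the embedding term.

```python
def bert_parameter_count(
    vocab_size=30522,     # assumed: bert-base-uncased vocabulary
    hidden=768,           # hidden size, as stated in the text
    layers=12,            # assumed: BERT-base encoder depth
    intermediate=3072,    # assumed: feed-forward inner width
    max_positions=512,    # assumed: maximum sequence length
    type_vocab=2,         # assumed: segment (token type) embeddings
):
    """Count trainable parameters of a BERT-base style encoder with pooler."""
    layer_norm = 2 * hidden  # scale and bias

    # Token, position, and segment embeddings plus one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm

    # Query, key, value, and output projections (weights + biases) plus LayerNorm.
    # The number of attention heads does not change this count: heads split the hidden size.
    attention = 4 * (hidden * hidden + hidden) + layer_norm

    # Two dense layers of the feed-forward block plus LayerNorm.
    feed_forward = (
        (hidden * intermediate + intermediate)
        + (intermediate * hidden + hidden)
        + layer_norm
    )

    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler


total = bert_parameter_count()
print(f"{total:,} parameters (~{total / 1e6:.0f}M)")
```

Most of the budget sits in the twelve encoder layers, with the token embedding matrix as the next largest block, which is why swapping in a different vocabulary shifts the total only modestly.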

Output classifications include three categories: positive, negative, and neutral sentiment. FinBERT employs six pre-training tasks compared to two for standard BERT. Tripling the number of pre-training objectives is intended to give the model a firmer grasp of financial terminology. The architecture principles resemble those used by major tech companies in their AI systems.
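A three-class classification head produces one raw score (logit) per category, and a softmax turns those scores into probabilities. The sketch below illustrates that last step in plain Python. The label order in `ASSUMED_LABELS` is an assumption for illustration; in practice the order should be read from the checkpoint's own `id2label` configuration, as it differs between FinBERT variants.

```python
import math

# Assumed label order, for illustration only; check the model's id2label mapping.
ASSUMED_LABELS = ("positive", "negative", "neutral")


def softmax(logits):
    """Convert raw scores to probabilities, subtracting the max for numerical stability."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels=ASSUMED_LABELS):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


label, confidence = classify([2.0, 0.1, -1.0])
print(label, f"{confidence:.2f}")
```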

Model compatibility spans PyTorch, TensorFlow, and JAX frameworks. This facilitates integration across diverse machine learning infrastructure. The fine-tuning dataset comprised 10,000 annotated sentences from analyst reports.

FinBERT Research Impact and Academic Citations

Academic adoption metrics reflect FinBERT’s influence on computational finance research. The model recorded over 715 total downloads from the IJCAI repository, with 313 downloads in the most recent 12-month period.

Hugging Face community discussions generated 49 threads combined across variants. Fine-tuned derivative models totaled 92 across both primary versions. The primary publication appeared in Contemporary Accounting Research in 2023.

Research building upon FinBERT continued expanding through 2024 and 2025. Studies appeared in IEEE Big Data, ACM AI in Finance, and MDPI Electronics. The model serves as a baseline for comparison with emerging financial language models.

FAQs

What accuracy does FinBERT achieve on financial sentiment analysis?

FinBERT achieves 97% accuracy on Financial PhraseBank sentences with full annotator agreement, representing a 6 percentage point improvement over previous state-of-the-art models. On complete datasets with all annotations, accuracy reaches 86%.

How many tokens was FinBERT trained on?

FinBERT was pre-trained on 4.9 billion tokens from financial documents including 2.5 billion tokens from corporate reports, 1.3 billion from earnings transcripts, and 1.1 billion from analyst reports.

What is the monthly download volume for FinBERT models?

Combined monthly downloads exceed 4.3 million across the two primary FinBERT variants on Hugging Face. ProsusAI/finbert records 2.3 million monthly downloads, while yiyanghkust/finbert-tone reaches 2.1 million downloads.

Which industries use FinBERT most frequently?

Hedge funds lead adoption at 68%, followed by investment banks at 56%, asset management firms at 48%, and retail banking at 38%. Applications include trading signals, analyst report processing, and ESG screening.

How does FinBERT compare to general BERT on financial tasks?

FinBERT outperforms general BERT by 8 percentage points on financial sentiment tasks, achieving 97% versus 89% accuracy. Domain-specific pre-training provides significant advantages, particularly with smaller training datasets.