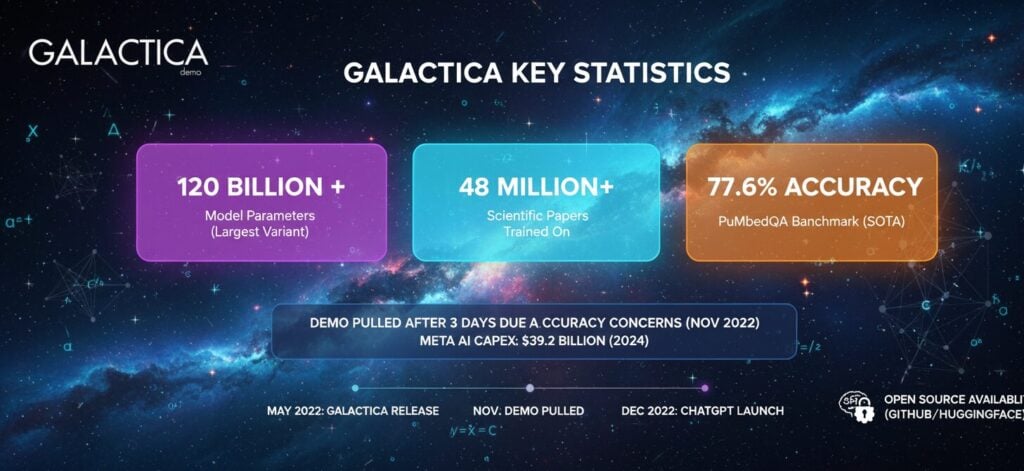

Meta’s Galactica scaled to 120 billion parameters and was trained on more than 48 million scientific papers, yet its public demo was pulled after just three days in November 2022. The model outperformed GPT-3 on LaTeX equation tasks by 19.2 percentage points and set new records on medical question-answering benchmarks. This article examines the verified statistics behind Galactica’s architecture, performance, and lasting influence on Meta’s AI strategy.

Galactica Key Statistics

- Galactica was trained on a 106 billion token corpus that included more than 48 million scientific papers

- The largest Galactica model contains 120 billion parameters

- Galactica achieved 77.6% accuracy on PubMedQA, setting a new state-of-the-art record

- Meta removed the public demo after 3 days following accuracy concerns

- Meta’s AI capital expenditure reached $39.2 billion in 2024, partly influenced by Galactica lessons

Galactica Training Data and Corpus

The training corpus, internally called “NatureBook,” contained over 48 million scientific papers, 360 million in-context citations, and 50 million unique references. Meta’s researchers curated this dataset specifically for scientific knowledge tasks rather than relying on web-crawled data.

The specialized corpus included 2 million code samples and 8 million lecture notes and textbooks. Training processed approximately 450 billion tokens, equivalent to about 4.25 epochs over the 106 billion token corpus, without signs of the overfitting that repeated passes over the same data can cause.
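The relationship between corpus size, epochs, and tokens processed is simple multiplication. The sketch below uses only the 106 billion token corpus size and the 4.25 epoch figure quoted in this article; the variable names are illustrative.

```python
# Figures quoted in this article
corpus_tokens = 106e9   # size of the training corpus, in tokens
epochs = 4.25           # number of passes over the corpus

# Total tokens processed during training = corpus size x epochs
tokens_seen = corpus_tokens * epochs

print(f"Tokens processed: {tokens_seen / 1e9:.1f} billion")
```

The result lands at roughly 450 billion tokens, which matches the training figure stated above.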

Galactica Model Architecture Statistics

Meta released Galactica as a family of five models ranging from 125 million to 120 billion parameters. The smallest variant, Galactica Mini, targeted lightweight applications, while the 120 billion parameter flagship handled complex reasoning tasks.

| Model Variant | Parameters | Primary Use Case |
|---|---|---|
| Galactica Mini | 125 million | Lightweight applications |
| Galactica Base | 1.3 billion | Standard research tasks |
| Galactica Standard | 6.7 billion | Enhanced capabilities |
| Galactica Large | 30 billion | Complex reasoning |
| Galactica Huge | 120 billion | Maximum performance |

Training the 120 billion parameter model required 128 NVIDIA A100 80GB nodes. Meta optimized inference to run on a single A100 node, prioritizing accessibility for researchers. Performance scaled consistently with model size across all benchmark tasks.

Galactica Benchmark Performance

Galactica outperformed GPT-3 on LaTeX equation generation with a score of 68.2% compared to 49.0%. On the MATH benchmark, it achieved 20.4% accuracy versus PaLM 540B’s 8.8%, representing an 11.6 percentage point advantage.

The model set new state-of-the-art results on medical benchmarks including 77.6% on PubMedQA and 52.9% on MedMCQA Dev. Mathematical MMLU scores reached 41.3%, exceeding DeepMind’s Chinchilla by 5.6 percentage points.
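Percentage-point margins like those above are plain differences between two scores, which is not the same thing as relative improvement. As a quick check, the sketch below recomputes the margins from the scores quoted in this article. The function names are illustrative, and the Chinchilla score is inferred from the stated 5.6-point gap rather than quoted directly.

```python
def margin_pp(score, baseline):
    """Absolute gap between two accuracy scores, in percentage points."""
    return round(score - baseline, 1)


def relative_gain(score, baseline):
    """Improvement relative to the baseline score, in percent."""
    return round((score - baseline) / baseline * 100, 1)


# (Galactica score, comparison score) in percent, as quoted in this article
comparisons = {
    "LaTeX equations vs GPT-3": (68.2, 49.0),
    "MATH vs PaLM 540B": (20.4, 8.8),
}

for task, (galactica, baseline) in comparisons.items():
    print(f"{task}: +{margin_pp(galactica, baseline)} points, "
          f"{relative_gain(galactica, baseline)}% relative gain")

# Chinchilla's mathematical MMLU score implied by the 5.6-point gap
implied_chinchilla = round(41.3 - 5.6, 1)
print(f"Implied Chinchilla score: {implied_chinchilla}%")
```

Keeping the two measures separate matters most on low-baseline tasks such as MATH, where a modest point gap corresponds to a large relative gain.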

Citation Prediction Accuracy

Citation prediction accuracy ranged from 36.6% to 69.1% depending on the evaluation dataset. The original technical paper also documented a bias toward highly cited papers over lesser-known work.

Galactica Timeline and Public Response

Meta launched the Galactica public demo on November 15, 2022. Within hours, researchers documented instances of the model generating factually incorrect scientific content. Meta removed the demo on November 17, 2022, after just three days of public availability.

Michael Black, Director of the Max Planck Institute for Intelligent Systems, warned the model could enable “deep scientific fakes.” Meta’s VP of AI Research Joelle Pineau later acknowledged the company “probably misjudged” public expectations for scientific AI.

ChatGPT launched on November 30, 2022, 15 days after Galactica’s debut and 13 days after its removal. Meta’s Llama release followed roughly 100 days after the demo was withdrawn. The Galactica experience directly informed Meta’s more cautious approach to subsequent model releases.
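The intervals in this timeline depend on whether they are counted from Galactica’s launch or from its removal. A small date calculation makes the difference explicit. The Galactica dates come from this article; the ChatGPT (November 30, 2022) and Llama (February 24, 2023) dates are assumptions supplied here for the calculation.

```python
from datetime import date

# Dates stated in this article
galactica_launch = date(2022, 11, 15)
galactica_removed = date(2022, 11, 17)

# Assumed public release dates (not stated in the article)
chatgpt_launch = date(2022, 11, 30)
llama_release = date(2023, 2, 24)

# Subtracting two dates yields a timedelta; .days gives whole days
days_launch_to_chatgpt = (chatgpt_launch - galactica_launch).days
days_removal_to_chatgpt = (chatgpt_launch - galactica_removed).days
days_removal_to_llama = (llama_release - galactica_removed).days

print(f"Galactica launch to ChatGPT: {days_launch_to_chatgpt} days")
print(f"Galactica removal to ChatGPT: {days_removal_to_chatgpt} days")
print(f"Galactica removal to Llama: {days_removal_to_llama} days")
```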

Galactica Infrastructure Requirements

Training the 120 billion parameter model required 128 nodes of NVIDIA A100 GPUs, each GPU carrying 80 GB of memory. Meta deliberately capped the maximum model size so that inference would fit on a single node, keeping the model accessible to more researchers.

| Specification | Requirement |
|---|---|
| Training Hardware (120B) | 128 NVIDIA A100 80GB nodes |
| Inference Hardware (120B) | Single A100 node |
| Training Tokens Processed | 450 billion |
| Memory Per GPU | 80 GB |
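A back-of-the-envelope calculation shows why single-node inference is plausible at this size. The sketch assumes 16-bit weights (2 bytes per parameter) and an 8-GPU node, neither of which is stated in this article, and it counts weights only, ignoring activations and cache memory.

```python
PARAMS = 120e9        # parameters in the largest model (from the article)
GPU_MEMORY_GB = 80    # memory of one A100 80GB GPU (from the article)

BYTES_PER_PARAM = 2   # ASSUMPTION: 16-bit (fp16/bf16) weights
GPUS_PER_NODE = 8     # ASSUMPTION: a typical 8-GPU A100 server

# Memory needed just to hold the weights, in gigabytes
weights_gb = PARAMS * BYTES_PER_PARAM / 1e9

# Aggregate GPU memory available on one node under the assumptions above
node_memory_gb = GPU_MEMORY_GB * GPUS_PER_NODE

fits_on_one_gpu = weights_gb <= GPU_MEMORY_GB
fits_on_one_node = weights_gb <= node_memory_gb

print(f"Weights: {weights_gb:.0f} GB")
print(f"Fits on one GPU: {fits_on_one_gpu}")
print(f"Fits on one node ({node_memory_gb} GB): {fits_on_one_node}")
```

Under these assumptions the weights alone exceed a single 80 GB GPU but fit within one node, which is consistent with the single-node inference target described above.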

The metaseq library developed by Meta AI’s NextSys team powered training infrastructure. This same foundation later supported Llama model development.

Meta AI Investment After Galactica

Meta reported $39.2 billion in AI capital expenditures for 2024, with Q4 alone reaching $14.8 billion. The company projects spending between $66 billion and $72 billion in 2025, which implies year-over-year growth of roughly 68% to 84%.
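The growth implied by the 2025 guidance can be worked out directly from the figures in this section. The sketch below uses only those numbers; the function and variable names are illustrative.

```python
def yoy_growth(previous, current):
    """Year-over-year growth from previous to current, in percent."""
    return (current - previous) / previous * 100


capex_2024 = 39.2               # $ billions, full-year 2024
capex_q4_2024 = 14.8            # $ billions, Q4 2024
guidance_2025 = (66.0, 72.0)    # $ billions, projected range for 2025

low = yoy_growth(capex_2024, guidance_2025[0])
high = yoy_growth(capex_2024, guidance_2025[1])
midpoint = yoy_growth(capex_2024, sum(guidance_2025) / 2)
q4_share = capex_q4_2024 / capex_2024 * 100

print(f"Implied 2025 growth: {low:.1f}% to {high:.1f}% (midpoint {midpoint:.1f}%)")
print(f"Q4 share of 2024 spending: {q4_share:.1f}%")
```

The low end of the guidance works out to just under 70% growth, and the fourth quarter alone accounts for more than a third of the 2024 total.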

CEO Mark Zuckerberg disclosed Meta expects to spend at least $600 billion on U.S. data centers and AI infrastructure by 2028. Meta AI now serves over 700 million monthly active users with projections suggesting 1 billion users within 2025.

Galactica Legacy and Open Source Availability

All five Galactica model variants remain available on GitHub and HuggingFace. Meta’s VP of AI Research confirmed ongoing requests from researchers seeking access to the models years after the original release.
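For readers who want to try the released weights, a minimal sketch using the Hugging Face `transformers` library follows. It assumes the checkpoints are published under `facebook/galactica-*` repository names and load with the OPT model class, as shown on the public model cards. The `CHECKPOINTS` mapping and the `generate_text` helper are illustrative names, not part of any official API, and the code needs `transformers` and PyTorch installed.

```python
# ASSUMPTION: Hugging Face repository IDs for the sizes in the model table
CHECKPOINTS = {
    "mini": "facebook/galactica-125m",
    "base": "facebook/galactica-1.3b",
    "standard": "facebook/galactica-6.7b",
    "large": "facebook/galactica-30b",
    "huge": "facebook/galactica-120b",
}


def generate_text(prompt, size="mini", max_new_tokens=60):
    """Download a checkpoint (on first use) and continue the prompt."""
    # Imported lazily so this module loads even without transformers installed
    from transformers import AutoTokenizer, OPTForCausalLM

    repo_id = CHECKPOINTS[size]
    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = OPTForCausalLM.from_pretrained(repo_id)

    # Tokenize the prompt into PyTorch tensors and generate a continuation
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0])
```

Calling `generate_text("The Transformer architecture [START_REF]")` would fetch the smallest checkpoint and ask it to complete a citation. Given the accuracy problems described earlier, any output should be treated as unverified.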

The Llama 3.1 model has recorded 5.8 million HuggingFace downloads, demonstrating the success of Meta’s revised release strategy informed by Galactica’s reception. Meta’s generative AI tools now attract more than 4 million advertisers.

FAQ

How many parameters does Galactica have?

Galactica ranges from 125 million to 120 billion parameters across five model variants. The largest, at 120 billion parameters, delivers maximum performance on complex scientific reasoning tasks.

Why was Galactica removed after three days?

Meta removed Galactica after researchers documented instances of factually incorrect scientific content and fictitious citations. The gap between public expectations and research reality proved too significant.

What data was Galactica trained on?

Galactica was trained on a 106 billion token corpus called NatureBook, which included more than 48 million scientific papers, 360 million in-context citations, 2 million code samples, and 8 million lecture notes and textbooks.

How does Galactica compare to GPT-3?

Galactica outperformed GPT-3 on LaTeX equation tasks by 19.2 percentage points, scoring 68.2% compared to GPT-3’s 49.0%. It also set new records on medical question-answering benchmarks.

Is Galactica still available to use?

All five Galactica model variants remain available as open-source downloads on GitHub and HuggingFace. The public demo was removed, but researchers can still access the model weights.