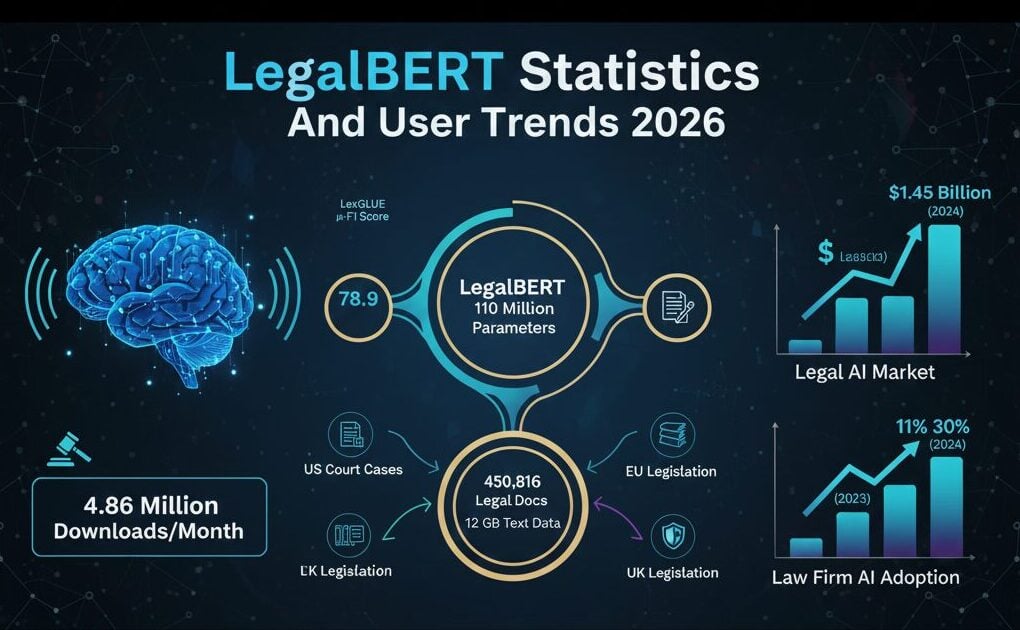

LegalBERT recorded 4.86 million downloads on Hugging Face Hub in December 2025, positioning it among the most widely used domain-specific language models for legal natural language processing. The model achieved a 78.9 μ-F1 harmonic mean on the LexGLUE benchmark, outperforming general-purpose BERT-BASE by 2.2 points. Developed at the Athens University of Economics and Business, LegalBERT has 110 million parameters and was pre-trained on 450,816 legal documents totaling 12 GB of text.

This data compilation presents verified statistics on LegalBERT’s technical specifications, benchmark performance, adoption metrics, and market positioning within the expanding legal AI ecosystem.

LegalBERT Key Statistics

- LegalBERT recorded 4.86 million downloads in December 2025, reflecting widespread adoption in legal NLP applications.

- The model achieves a 78.9 μ-F1 harmonic mean on LexGLUE, a 2.2-point improvement over BERT-BASE across seven legal tasks.

- LegalBERT’s pre-training corpus of 450,816 documents draws on six legal sources, including 164,141 US court cases and 116,062 EU legislative documents.

- The legal AI market reached USD 1.45 billion in 2024 and is projected to reach USD 3.90 billion by 2030, a 17.3% CAGR.

- Law firm AI adoption nearly tripled, from 11% in 2023 to 30% in 2024, with large firms reporting a 39% adoption rate.

LegalBERT Model Architecture and Scale

LegalBERT uses the BERT-BASE architecture, with 110 million parameters pre-trained for legal language understanding. The model stacks 12 hidden layers with 768-dimensional hidden states and 12 attention heads.

Its 32,000-token vocabulary was built with SentencePiece on legal text. The maximum sequence length is 512 tokens, and pre-training ran for 1 million steps.
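Because the encoder accepts at most 512 tokens, long documents such as court opinions usually have to be truncated or split before encoding. The sketch below splits a list of token IDs into overlapping windows. The helper name `chunk_token_ids`, the 128-token overlap, and the two positions reserved for BERT's `[CLS]` and `[SEP]` special tokens are illustrative assumptions, not part of LegalBERT's published setup.

```python
from typing import List


def chunk_token_ids(
    ids: List[int], max_len: int = 512, stride: int = 128, n_special: int = 2
) -> List[List[int]]:
    """Split token IDs into overlapping windows that fit a fixed-length encoder.

    Hypothetical helper: assumes n_special positions per window are reserved
    for special tokens such as [CLS] and [SEP], which are added afterwards.
    """
    window = max_len - n_special  # content tokens that fit in one window
    if not 0 <= stride < window:
        raise ValueError("stride must be non-negative and smaller than the window")
    step = window - stride  # consecutive windows share `stride` tokens
    chunks = []
    start = 0
    while True:
        chunks.append(ids[start:start + window])
        if start + window >= len(ids):  # this window reaches the end of the document
            break
        start += step
    return chunks


# A 1,200-token document becomes three windows of at most 510 content tokens.
doc = list(range(1200))
windows = chunk_token_ids(doc)
print([len(w) for w in windows])  # [510, 510, 436]
```

The overlap keeps text near window boundaries visible with some surrounding context; combining the per-window outputs (for example by averaging or max-pooling) is a separate design choice.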

| Specification | LegalBERT-BASE | LegalBERT-SMALL |
|---|---|---|
| Total Parameters | 110 Million | 35 Million |
| Hidden Layers | 12 | 6 |
| Hidden Dimensionality | 768 | 512 |
| Attention Heads | 12 | 8 |
| Vocabulary Size | 32,000 tokens | 32,000 tokens |

LegalBERT-SMALL runs inference roughly four times faster than LegalBERT-BASE while remaining competitive in accuracy. With 35 million parameters, it suits resource-constrained environments where processing speed determines operational viability.
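The parameter counts in the table above can be approximately reproduced from the architecture specifications. The sketch below counts the weights of a BERT-style encoder; the function name is hypothetical, and it assumes standard BERT choices that the table does not state: a feed-forward width of four times the hidden size (also for SMALL), 512 learned position embeddings, two segment embeddings, and a pooler layer.

```python
def bert_param_count(
    vocab: int,
    hidden: int,
    layers: int,
    max_pos: int = 512,
    type_vocab: int = 2,
    ffn_mult: int = 4,
) -> int:
    """Estimate parameters of a BERT-style encoder, including the pooler but no task head.

    Assumes standard BERT choices: feed-forward width of ffn_mult * hidden,
    512 learned positions, and 2 segment (token type) embeddings.
    """
    ffn = ffn_mult * hidden
    # Token, position, and segment embeddings, plus one LayerNorm (weight and bias).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Self-attention: query, key, value, and output projections, each hidden x hidden plus bias.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward block: hidden -> ffn -> hidden, each projection with a bias.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    # Two LayerNorms per layer (after attention and after the feed-forward block).
    layer_norms = 2 * (2 * hidden)
    per_layer = attention + feed_forward + layer_norms
    # Pooler: one dense layer applied to the [CLS] representation.
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler


base = bert_param_count(vocab=32_000, hidden=768, layers=12)
small = bert_param_count(vocab=32_000, hidden=512, layers=6)
print(f"{base:,}")   # 110,617,344
print(f"{small:,}")  # 35,825,152
```

Both estimates land close to the 110 million and 35 million figures. Under these assumptions, embeddings make up about 46% of the SMALL model, and its transformer layers hold about 4.5 times fewer weights than BASE's, which is broadly consistent with the reported speedup, though measured speed also depends on hardware and sequence length.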

LegalBERT Training Dataset Composition

The pre-training corpus comprises 12 GB of English legal text from multiple jurisdictions. US court cases account for 36.4% of the documents, with 164,141 cases from the Case Law Access Project.

EU and UK legislation together account for 39.5% of the documents: 116,062 EUR-Lex documents and 61,826 pieces of UK legislation. Contracts from SEC EDGAR contribute 76,366 documents covering commercial agreements.

| Source Category | Document Count | Share of Documents |
|---|---|---|
| US Court Cases | 164,141 | 36.4% |
| EU Legislation | 116,062 | 25.7% |
| US Contracts | 76,366 | 16.9% |
| UK Legislation | 61,826 | 13.7% |
| ECJ Cases | 19,867 | 4.4% |
| ECHR Cases | 12,554 | 2.8% |

European Court of Justice (ECJ) cases add 19,867 documents, and European Court of Human Rights (ECHR) cases contribute 12,554. This distribution gives cross-jurisdictional coverage weighted toward common law and EU regulatory frameworks.
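The percentages in the table are shares of the 450,816 documents, not of the 12 GB of text, and can be recomputed from the raw counts. The minimal sketch below does so in plain Python. The rounded shares sum to 99.9% rather than 100% because each is rounded to one decimal place.

```python
# Document counts per source, as listed in the table above.
corpus = {
    "US Court Cases": 164_141,
    "EU Legislation": 116_062,
    "US Contracts": 76_366,
    "UK Legislation": 61_826,
    "ECJ Cases": 19_867,
    "ECHR Cases": 12_554,
}

total = sum(corpus.values())
# Share of documents per source, rounded to one decimal place.
shares = {source: round(100 * n / total, 1) for source, n in corpus.items()}

print(f"{total:,}")  # 450,816
for source, pct in shares.items():
    print(f"{source:<15} {pct:>5.1f}%")

# Combined EU and UK legislation share, computed from raw counts before rounding.
legislation = round(100 * (corpus["EU Legislation"] + corpus["UK Legislation"]) / total, 1)
print(legislation)  # 39.5
```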

LegalBERT Benchmark Performance Results

LegalBERT achieved a 78.9 μ-F1 harmonic mean on LexGLUE (Legal General Language Understanding Evaluation), 2.2 points above BERT-BASE’s 76.7 μ-F1 across seven legal NLP tasks.

LegalBERT’s largest gain over general-purpose BERT came on SCOTUS classification, where it scored 76.4 μ-F1, an 8.1-point improvement. On CaseHOLD legal holding prediction it reached 75.3% accuracy, 4.5 points above BERT-BASE.

LEDGAR contract provision classification reached 88.2 μ-F1, and UNFAIR-ToS terms-of-service analysis reached 96.0 μ-F1. On European Court of Human Rights Task B, a multi-label classification task, LegalBERT recorded 80.4 μ-F1 and 74.7 m-F1 (micro- and macro-averaged F1, respectively).

Model Comparison Across Averaging Methods

LegalBERT leads under arithmetic, harmonic, and geometric averaging alike. Its arithmetic mean of 79.8 μ-F1 compares with 79.4 for both CaseLaw-BERT and RoBERTa Large.

| Model | Arithmetic Mean (μ-F1 / m-F1) | Harmonic Mean (μ-F1 / m-F1) | Geometric Mean (μ-F1 / m-F1) |
|---|---|---|---|
| LegalBERT | 79.8 / 72.0 | 78.9 / 70.8 | 79.3 / 71.4 |
| CaseLaw-BERT | 79.4 / 70.9 | 78.5 / 69.7 | 78.9 / 70.3 |
| RoBERTa Large | 79.4 / 70.8 | 78.4 / 69.1 | 78.9 / 70.0 |
| BERT-BASE | 77.8 / 69.5 | 76.7 / 68.2 | 77.2 / 68.8 |

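Harmonic mean scoring addresses aggregation concerns by penalizing inconsistent performance: a single weak task pulls the harmonic mean down more than it pulls down the arithmetic mean. LegalBERT’s harmonic mean m-F1 of 70.8 exceeds BERT-BASE’s 68.2 by 2.6 points, suggesting its gains are broad-based across diverse legal NLP tasks rather than driven by one or two.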
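To illustrate why the harmonic mean penalizes inconsistency, the sketch below compares two hypothetical models with identical arithmetic means, one consistent and one uneven, using Python's standard `statistics` module (Python 3.8 or later). The per-task scores are invented for illustration and are not LexGLUE results. The second part recomputes the LegalBERT minus BERT-BASE gaps from the averaging table above.

```python
from statistics import fmean, geometric_mean, harmonic_mean

# Invented per-task scores (not LexGLUE results): same arithmetic mean, different consistency.
models = {
    "consistent": [80.0, 80.0],
    "uneven": [95.0, 65.0],
}
for name, scores in models.items():
    print(
        f"{name:<10} arithmetic={fmean(scores):.2f} "
        f"geometric={geometric_mean(scores):.2f} "
        f"harmonic={harmonic_mean(scores):.2f}"
    )
# consistent arithmetic=80.00 geometric=80.00 harmonic=80.00
# uneven     arithmetic=80.00 geometric=78.58 harmonic=77.19

# LegalBERT minus BERT-BASE per averaging method, from the table above (μ-F1, m-F1).
legalbert = {"arithmetic": (79.8, 72.0), "harmonic": (78.9, 70.8), "geometric": (79.3, 71.4)}
bert_base = {"arithmetic": (77.8, 69.5), "harmonic": (76.7, 68.2), "geometric": (77.2, 68.8)}
gaps = {
    method: tuple(round(a - b, 1) for a, b in zip(legalbert[method], bert_base[method]))
    for method in legalbert
}
print(gaps)
# {'arithmetic': (2.0, 2.5), 'harmonic': (2.2, 2.6), 'geometric': (2.1, 2.6)}
```

For positive scores the ordering harmonic ≤ geometric ≤ arithmetic always holds, with equality only when all scores are equal, so the gap between the harmonic and arithmetic means is itself a rough measure of unevenness.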

LegalBERT Community Adoption Metrics

Hugging Face Hub recorded 4.86 million downloads of legal-bert-base-uncased in December 2025. The community has created more than 80 fine-tuned derivatives that extend LegalBERT to specialized applications.

The model has accumulated 287 likes and is used in 66 Spaces, evidence of active implementation. Nine model adapters enable parameter-efficient fine-tuning for specific legal domains.

Five official LegalBERT family variants address different deployment scenarios. The legal-bert-small-uncased variant prioritizes inference speed, while domain-specific models target contract analysis, EU regulation, and human rights law.
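A deployment can map its use cases to these five variants. The sketch below assumes the models are published on Hugging Face Hub under the `nlpaueb` namespace (verify the exact IDs before use); the use-case keys and the helpers `pick_variant` and `load_encoder` are hypothetical. Loading relies on the `transformers` library's `AutoTokenizer` and `AutoModel` classes and needs network access, so that import happens inside the function.

```python
from typing import Dict

# Assumed Hub IDs in the nlpaueb namespace; use-case keys are hypothetical labels.
LEGALBERT_VARIANTS: Dict[str, str] = {
    "general": "nlpaueb/legal-bert-base-uncased",
    "fast": "nlpaueb/legal-bert-small-uncased",
    "contracts": "nlpaueb/bert-base-uncased-contracts",
    "eu_regulation": "nlpaueb/bert-base-uncased-eurlex",
    "human_rights": "nlpaueb/bert-base-uncased-echr",
}


def pick_variant(use_case: str) -> str:
    """Return the Hub ID for a use case, falling back to the general model."""
    return LEGALBERT_VARIANTS.get(use_case, LEGALBERT_VARIANTS["general"])


def load_encoder(use_case: str):
    """Load tokenizer and encoder for the chosen variant (needs `transformers` and network access)."""
    from transformers import AutoModel, AutoTokenizer  # imported lazily

    model_id = pick_variant(use_case)
    return AutoTokenizer.from_pretrained(model_id), AutoModel.from_pretrained(model_id)


print(pick_variant("contracts"))  # nlpaueb/bert-base-uncased-contracts
print(pick_variant("tax_law"))    # nlpaueb/legal-bert-base-uncased
```

Falling back to the general model keeps unknown use cases working, at the cost of silently skipping a specialized variant that might suit them better.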

Legal AI Market Growth Driving LegalBERT Implementation

The global legal AI market reached USD 1.45 billion in 2024, according to Grand View Research, and is projected to reach USD 3.90 billion by 2030, a 17.3% compound annual growth rate (CAGR).
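CAGR relates a start value S, an end value E, and a span of n years by E = S(1 + r)^n. Applying it to the figures above is a useful sanity check: compounding the 2024 base of USD 1.45 billion at 17.3% for six years gives about USD 3.78 billion, while reaching USD 3.90 billion from that base implies roughly 17.9%. The quoted 17.3% therefore likely uses a different window; market reports often compound from the first forecast year (here presumably 2025), but that is an assumption not confirmed against the report.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


# Market figures quoted above, in USD billions.
print(f"{cagr(1.45, 3.90, 6):.1%}")       # 17.9%: implied rate for 2024 -> 2030
print(f"{project(1.45, 0.173, 6):.2f}")   # 3.78: 2024 base grown at 17.3% for six years
# 2025 base that would make 17.3% over 2025 -> 2030 consistent with USD 3.90 billion.
print(f"{3.90 / (1 + 0.173) ** 5:.2f}")   # 1.76
```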

The natural language processing segment is projected to grow at a 17.0% CAGR through 2030. Machine learning and deep learning held a 63% market share in 2024, supporting demand for specialized legal models.

North America holds a 46.2% market share, while Asia Pacific shows a 20.0% growth rate. Expansion of the NLP segment supports LegalBERT adoption for contract analysis, legal research, and document review automation.

Law Firm AI Implementation Trends

Law firm AI adoption reached 30% in 2024, up from 11% in 2023, according to American Bar Association data, a near-tripling within one year.

Large firms with 51 or more lawyers reported a 39% AI adoption rate, compared with 20% among firms with 50 or fewer lawyers. Individual lawyer usage increased from 27% to 31%, while firm-wide implementation declined from 24% to 21%.

| Metric | 2023 | 2024 | Change |
|---|---|---|---|
| Overall AI Adoption | 11% | 30% | +19 points |
| Large Firms (51+ lawyers) | – | 39% | – |
| Small/Mid Firms (≤50) | – | 20% | – |
| Individual Usage | 27% | 31% | +4 points |

At 39% versus 20%, large firms’ adoption rate is 95% higher than that of smaller firms, nearly double, suggesting that demand for AI tools, including domain-optimized models, is concentrated in high-volume enterprise legal operations.
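The figures in this section mix percentage-point changes (+19 points, +4 points) with relative changes ("nearly tripled", "95% higher"). The short sketch below computes both from the ABA numbers above; the helper names are illustrative.

```python
def point_change(before: float, after: float) -> float:
    """Difference in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting value, as a fraction."""
    return (after - before) / before


# ABA figures quoted above, in percent of respondents.
print(point_change(11, 30), f"{30 / 11:.2f}x")                 # 19 2.73x: "nearly tripled"
print(f"{relative_change(20, 39):.0%}")                        # 95%: large vs small/mid firms
print(point_change(27, 31), f"{relative_change(27, 31):.0%}")  # 4 15%: individual usage
print(point_change(24, 21))                                    # -3: firm-wide implementation
```

A 4-point rise in individual usage is a 15% relative increase, so the two framings can give very different impressions of the same change.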

LegalBERT Variant Specialization

The LegalBERT family includes domain-specific variants pre-trained on subsets of the corpus. The bert-base-uncased-contracts variant draws on the 76,366 US contracts for commercial agreement analysis.

The bert-base-uncased-eurlex variant targets EU regulatory compliance using European legislation. The bert-base-uncased-echr variant specializes in human rights law with ECHR case training data.

The ECHR variant predicts masked torture-related terms with over 99% confidence, suggesting strong adaptation to the precise legal language that human rights case analysis requires.
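A claim like this can be checked with masked-token prediction: mask the key term in a sentence and read the probability assigned to the top prediction. The sketch below assumes the ECHR variant is available as `nlpaueb/bert-base-uncased-echr` on Hugging Face Hub and uses the `transformers` fill-mask pipeline. `predict_masked` and `top_prediction` are hypothetical helper names, and the sample predictions are placeholders, not model output.

```python
from typing import Dict, List, Tuple


def top_prediction(predictions: List[Dict]) -> Tuple[str, float]:
    """Return the highest-scoring (token, probability) pair from fill-mask output."""
    best = max(predictions, key=lambda p: p["score"])
    return best["token_str"], best["score"]


def predict_masked(
    text: str, model_id: str = "nlpaueb/bert-base-uncased-echr", top_k: int = 5
) -> List[Dict]:
    """Run masked-token prediction (needs `transformers` and network access to fetch the model)."""
    from transformers import pipeline  # imported lazily so top_prediction works without it

    fill_mask = pipeline("fill-mask", model=model_id)
    return fill_mask(text, top_k=top_k)


# Offline illustration with placeholder output in the pipeline's dict format (not real results).
sample = [
    {"score": 0.25, "token_str": "token_b"},
    {"score": 0.75, "token_str": "token_a"},
]
print(top_prediction(sample))  # ('token_a', 0.75)
```

With the model downloaded, `top_prediction(predict_masked("The applicant was subjected to [MASK] while in police custody."))` returns the most likely filler and its probability for that sentence.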

FAQ

How many downloads does LegalBERT receive monthly?

LegalBERT recorded 4.86 million downloads on Hugging Face Hub in December 2025, positioning it among the most widely used domain-specific transformer models for legal natural language processing.

What performance advantage does LegalBERT have over BERT?

LegalBERT achieves 78.9 μ-F1 harmonic mean on LexGLUE benchmarks, outperforming BERT-BASE by 2.2 points. The model shows strongest gains on SCOTUS classification with an 8.1-point improvement over general-purpose BERT.

How many documents were used to train LegalBERT?

LegalBERT was pre-trained on 450,816 documents comprising 12 GB of English legal text. The corpus includes 164,141 US court cases, 116,062 EU legislative documents, 76,366 US contracts, and documents from UK legislation and European courts.

What is the legal AI market size in 2024?

The global legal AI market reached USD 1.45 billion in 2024 with projections indicating USD 3.90 billion by 2030. The natural language processing segment shows 17.0% projected CAGR, supporting increased demand for specialized legal models.

How many parameters does LegalBERT contain?

LegalBERT-BASE has 110 million parameters across 12 hidden layers with 768-dimensional hidden states. LegalBERT-SMALL contains 35 million parameters and offers roughly four times faster inference for resource-constrained deployments.