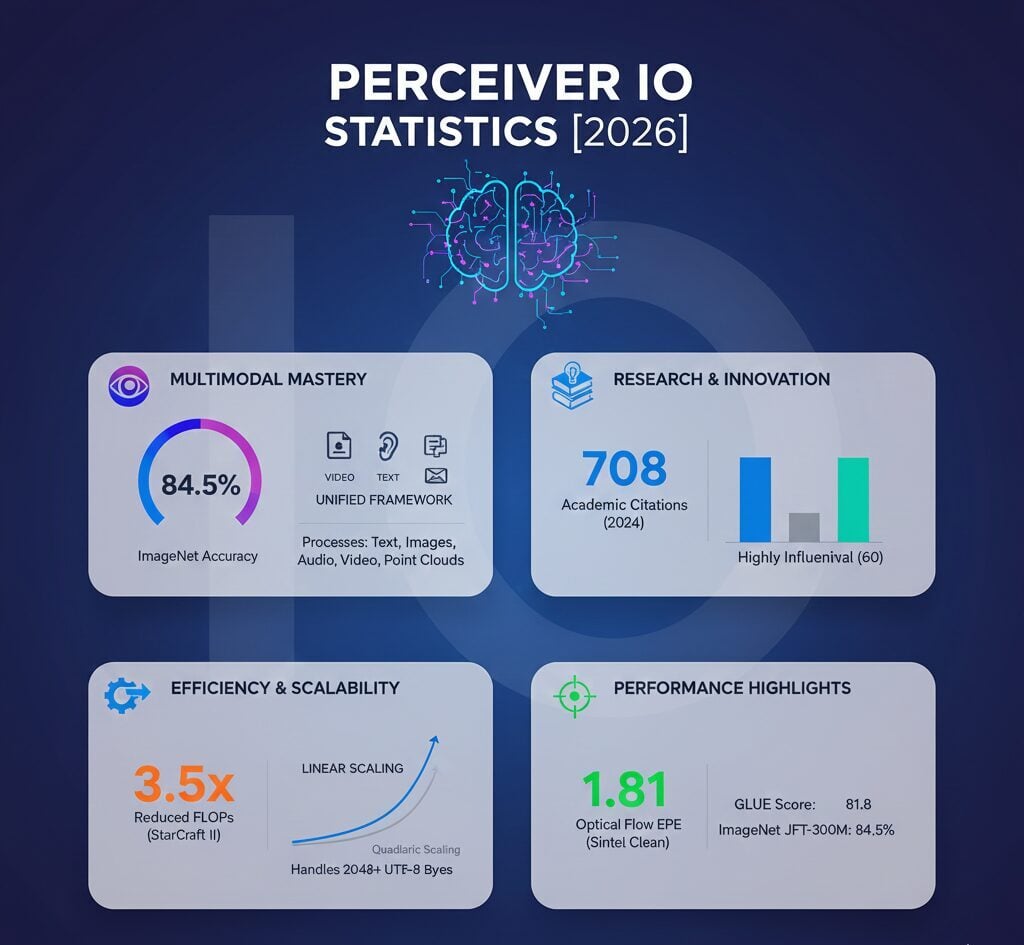

Perceiver IO had accumulated 708 academic citations by 2024 and set state-of-the-art optical flow results at publication time, with an end-point error of 1.81 on Sintel Clean. DeepMind’s multimodal architecture processes text, images, audio, video, and point clouds through a single framework whose cost scales linearly with input size. The model reached 84.5% top-1 ImageNet accuracy with JFT-300M pretraining and, in a separate experiment, cut FLOPs by 3.5x when used inside AlphaStar for StarCraft II.

Published in July 2021 and presented at ICLR 2022, Perceiver IO differs from standard transformers by avoiding quadratic complexity in input length: cross-attention projects the input into a fixed-size latent space. As a result, its language model handles sequences of up to 2,048 UTF-8 bytes, compared with the 512 subword tokens typical of BERT-style transformers.

Perceiver IO Key Statistics

- Perceiver IO accumulated 708 total academic citations as of 2024, with 60 classified as highly influential citations that significantly build upon core concepts

- The architecture achieved an average GLUE score of 81.8 using multitask queries, above BERT Base (81.1), and scored 81.0 when processing raw UTF-8 bytes without tokenization

- Perceiver IO reached 84.5% top-1 accuracy on ImageNet classification when using conv+maxpool preprocessing with JFT-300M pretraining

- The model contains 201 million parameters for language tasks and 212 million parameters for vision, with 256 to 512 latent variables depending on configuration

- When integrated into AlphaStar, Perceiver IO cut FLOPs by 3.5x while preserving the agent's 87% win rate, after only three experimental runs

Perceiver IO Research Impact and Citation Analysis

The paper drew citations across computer vision, natural language processing, and multimodal learning. Semantic Scholar classifies 208 of them as methods citations, meaning the citing papers used or adapted Perceiver IO in their own experiments.

The architecture’s ability to handle arbitrary inputs and outputs without task-specific modifications influenced the development of unified vision-language models. Another 219 are background citations, in which the paper is cited as context for related research.

| Citation Type | Count | Percentage |
|---|---|---|
| Methods Citations | 208 | 29.4% |
| Background Citations | 219 | 30.9% |
| Highly Influential | 60 | 8.5% |
| Other Citations | 221 | 31.2% |

Perceiver IO Language Understanding Performance

The model demonstrated strong results on GLUE benchmark tasks while operating on different input representations. The multitask queries configuration achieved the highest score at 81.8.

Processing raw UTF-8 bytes without vocabulary-based tokenization yielded a GLUE score of 81.0, only slightly below the 81.2 obtained with SentencePiece tokens. This approach removes the tokenizer from preprocessing and handles any language or character set.
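To make the byte-level idea concrete, the Python sketch below (an illustration, not Perceiver IO's own preprocessing code) shows that UTF-8 needs no learned vocabulary: any string maps to integers in 0-255 and back without loss. It also shows why byte sequences run longer than character counts for non-Latin scripts and emoji, which is one reason a 2,048-byte context matters.

```python
def utf8_byte_ids(text: str) -> list:
    """Encode text as raw UTF-8 byte values (0-255); no vocabulary file is needed."""
    return list(text.encode("utf-8"))


def decode_bytes(ids: list) -> str:
    """Invert utf8_byte_ids: turn byte values back into a string."""
    return bytes(ids).decode("utf-8")


# Illustrative strings in different scripts; every one round-trips exactly.
samples = ["hello", "héllo", "こんにちは", "👋"]
for s in samples:
    ids = utf8_byte_ids(s)
    assert decode_bytes(ids) == s
    print(f"{s!r}: {len(s)} characters -> {len(ids)} bytes")
# 'hello': 5 characters -> 5 bytes
# 'héllo': 5 characters -> 6 bytes
# 'こんにちは': 5 characters -> 15 bytes
# '👋': 1 characters -> 4 bytes
```

The trade-off is sequence length: a tokenizer compresses text into fewer units, while bytes keep the vocabulary tiny and universal at the cost of longer inputs.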

The architecture uses 26 processing layers versus BERT Base's 12, showing that a deeper model operating on a smaller latent array remains competitive. It also processes sequences of up to 2,048 bytes versus BERT's 512-token limit.

The DeepMind authors emphasized that because cost scales linearly with input length, a deeper architecture fits within the same compute budget.

Perceiver IO Image Classification Benchmarks

ImageNet results varied significantly based on preprocessing approaches and pretraining datasets. The JFT-300M pretrained variant achieved the highest accuracy at 84.5%.

The learned-position variant reached 72.7% accuracy without any 2D architectural assumptions, which the authors reported as the best result by any model on ImageNet given no 2D architectural or feature information about spatial relationships.

| Model Configuration | Top-1 Accuracy | Pretraining Dataset |
|---|---|---|
| Conv+MaxPool + JFT | 84.5% | JFT-300M |
| Conv Processing | 82.1% | ImageNet |
| ResNet-50 Baseline | 78.6% | ImageNet |
| Learned Position Only | 72.7% | ImageNet |
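As a rough sketch of what the learned-position variant's input looks like, the NumPy code below flattens a 224×224 RGB image into a set of 50,176 pixel vectors and attaches one embedding per flat index. The 256-dimensional embedding size and the random initialization are assumptions for illustration; in a trained model the embedding table would be learned, and nothing in it tells the model which pixels are neighbors.

```python
import numpy as np


def pixels_with_learned_positions(image, pos_dim=256, seed=0):
    """Flatten an H x W x C image into H*W input vectors.

    Each pixel's channels are concatenated with a per-index position embedding.
    ASSUMPTION: pos_dim=256 and random init are illustrative; a real model would
    learn this table. The table is indexed by flat position only, so no 2D grid
    structure is built in.
    """
    h, w, c = image.shape
    rng = np.random.default_rng(seed)
    pos_emb = rng.normal(0.0, 0.02, size=(h * w, pos_dim)).astype(np.float32)
    pixels = image.reshape(h * w, c).astype(np.float32)
    return np.concatenate([pixels, pos_emb], axis=-1)


img = np.zeros((224, 224, 3), dtype=np.uint8)
inputs = pixels_with_learned_positions(img)
print(inputs.shape)  # (50176, 259): 224*224 pixels, 3 channels + 256 position dims
```

Because cross-attention treats its inputs as an unordered set, any spatial knowledge has to be discovered through these embeddings during training, which helps explain the gap to the convolutional variants in the table.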

Perceiver IO Architecture and Computational Efficiency

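The bottleneck design uses cross-attention to project high-dimensional inputs into a fixed-size latent array, followed by self-attention among the latent variables. This eliminates the quadratic scaling of standard transformer self-attention over inputs. To produce outputs, a decoder cross-attends from an array of output queries to the latents, so the output size is set by the number of queries rather than by the latent size.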
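The encode-process-decode flow can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions, not DeepMind's implementation: it uses single-head attention, random weights, a hypothetical width of 64 channels, and only four processing layers, and it omits the MLPs and layer normalization that real Perceiver IO blocks include. The sizes of 2,048 inputs and 256 latents follow the language configuration described in this article.

```python
import numpy as np


def attention(q, kv, wq, wk, wv):
    """Single-head scaled dot-product attention: rows of q attend over rows of kv."""
    Q, K, V = q @ wq, kv @ wk, kv @ wv
    scores = Q @ K.T / np.sqrt(Q.shape[-1])          # (len(q), len(kv))
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over kv positions
    return weights @ V


rng = np.random.default_rng(0)
d = 64                       # ASSUMPTION: shared channel width, chosen for the sketch
M, N, O = 2048, 256, 2048    # inputs, latents, output queries
n_process_layers = 4         # ASSUMPTION: kept small; the language model uses 26


def random_weight():
    """Random projection matrix standing in for learned parameters."""
    return rng.normal(0.0, d ** -0.5, size=(d, d))


inputs = rng.normal(size=(M, d))          # e.g. embedded bytes
latents = rng.normal(size=(N, d))         # learned in a real model
output_queries = rng.normal(size=(O, d))  # e.g. one query per output position

# Encode: latents cross-attend to inputs, cost proportional to M * N.
z = latents + attention(latents, inputs, random_weight(), random_weight(), random_weight())
# Process: self-attention among latents only, cost proportional to N^2 per layer.
for _ in range(n_process_layers):
    z = z + attention(z, z, random_weight(), random_weight(), random_weight())
# Decode: output queries cross-attend to latents, cost proportional to O * N.
outputs = attention(output_queries, z, random_weight(), random_weight(), random_weight())
print(outputs.shape)  # (2048, 64)
```

No step ever forms an M × M matrix; the largest score matrices are 2,048 × 256, which is where the linear scaling comes from.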

The language model contained 201 million parameters while the vision model utilized 212 million parameters with 176 billion FLOPs. The UTF-8 vocabulary size of 262 tokens comprises 256 byte IDs plus six special tokens.
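A byte-level vocabulary of 262 IDs can be sketched as below. Placing the six special tokens at IDs 0-5 and shifting every byte up by 6 is an assumption made for illustration, as are the token names [PAD], [BOS], [EOS], [MASK], [CLS], and [SEP]; the 2,048 padding length matches the sequence limit quoted earlier in this article.

```python
# ASSUMPTION: illustrative layout, special tokens first, then the 256 byte values.
SPECIAL = {"[PAD]": 0, "[BOS]": 1, "[EOS]": 2, "[MASK]": 3, "[CLS]": 4, "[SEP]": 5}
NUM_SPECIAL = len(SPECIAL)
VOCAB_SIZE = 256 + NUM_SPECIAL
MAX_LEN = 2048


def encode(text, max_len=MAX_LEN):
    """Wrap UTF-8 bytes in BOS/EOS, shift past the special IDs, then truncate/pad."""
    ids = [SPECIAL["[BOS]"]]
    ids += [b + NUM_SPECIAL for b in text.encode("utf-8")]
    ids.append(SPECIAL["[EOS]"])
    ids = ids[:max_len]
    return ids + [SPECIAL["[PAD]"]] * (max_len - len(ids))


def decode(ids):
    """Drop special IDs and undo the byte shift."""
    raw = bytes(i - NUM_SPECIAL for i in ids if i >= NUM_SPECIAL)
    return raw.decode("utf-8", errors="replace")


ids = encode("Perceiver")
print(VOCAB_SIZE)   # 262
print(ids[:4])      # [1, 86, 107, 120]  (BOS, then 'P'=80, 'e'=101, 'r'=114 shifted by 6)
print(decode(ids))  # Perceiver
```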

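The number of latent variables ranges from 256 to 512 depending on configuration. Because inputs and outputs interact only with this fixed-size array, compute scales linearly with input and output sizes. Standard transformers, whose self-attention compares every input position with every other, scale quadratically with sequence length.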
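To see why linear scaling matters, the sketch below counts attention-score entries (query-key pairs) for a standard transformer and for Perceiver IO's encode-process-decode pattern. It is a back-of-the-envelope model only: it ignores channel width, number of heads, and MLP cost, and it assumes all 26 processing layers of the language model are latent self-attention layers.

```python
def standard_self_attention_scores(seq_len, layers):
    """Every position attends to every position in every layer: layers * L^2."""
    return layers * seq_len ** 2


def perceiver_io_attention_scores(m_inputs, n_latents, o_outputs, process_layers):
    """Encode (M*N) + latent self-attention (layers * N^2) + decode (O*N)."""
    encode = m_inputs * n_latents
    process = process_layers * n_latents ** 2
    decode = o_outputs * n_latents
    return encode + process + decode


print(f"{standard_self_attention_scores(512, 12):,}")              # 3,145,728
print(f"{perceiver_io_attention_scores(2048, 256, 2048, 26):,}")   # 2,752,512
print(f"{standard_self_attention_scores(2048, 26):,}")             # 109,051,904

# Doubling the sequence: linear growth for Perceiver IO, quadratic otherwise.
for m in (2048, 4096, 8192):
    print(m, perceiver_io_attention_scores(m, 256, m, 26), standard_self_attention_scores(m, 26))
```

Under these assumptions, 26 layers over 2,048 bytes with 256 latents produce fewer score entries than BERT Base's 12 layers over 512 tokens, whereas a 26-layer standard transformer over the same 2,048 bytes would need roughly 40 times more.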

Perceiver IO Optical Flow and Gaming Applications

The architecture achieved state-of-the-art optical flow results at publication time without explicit mechanisms for multiscale correspondence. End-point error (EPE) is the Euclidean distance between predicted and ground-truth flow vectors, averaged over pixels; lower is better.

| Benchmark | Perceiver IO EPE | PWCNet EPE | RAFT EPE |
|---|---|---|---|
| Sintel Clean | 1.81 | 2.45 | 1.94 |
| Sintel Final | 2.42 | 3.39 | 2.71 |
| KITTI | 4.98 | 5.41 | 5.27 |
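Average end-point error, the metric compared in the optical flow table, can be computed as in this NumPy sketch. The (H, W, 2) layout for flow fields and the optional validity mask (useful for sparse ground truth such as KITTI's) are conventions assumed here, not details taken from the paper.

```python
import numpy as np


def average_epe(pred_flow, gt_flow, valid=None):
    """Mean end-point error: per-pixel Euclidean distance between flow vectors.

    pred_flow, gt_flow: arrays of shape (H, W, 2) holding (u, v) displacements.
    valid: optional (H, W) boolean mask; only masked-in pixels are averaged.
    """
    err = np.linalg.norm(pred_flow - gt_flow, axis=-1)  # (H, W) distances
    if valid is not None:
        err = err[valid]
    return float(err.mean())


# Toy example at the 368 x 496 training resolution: every predicted vector is
# off by (3, 4), so every per-pixel error is exactly 5.
gt = np.zeros((368, 496, 2))
pred = np.zeros_like(gt)
pred[..., 0] = 3.0
pred[..., 1] = 4.0
print(average_epe(pred, gt))  # 5.0
```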

Training used the AutoFlow synthetic dataset of 400,000 annotated image pairs at 368×496 resolution. The model outperformed specialized optical flow architectures despite lacking cost volumes, explicit warping, or hierarchical processing.

When integrated into the AlphaStar StarCraft II agent, Perceiver IO replaced the entity Transformer encoder as a drop-in component. The modification preserved the 87% win rate while reducing FLOPs by 3.5x.

Perceiver IO Multimodal Learning Capabilities

The architecture performed multimodal autoencoding, reconstructing video frames, audio samples, and class labels simultaneously. Experiments used 16 frames at 224×224 resolution, preprocessed into roughly 50,000 4×4 patches.

Audio consisted of 30,720 raw samples grouped into 1,920 vectors of 16 samples each. Class labels covered the 700 classes of the Kinetics-700-2020 dataset.
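The input sizes above follow from simple reshapes, sketched in NumPy below. Assuming 4×4 spatial patches taken from each frame, 16 × (224/4) × (224/4) = 50,176 patches, consistent with the roughly 50,000 quoted; grouping 30,720 audio samples 16 at a time gives 1,920 vectors. The function names are hypothetical, and the (T, H, W, C) channel layout is an assumption.

```python
import numpy as np


def video_to_patches(video, patch=4):
    """(T, H, W, C) video -> (T * H/p * W/p, p*p*C) array of flattened patches.

    ASSUMPTION: non-overlapping p x p spatial patches within each frame.
    """
    t, h, w, c = video.shape
    x = video.reshape(t, h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 1, 3, 2, 4, 5)  # (T, H/p, W/p, p, p, C): group pixels by patch
    return x.reshape(t * (h // patch) * (w // patch), patch * patch * c)


def audio_to_vectors(samples, size=16):
    """1-D waveform -> (len / size, size) array of consecutive sample groups."""
    return samples.reshape(-1, size)


video = np.zeros((16, 224, 224, 3), dtype=np.float32)
audio = np.zeros(30_720, dtype=np.float32)

print(video_to_patches(video).shape)  # (50176, 48)
print(audio_to_vectors(audio).shape)  # (1920, 16)
```

The transpose before the final reshape matters: without it, each output row would mix pixels from different patches instead of holding one contiguous 4×4 block.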

By masking the class-label input during evaluation, the autoencoder functioned as a video classifier, reaching 45% top-1 accuracy while maintaining a video reconstruction PSNR of 20.7 dB. The model learned a joint distribution across three distinct modalities within a shared latent space.
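Reconstruction quality here is reported as PSNR, defined as 10·log10(MAX² / MSE) in decibels. The sketch below assumes pixel values scaled to [0, 1], so MAX = 1; the exact normalization used in the experiments is not stated in this article.

```python
import numpy as np


def psnr(reconstruction, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB.

    ASSUMPTION: values lie in [0, max_value]; max_value=1.0 for normalized pixels.
    """
    mse = np.mean((reconstruction - target) ** 2)
    if mse == 0:
        return float("inf")  # identical signals
    return float(10.0 * np.log10(max_value ** 2 / mse))


target = np.zeros((4, 8, 8, 3))
recon = target + 0.1  # uniform error of 0.1 -> MSE of 0.01
print(round(psnr(recon, target), 6))  # 20.0
```

Because the scale is logarithmic, each additional 10 dB corresponds to a tenfold reduction in mean squared error.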

Graph Perceiver IO extended the architecture to graph-structured datasets by incorporating graph positional encoding. Published in the journal Pattern Recognition in 2024, the extension outperformed representative graph neural network baselines.

FAQ

What GLUE benchmark score does Perceiver IO achieve?

Perceiver IO achieves an average GLUE score of 81.8 using multitask queries, 81.2 with SentencePiece tokenization, and 81.0 processing raw UTF-8 bytes. These scores are on par with the BERT Base baseline of 81.1, while Perceiver IO uses 26 processing layers compared with BERT’s 12.

How many citations has Perceiver IO received?

The Perceiver IO paper accumulated 708 academic citations as of 2024, with 60 classified as highly influential. In addition, 208 are methods citations, meaning the citing papers adopted the Perceiver IO methodology in their own research.

What is Perceiver IO’s ImageNet accuracy?

Perceiver IO achieves 84.5% top-1 accuracy on ImageNet with conv+maxpool preprocessing and JFT-300M pretraining, 82.1% with standard convolutional processing, and 72.7% using only learned position embeddings without 2D structural information.

How many parameters does the Perceiver IO language model contain?

The Perceiver IO language model contains approximately 201 million parameters, processing sequences up to 2,048 UTF-8 bytes. The model employs 256 latent variables with 26 processing layers and a vocabulary size of 262 tokens.

What computational efficiency does Perceiver IO provide?

Perceiver IO achieves a 3.5x reduction in FLOPs when integrated into AlphaStar while maintaining the agent's 87% win rate. The architecture scales linearly with input and output sizes, eliminating the quadratic complexity of standard transformer self-attention.