Key SciBERT Statistics

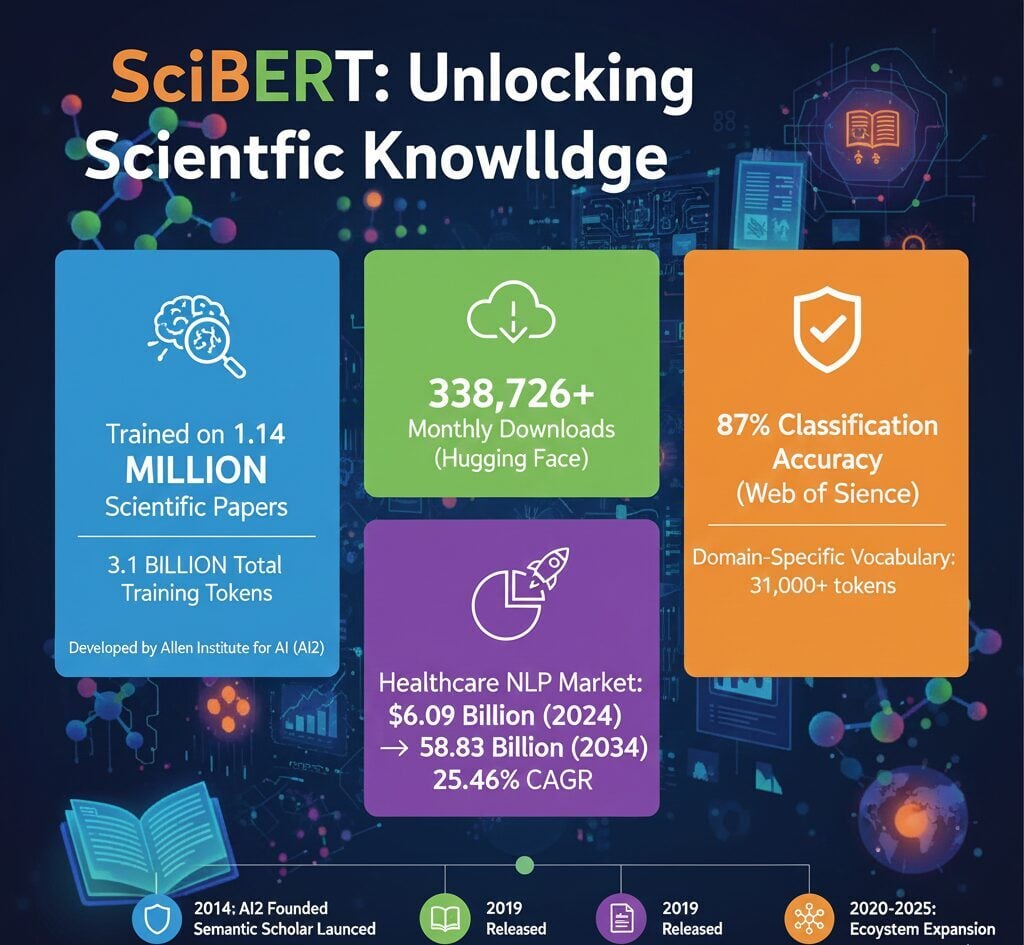

SciBERT is a domain-specific language model developed by the Allen Institute for AI (AI2), trained on 1.14 million scientific papers from Semantic Scholar. Released in November 2019 at EMNLP, it addresses the challenge of processing scientific terminology that general-purpose models struggle with. The model uses a specialized vocabulary of approximately 31,000 tokens optimized for biomedical and computer science texts.

AI2 operates as a non-profit research institute founded by Microsoft co-founder Paul Allen in 2014. SciBERT remains freely available through Hugging Face and GitHub, serving researchers and enterprises working with scientific literature worldwide.

SciBERT History

The development of SciBERT emerged from AI2’s broader mission to advance scientific research through artificial intelligence. Researchers recognized that general-purpose language models like BERT split scientific terms into many small subword fragments, because BERT's general-domain vocabulary lacks entries for much of this terminology. This fragmentation limited their effectiveness for biomedical text mining.
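To illustrate the fragmentation problem, here is a minimal sketch of WordPiece-style greedy longest-match-first tokenization, the subword scheme BERT uses. The function name `wordpiece` and both vocabularies are hypothetical toy examples, not the real BERT vocabulary or SciBERT's SciVocab, so real tokenizers will produce different splits.

```python
# Minimal WordPiece-style tokenizer (greedy longest-match-first).
# ASSUMPTION: the two vocabularies below are tiny hypothetical examples,
# not the actual BERT or SciBERT vocabularies.

def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Split one lowercase word into subword pieces using greedy longest match."""
    pieces: list[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until a vocab hit.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # non-initial pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matched, so the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


# Hypothetical general-domain vocabulary: no entry for the full term.
general_vocab = {"phos", "##pho", "##ryl", "##ation"}
# Hypothetical scientific vocabulary: the full term is a single entry.
scientific_vocab = general_vocab | {"phosphorylation"}

print(wordpiece("phosphorylation", general_vocab))     # → ['phos', '##pho', '##ryl', '##ation']
print(wordpiece("phosphorylation", scientific_vocab))  # → ['phosphorylation']
```

With the general vocabulary the term breaks into four pieces; once the vocabulary contains the whole term, it survives as a single token, which is the effect a domain-specific vocabulary aims for.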

The team at AI2 built SciBERT using full-text papers rather than abstracts alone. This approach captured contextual patterns across methodology sections, results discussions, and conclusions that abstract-only training would miss.

SciBERT Creators

Iz Beltagy, Kyle Lo, and Arman Cohan, three researchers at the Allen Institute for AI, developed SciBERT as part of AI2’s natural language processing research program. Their work addressed the shortage of pretrained language models built specifically for scientific text.

Scientific NLP Market Size

The healthcare NLP market reached $6.09 billion in 2024, with projections indicating growth to $58.83 billion by 2034. This represents a compound annual growth rate of 25.46% over the next decade. North America holds 41.7% of the global market share, driven by electronic health record implementation exceeding 96% among US hospitals.
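The growth rate above follows the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. A minimal Python check, using helper names (`cagr`, `project`) chosen here purely for illustration, confirms that the quoted 2024 and 2034 figures and the 25.46% rate are mutually consistent:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Healthcare NLP figures quoted above, in USD billions, 2024 -> 2034.
rate = cagr(6.09, 58.83, 10)
print(f"implied CAGR: {rate:.2%}")  # → implied CAGR: 25.46%

# Compounding forward at the rounded 25.46% lands close to the quoted 58.83.
print(f"projected 2034 value: {project(6.09, 0.2546, 10):.2f}")
```

Applied to the broader-market figures in the next paragraph ($29.71 billion in 2024 to $158.04 billion in 2032, eight years), `cagr(29.71, 158.04, 8)` gives an implied rate of roughly 23.2% per year.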

The broader global NLP market stood at $29.71 billion in 2024 and analysts project it will reach $158.04 billion by 2032. This growth creates sustained demand for domain-specific language models like SciBERT that accurately process scientific terminology.

Pharmaceutical companies increasingly adopt NLP for literature mining, with 45% already using these technologies for drug discovery and research. Over 50% of biotech firms deploy AI-driven NLP tools for processing clinical data. Together with a reported 71% enterprise adoption rate for generative AI, this pharmaceutical uptake is expanding demand for specialized models.

SciBERT Competitors

SciBERT competes within a growing ecosystem of domain-specific BERT variants, each targeting different aspects of scientific and biomedical text processing. BioBERT focuses specifically on biomedical literature, while PubMedBERT is pretrained exclusively on PubMed abstracts.

The competitive landscape includes models from major research institutions and technology companies developing their own domain-adapted transformers. Some organizations like IBM have invested in specialized NLP systems for healthcare applications.

| Model | Developer | Primary Domain | Training Corpus |
|---|---|---|---|
| BioBERT | Korea University / DMIS Lab | Biomedical | PubMed abstracts + PMC full texts |
| PubMedBERT | Microsoft Research | Biomedical/Clinical | PubMed abstracts only |
| BlueBERT | NIH/NCBI | Biomedical/Clinical | PubMed + MIMIC-III clinical notes |
| ClinicalBERT | MIT | Clinical | MIMIC-III clinical notes |
| MatSciBERT | IIT Delhi | Materials Science | Materials science papers |
| ChemBERT | Various Research Groups | Chemistry | Chemistry literature |
| BioLinkBERT | Stanford | Biomedical | PubMed with citation links |
| ScholarBERT | Various | Academic/General Science | Multi-domain academic papers |
| BERT-base | Google | General Purpose | Wikipedia + BooksCorpus |
| RoBERTa | Meta AI | General Purpose | Expanded web corpus |

SciBERT’s advantage lies in its multi-domain scientific training, which covers both biomedical (82%) and computer science (18%) papers. This broader coverage can help it generalize across scientific disciplines better than narrowly focused alternatives. More than 50 active Hugging Face Spaces use the model, an indication of its adoption across diverse scientific domains.

SciBERT Performance Benchmarks

SciBERT achieves 87% accuracy on the Web of Science text classification benchmark, representing a 3-percentage-point improvement over base BERT. Named entity recognition performance varies by domain, with F1-scores reaching 94.30% on chemical entity recognition (BC4CHEMD) and 89.64% on materials science tasks.

For clinical trial eligibility criteria extraction, SciBERT achieves F1-scores between 0.622 and 0.715. Highly specialized alternatives like PubMedBERT can outperform SciBERT on purely clinical datasets, reaching F1-scores of 0.715 to 0.836 on specific clinical NER tasks. SciBERT's approach of building a domain-specific vocabulary has influenced later domain-specific variants, including models built for semiconductor research.
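The F1-scores above (reported both as percentages such as 94.30% and as fractions such as 0.715, i.e. 71.5%) are the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below assumes exact-match, entity-level scoring, where a predicted entity counts only if its span and label both match; individual benchmarks may use other matching rules. The function name `prf1` and the example spans are hypothetical.

```python
# Entity-level precision, recall, and F1 with exact span+label matching.
# ASSUMPTION: entities are (start, end, label) tuples; spans are hypothetical.

def prf1(gold: set[tuple[int, int, str]],
         pred: set[tuple[int, int, str]]) -> tuple[float, float, float]:
    """Return (precision, recall, F1); a prediction is correct only on exact match."""
    true_pos = len(gold & pred)
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of P and R
    return precision, recall, f1


gold = {(0, 2, "CHEM"), (5, 6, "CHEM"), (9, 11, "CHEM"), (14, 15, "CHEM")}
# The third predicted span has the wrong end boundary, so it does not count.
pred = {(0, 2, "CHEM"), (5, 6, "CHEM"), (9, 10, "CHEM")}

p, r, f = prf1(gold, pred)
print(f"P={p:.3f} R={r:.3f} F1={f:.3f}")  # → P=0.667 R=0.500 F1=0.571
```

Because F1 is a harmonic mean, it stays closer to the weaker of precision and recall, so a model cannot reach a high F1 by excelling at only one of them.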

Semantic Scholar Infrastructure

SciBERT’s training data originates from Semantic Scholar, the AI-powered academic search engine developed by AI2. The platform now indexes over 214 million papers, a substantial expansion from 45 million papers in 2017. This growth supports continued SciBERT fine-tuning and application development.

The S2ORC dataset provides access to 12 million full-text papers, while approximately 60 million papers include AI-generated TLDR summaries. The Ai2 Asta research database contains over 108 million abstracts. Cloud providers such as Amazon offer infrastructure for deploying models trained on these datasets at scale.

FAQs

What is SciBERT used for?

SciBERT processes scientific text for tasks including biomedical named entity recognition, research paper classification, clinical document analysis, and scientific literature mining. Organizations use it to extract information from medical records and research papers.

Is SciBERT free to use?

Yes. AI2 released SciBERT as open-source software under the Apache 2.0 license. You can download pretrained weights from Hugging Face and GitHub at no cost for research or commercial applications.

How does SciBERT differ from BERT?

SciBERT uses a specialized vocabulary that preserves many scientific terms as single tokens instead of fragmenting them. It trains on 1.14 million scientific papers rather than the Wikipedia and BooksCorpus text used for BERT, improving performance on biomedical and technical text.

Who developed SciBERT?

Researchers Iz Beltagy, Kyle Lo, and Arman Cohan at the Allen Institute for AI (AI2) developed SciBERT. They released the model at the EMNLP conference in November 2019.

What accuracy does SciBERT achieve?

SciBERT achieves 87% accuracy on scientific text classification and F1-scores up to 94.30% on chemical named entity recognition. Performance varies by specific task and domain application.