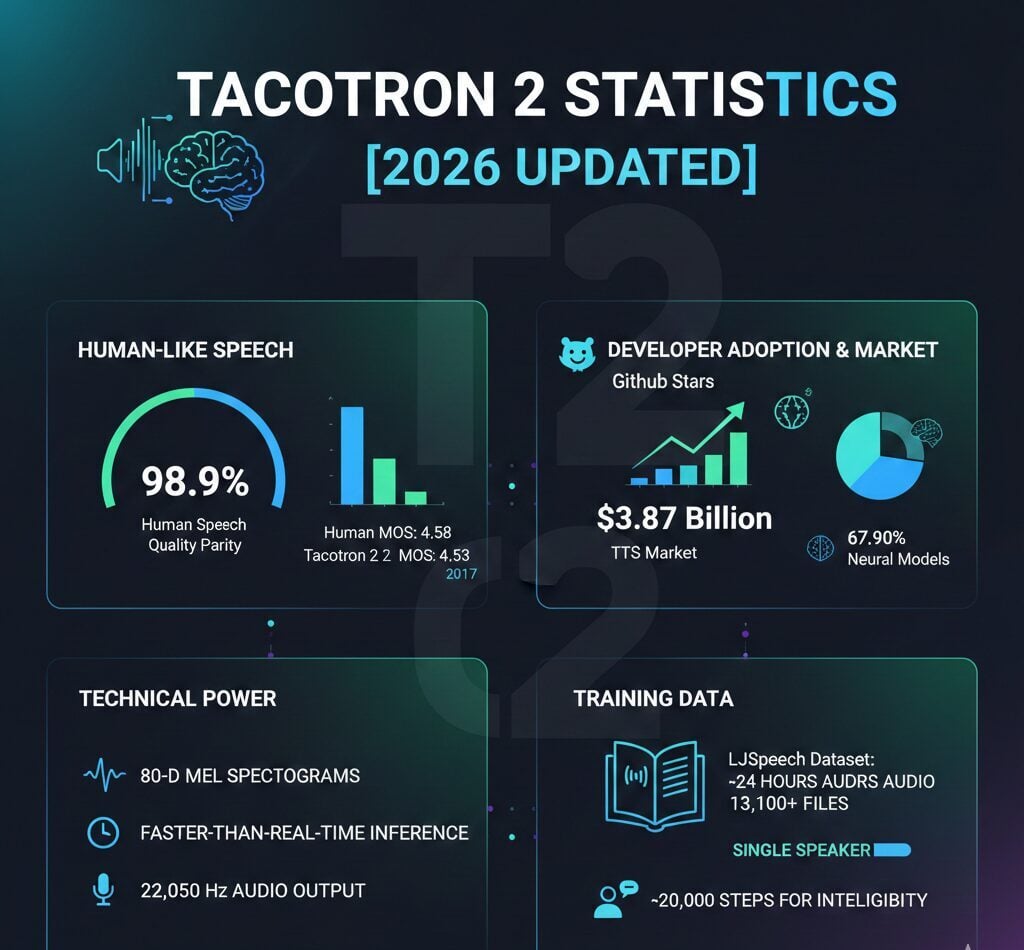

Tacotron 2 achieved a Mean Opinion Score (MOS) of 4.53 in 2017, about 98.9% of the 4.58 scored by human speech, and transformed neural text-to-speech technology. NVIDIA’s PyTorch implementation of Google’s architecture had over 5,300 GitHub stars as of December 2024, reflecting sustained developer adoption in a $3.87-$4.15 billion TTS market where neural models hold a 67.90% share.

Tacotron 2 Key Statistics

- Tacotron 2 recorded a Mean Opinion Score of 4.53 compared to 4.58 for human speech, as reported in the original 2017 arXiv paper.

- The NVIDIA PyTorch implementation reached 5,300+ GitHub stars and 1,400+ forks as of December 2024.

- Neural TTS models account for 67.90% of the global text-to-speech market valued at $3.87-$4.15 billion in 2024.

- Tacotron 2 predicts 80-dimensional mel spectrograms; NVIDIA’s implementation operates on 22,050 Hz audio and achieves faster-than-real-time inference.

- The LJSpeech training dataset contains 24 hours of audio across 13,100 files from a single speaker.

Tacotron 2 Performance Metrics

The Mean Opinion Score rates speech naturalness on a 1-to-5 scale, with higher values indicating more natural speech. Tacotron 2 achieved groundbreaking results in December 2017, scoring within 1.1% of professionally recorded human audio.
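The parity figures are plain ratios of the two scores. A minimal sketch of that arithmetic, using only the 4.53 and 4.58 values quoted in this article; `mos_parity` is a hypothetical helper name:

```python
def mos_parity(model_mos: float, human_mos: float) -> tuple[float, float]:
    """Return (model score as a fraction of the human score, relative gap below it)."""
    ratio = model_mos / human_mos
    gap = (human_mos - model_mos) / human_mos
    return ratio, gap

ratio, gap = mos_parity(4.53, 4.58)
print(f"{ratio:.1%} of the human score, {gap:.1%} below it")
```

Because MOS is a bounded rating scale, the percentage is a convenient framing of the 0.05-point difference rather than a perceptual measure in its own right.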

Google Research published these findings in arXiv paper 1712.05884, presented at IEEE ICASSP 2018. In low-resource settings, the model has been reported to score 4.25 ± 0.17 (95% confidence interval), outperforming Deep Voice 3 and FastSpeech 2 in naturalness evaluations published through 2024.

| Metric | Tacotron 2 Score | Reference / Notes |
|---|---|---|
| Mean Opinion Score (MOS) | 4.53 | 4.58 (human speech) |
| Low-Resource MOS (95% CI) | 4.25 ± 0.17 | Compared against ground-truth recordings |
| Custom Test Set MOS | 4.354 | 100-sentence test set |
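MOS values are usually reported as a mean with a 95% confidence interval, as in the 4.25 ± 0.17 row. A minimal sketch, assuming a normal approximation (1.96 standard errors) and a hypothetical set of listener ratings; the studies behind the table may have computed their intervals differently:

```python
import math
import statistics

def mos_with_ci(ratings: list[float], z: float = 1.96) -> tuple[float, float]:
    """Mean opinion score and CI half-width via a normal approximation (assumption)."""
    mean = statistics.fmean(ratings)
    stderr = statistics.stdev(ratings) / math.sqrt(len(ratings))  # sample std / sqrt(n)
    return mean, z * stderr

# Hypothetical listener ratings on a 1-to-5 scale (not data from any paper)
ratings = [5, 4, 4.5, 5, 4, 3.5, 5, 4.5, 4, 5]
mean, half_width = mos_with_ci(ratings)
print(f"MOS = {mean:.2f} ± {half_width:.2f}")
```

With real listening tests, the interval shrinks as more ratings are collected, since the standard error falls with the square root of the number of ratings.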

Tacotron 2 GitHub Repository Activity

NVIDIA’s official PyTorch implementation serves as the primary reference for developers implementing text-to-speech systems. The repository demonstrates sustained community engagement through December 2024.

The 5,300+ stars position the repository among the top TTS implementations on GitHub. The 1,400+ forks indicate developers adapted the codebase for custom voice synthesis projects across multiple languages and domains, with 8 contributors maintaining 134 total commits.

| GitHub Metric | Current Count |
|---|---|
| Stars | 5,300+ |
| Forks | 1,400+ |
| Watchers | 113 |
| Contributors | 8 |
| Open Issues | 193 |

Tacotron 2 Technical Architecture

The model’s technical specifications explain its breakthrough performance in neural speech synthesis. The architecture processes audio through 80-dimensional mel spectrograms computed at 12.5 millisecond intervals.
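To make the 80-channel, 12.5 ms representation concrete, here is a minimal NumPy sketch of a log-mel spectrogram. The 50 ms Hann window, the 125 Hz to 7.6 kHz filterbank range, the HTK mel formula, and the 1e-5 log floor are assumptions chosen for illustration, not a copy of Google’s or NVIDIA’s preprocessing code:

```python
import numpy as np

def hz_to_mel(f):
    # HTK-style mel formula (an assumption; other mel scales exist)
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels=80, fmin=125.0, fmax=7600.0):
    """Triangular filters mapping the n_fft // 2 + 1 linear STFT bins to n_mels bands."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_spectrogram(audio, sr=24000, n_mels=80, win_ms=50.0, hop_ms=12.5):
    """Return an (n_frames, n_mels) log-mel spectrogram."""
    win_length = int(round(sr * win_ms / 1000.0))  # 1200 samples at 24 kHz
    hop_length = int(round(sr * hop_ms / 1000.0))  # 300 samples at 24 kHz
    n_fft = 1 << (win_length - 1).bit_length()     # next power of two (2048 here)
    window = np.hanning(win_length)
    n_frames = 1 + (len(audio) - win_length) // hop_length
    frames = np.stack([
        audio[i * hop_length : i * hop_length + win_length] * window
        for i in range(n_frames)
    ])
    magnitude = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))  # linear-frequency STFT magnitude
    mel = magnitude @ mel_filterbank(sr, n_fft, n_mels).T      # project onto mel bands
    return np.log(np.clip(mel, 1e-5, None))                    # log compression with a floor

# Usage: one second of a 440 Hz tone at 24 kHz
sr = 24000
t = np.arange(sr) / sr
S = log_mel_spectrogram(np.sin(2 * np.pi * 440.0 * t), sr=sr)
print(S.shape)  # (77, 80)
```

At 24 kHz a 12.5 ms hop is exactly 300 samples, or 80 frames per second. At 22,050 Hz the same hop is about 275.6 samples, so an implementation at that rate has to round to an integer hop size.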

The encoder passes input character embeddings through three convolutional layers, each with 512 filters of shape 5×1. The post-net is a stack of five convolutional layers, also with 512 filters of shape 5×1. The WaveNet vocoder in the original paper generates 24 kHz audio, while NVIDIA’s implementation, trained on LJSpeech, works at 22,050 Hz.

| Technical Parameter | Specification |
|---|---|
| Mel Spectrogram Dimensions | 80-dimensional |
| Frame Hop | 12.5 milliseconds |
| Sample Rate (NVIDIA Implementation) | 22,050 Hz |
| WaveNet Vocoder Output (Original Paper) | 24 kHz |
| Encoder Convolutional Layers | 3 layers (512 filters, 5×1) |
| Post-net Convolutional Layers | 5 layers (512 filters, 5×1) |
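The encoder’s convolutional stage can be sketched in NumPy as three "same"-padded 1-D convolutions over time, each with 512 output channels and a kernel width of 5 (reading the 5×1 filter shape as 5 time steps by 1). This is a shape-level sketch under assumptions: the weights are random, the 512-dimensional input embedding is assumed, batch normalization and dropout are omitted, and `conv1d_same` and `encoder_conv_stack` are hypothetical names:

```python
import numpy as np

def conv1d_same(x, weight, bias):
    """1-D convolution (cross-correlation) over time with 'same' padding.
    x: (T, C_in); weight: (K, C_in, C_out); bias: (C_out,)."""
    k = weight.shape[0]
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))  # zero-pad the time axis only
    T = x.shape[0]
    # out[t] = sum_j xp[t + j] @ weight[j]
    return sum(xp[j:j + T] @ weight[j] for j in range(k)) + bias

def encoder_conv_stack(embedded, n_layers=3, channels=512, kernel=5, seed=0):
    """Stack of conv + ReLU layers with random weights (batch norm omitted)."""
    rng = np.random.default_rng(seed)
    h = embedded
    for _ in range(n_layers):
        w = rng.normal(0.0, 0.02, size=(kernel, h.shape[1], channels))
        h = np.maximum(conv1d_same(h, w, np.zeros(channels)), 0.0)  # ReLU
    return h

# Hypothetical input: 40 characters, each embedded in 512 dimensions
chars = np.random.default_rng(1).normal(size=(40, 512))
out = encoder_conv_stack(chars)
print(out.shape)  # (40, 512)
```

Because "same" padding keeps the sequence length, each layer preserves one output per character while mixing a 5-character window; stacking three such layers widens the receptive field to 13 characters.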

NVIDIA’s implementation achieves faster-than-real-time inference, enabling practical deployment in production environments. The 80-dimensional mel spectrogram approach proved more efficient than traditional linguistic features while maintaining superior audio quality.
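Faster-than-real-time is usually expressed as a real-time factor (RTF): synthesis time divided by the duration of the audio produced, with values below 1 meaning faster than real time. A minimal sketch; `synthesize` stands in for any hypothetical text-to-waveform callable, and the 0.8 s / 6.5 s timing is made up for illustration, not a measured NVIDIA result:

```python
import time

def real_time_factor(synthesis_seconds: float, audio_seconds: float) -> float:
    """RTF = compute time / audio duration; RTF < 1 means faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return synthesis_seconds / audio_seconds

def measure_rtf(synthesize, text: str, sample_rate: int) -> float:
    """Time a hypothetical synthesize(text) -> samples callable and return its RTF."""
    start = time.perf_counter()
    audio = synthesize(text)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, len(audio) / sample_rate)

# Hypothetical timing: 0.8 s of compute for a 6.5 s utterance
rtf = real_time_factor(0.8, 6.5)
print(f"RTF = {rtf:.3f}, faster than real time: {rtf < 1}")
```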

Text-to-Speech Market Analysis

The global TTS market reached $3.87-$4.15 billion in 2024, according to reports from Mordor Intelligence and Expert Market Research. Neural models pioneered by architectures such as Tacotron 2 dominate current deployments.

Cloud TTS solutions account for 63.80% of implementations, with software components representing 76.30% of market activity. North America holds a 37.20% market share, and the sector is projected to grow at a CAGR of 12.89%-15.7% through 2030.
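CAGR figures compound annually, so a projection is value × (1 + rate)^years. The sketch below applies that formula to the quoted bounds purely as arithmetic; the resulting 2030 numbers are illustrative and are not figures from Mordor Intelligence or Expert Market Research, whose base years and definitions may differ. `project_value` and `implied_cagr` are hypothetical helper names:

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound growth: base * (1 + cagr) ** years."""
    return base * (1.0 + cagr) ** years

def implied_cagr(start: float, end: float, years: int) -> float:
    """Invert the compound-growth formula to recover the annual rate."""
    return (end / start) ** (1.0 / years) - 1.0

# Illustrative only: quoted 2024 bounds (USD billions) grown for six years (2024 -> 2030)
low_2030 = project_value(3.87, 0.1289, 6)
high_2030 = project_value(4.15, 0.157, 6)
print(f"${low_2030:.2f}B to ${high_2030:.2f}B")
```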

Customer service IVR systems represent 31.30% of TTS applications. The automotive and transportation sectors are projected to grow at a 14.80% CAGR through 2030, driven by voice assistant integration and navigation systems.

Tacotron 2 Training Data Requirements

The LJSpeech dataset provides standardized training data for Tacotron 2 implementations. The corpus contains about 24 hours of audio from a single female speaker, stored as 13,100 single-channel 16-bit PCM WAV clips sampled at 22,050 Hz.
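LJSpeech ships its transcripts in a `metadata.csv` file with one clip per line and three pipe-separated fields: clip ID, raw transcription, and normalized transcription. A minimal sketch for parsing those lines and counting the character inventory; the sample lines are hypothetical, and the exact vocabulary size (such as the 29 characters listed in this section’s table) depends on the normalization and symbol set an implementation chooses:

```python
from collections import Counter

def parse_ljspeech_metadata(lines):
    """Parse lines of the form ID|raw transcription|normalized transcription."""
    records = []
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        clip_id, raw, normalized = line.split("|", 2)
        records.append((clip_id, raw, normalized))
    return records

def character_vocabulary(records, lowercase=True):
    """Count characters in the normalized transcriptions."""
    counts = Counter()
    for _, _, normalized in records:
        counts.update(normalized.lower() if lowercase else normalized)
    return counts

# Hypothetical sample lines in the metadata.csv layout (not copied from the corpus)
sample = [
    "LJ999-0001|Dr. Smith arrived at 10 a.m.|Doctor Smith arrived at ten a.m.\n",
    "LJ999-0002|It was quiet.|It was quiet.\n",
]
records = parse_ljspeech_metadata(sample)
vocab = character_vocabulary(records)
print(len(records), sorted(vocab))
```

Splitting on `|` directly, rather than using the `csv` module with default settings, avoids quote characters inside transcripts being treated as field quoting. To run it on the corpus, assuming it is extracted to `LJSpeech-1.1/`, pass an open handle to `LJSpeech-1.1/metadata.csv` (UTF-8) to `parse_ljspeech_metadata`.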

When training from scratch, speech reportedly becomes intelligible after about 20,000 training steps. Transfer learning, reported to be used by 90% of developers, reduces the requirement to under 3 hours of speaker-specific data in low-resource environments.

| Dataset Parameter | Value |
|---|---|
| Total Audio Duration | ~24 hours |
| Number of Audio Files | 13,100 files |
| Speaker Configuration | Single female speaker |
| Vocabulary Size | 29 characters |
| Training Steps for Intelligibility | ~20,000 steps |

Tacotron 2 vs Competing Models

Comparative analysis reveals performance trade-offs between naturalness and inference speed across neural TTS architectures. Tacotron 2 maintains superior Mean Opinion Scores despite slower autoregressive generation.

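FastSpeech achieves 270× faster mel-spectrogram generation through parallel (non-autoregressive) processing but records lower MOS scores, around 4.0. Deep Voice 3 offers roughly 10× faster training through a fully convolutional architecture while scoring below Tacotron 2’s 4.53 benchmark. Each of these papers ran its own listening test with different raters, data, and vocoders, so the MOS values indicate a rough ordering rather than directly comparable numbers.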
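The speed gap comes from how frames are produced. The toy sketch below contrasts a sequential loop, where each frame needs the previous one (as in Tacotron 2’s autoregressive decoder), with a FastSpeech-style length regulator that expands per-token states by their durations so all frames can be computed at once. The `step` function, the states, and the hard-coded durations are hypothetical stand-ins; FastSpeech itself predicts durations with a learned duration predictor:

```python
import numpy as np

def autoregressive_decode(step, first_frame, n_frames):
    """Each frame depends on the previous one, so the loop is inherently sequential."""
    frames = [first_frame]
    for _ in range(n_frames - 1):
        frames.append(step(frames[-1]))
    return np.stack(frames)

def length_regulate(encoder_states, durations):
    """Repeat each token's state by its duration (in frames); the expanded
    sequence can then be decoded for all frames in parallel."""
    return np.repeat(encoder_states, durations, axis=0)

# Toy inputs (hypothetical): 3 tokens with 4-dim states, lasting 2, 3 and 1 frames
states = np.arange(12, dtype=float).reshape(3, 4)
expanded = length_regulate(states, [2, 3, 1])
sequential = autoregressive_decode(lambda prev: 0.9 * prev, np.ones(4), n_frames=6)
print(expanded.shape, sequential.shape)  # (6, 4) (6, 4)
```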

Production systems implement hybrid approaches to balance audio quality with inference speed requirements. The trade-off between naturalness and processing efficiency continues driving architecture innovation in neural TTS development.

FAQ

What Mean Opinion Score did Tacotron 2 achieve?

Tacotron 2 achieved a Mean Opinion Score of 4.53, compared to 4.58 for human speech, or about 98.9% of the human score, according to the original 2017 arXiv paper from Google Research.

How many GitHub stars does the Tacotron 2 repository have?

The NVIDIA PyTorch implementation of Tacotron 2 has over 5,300 stars and 1,400+ forks as of December 2024, making it one of the most popular TTS repositories.

What is the current size of the text-to-speech market?

The global TTS market reached $3.87-$4.15 billion in 2024, with neural models accounting for 67.90% market share. The sector is projected to grow at a CAGR of 12.89%-15.7% through 2030.

What sample rate does Tacotron 2 use?

NVIDIA’s implementation, trained on LJSpeech, works with 22,050 Hz audio, while the WaveNet vocoder in the original paper generates 24 kHz audio. The model predicts 80-dimensional mel spectrograms computed at 12.5 millisecond intervals.

How much training data does Tacotron 2 require?

The LJSpeech training dataset contains 24 hours of audio across 13,100 files. Speech becomes intelligible around 20,000 training steps, though transfer learning reduces requirements to under 3 hours of speaker data.