Key Stats

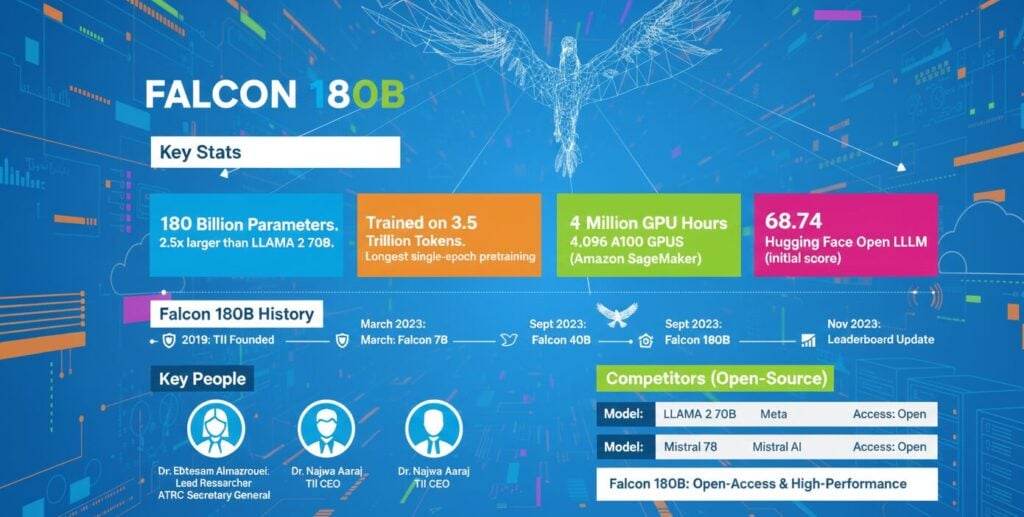

Falcon 180B represents a substantial advancement in open-access artificial intelligence development by the Technology Innovation Institute (TII) in Abu Dhabi. Released in 2023, the model has 180 billion parameters and was trained on 3.5 trillion tokens, positioning it among the most capable openly available language models.

The model’s performance typically falls between GPT-3.5 and GPT-4 across various evaluation benchmarks. This makes it competitive with commercial alternatives while remaining openly available for both research and commercial purposes.

TII released Falcon 180B under a modified Apache 2.0 license, allowing developers worldwide to deploy, modify, and build applications using the model. The release strengthened the UAE’s position in the global artificial intelligence landscape.

Falcon 180B History

Technology Innovation Institute founded in Abu Dhabi as the applied research pillar of the Advanced Technology Research Council.

TII trained Falcon 7B with 1,500 billion tokens on 384 A100 40GB GPUs. Training completed in approximately two weeks using the RefinedWeb dataset.

Falcon 40B released and quickly ascended to the top of the Hugging Face Leaderboard for open-source large language models. The model was trained on 1 trillion tokens.

TII announced Falcon 180B, the largest openly available language model at the time. The model achieved state-of-the-art results across natural language processing benchmarks.

Hugging Face Open LLM Leaderboard updated methodology with two additional benchmarks, resulting in a recalculated score of 67.85 for Falcon 180B.

TII continued development with newer Falcon model iterations, including Falcon Mamba 7B and Falcon 2 series, building upon the 180B foundation.

Technology Innovation Institute Leadership

Executive Director and Chief AI Researcher who led the development of Falcon 7B, 40B, and 180B models. She established the AI Cross-Center Unit at TII and directed strategic planning for large language model development.

Second CEO of Technology Innovation Institute, overseeing the institute’s applied research programs and advancing the organization’s mission in cutting-edge technology development across multiple research centers.

Secretary General of the Advanced Technology Research Council, emphasizing democratization of artificial intelligence access and commitment to open-source community collaboration in technology advancement.

Technology Innovation Institute Acquisitions

Technology Innovation Institute operates as a government-funded applied research center under Abu Dhabi’s Advanced Technology Research Council, pursuing strategic partnerships rather than traditional corporate acquisitions. The institute focuses on collaborative research agreements with leading technology companies and academic institutions worldwide.

TII established partnerships with Amazon Web Services for cloud computing infrastructure, providing access to thousands of A100 GPUs for training large language models. This collaboration enabled the development of Falcon 180B through Amazon SageMaker’s distributed training capabilities. The partnership demonstrates how government research institutions leverage commercial cloud infrastructure for advanced artificial intelligence development.

The institute forged relationships with Hugging Face for model distribution and community engagement, making Falcon models accessible to millions of developers globally. This partnership positioned Falcon 180B on the Hugging Face Model Hub alongside other leading open-source language models. Research collaborations with international universities and technology organizations extend TII’s capabilities beyond internal resources. These partnerships focus on advancing quantum computing, cryptography, autonomous systems, and artificial intelligence research rather than pursuing equity acquisitions or commercial mergers typical of private technology companies.

Technology Innovation Institute Market Cap

Technology Innovation Institute operates as a non-profit government research entity rather than a publicly traded corporation, making traditional market capitalization metrics inapplicable. The institute receives funding from Abu Dhabi’s Advanced Technology Research Council as part of the emirate’s strategic investment in advanced technology research and development.

The UAE government’s commitment to artificial intelligence research positions TII as a strategic national asset rather than a profit-driven enterprise. This funding model enables long-term research investments without quarterly earnings pressures that constrain publicly traded technology companies. Government support allows TII to pursue ambitious projects like Falcon 180B that require substantial computational resources and multi-year development timelines.

Falcon 180B Revenue Model

Falcon 180B operates under an open-access model rather than a traditional revenue-generating product structure. TII released the model under the Falcon 180B TII License, based on Apache 2.0, allowing researchers and commercial users to deploy the technology without royalty payments for most use cases.

The development costs for Falcon 180B were substantial, with training consuming approximately 7 million GPU hours on Amazon SageMaker infrastructure. Organizations seeking to deploy similar models face monthly operational costs exceeding $20,000 for continuous cloud infrastructure, creating opportunities for TII to provide consulting and implementation services.
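As a rough illustration of what the monthly figure implies, the arithmetic below converts a monthly bill into an hourly rate for an always-on deployment. The 730 hours-per-month convention and the function name are assumptions made for this sketch; the $20,000 value is the threshold quoted above, not a measured price.

```python
# Average hours in a month, assuming 8,760 hours per year / 12 (a convention, not a billing rule).
HOURS_PER_MONTH = 8760 / 12  # 730.0

def implied_hourly_rate(monthly_cost_usd: float) -> float:
    """Hourly rate that produces the given monthly bill for an always-on deployment."""
    return monthly_cost_usd / HOURS_PER_MONTH

# Threshold quoted in the text; real pricing varies by provider and region.
monthly_cost = 20_000
hourly = implied_hourly_rate(monthly_cost)

print(f"${monthly_cost:,} per month is about ${hourly:.2f} per hour of continuous use")
```

Any instance configuration billed above this hourly rate would exceed the quoted monthly figure when run continuously.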

The open-access strategy positions TII as a key contributor to the global artificial intelligence ecosystem rather than pursuing direct monetization. This approach aligns with the UAE’s National AI Strategy 2031, focusing on knowledge economy development and international collaboration in advanced technology research. Amazon Web Services provided the cloud infrastructure for training, demonstrating the commercial partnerships supporting the project.

Falcon 180B Competitors

| Model | Parameters | Developer | Access Type | Key Strength |
|---|---|---|---|---|
| LLaMA 2 70B | 70 billion | Meta Platforms | Open-source | Balanced performance and efficiency |
| GPT-3.5 | 175 billion | OpenAI | API access | Conversational capabilities |
| GPT-4 | Undisclosed | OpenAI | API access | Advanced reasoning and multimodal |
| PaLM 2-Large | 340 billion | Google | Proprietary | Multilingual understanding |
| Claude 2 | Undisclosed | Anthropic | API access | Long context windows |
| Mistral 7B | 7.3 billion | Mistral AI | Open-source | Efficiency in smaller form |
| BLOOM | 176 billion | BigScience | Open-source | Multilingual capabilities |
| Falcon 40B | 40 billion | TII | Open-source | Predecessor with strong performance |
| MPT-30B | 30 billion | MosaicML | Open-source | Commercial deployment focus |
| Yi-34B | 34 billion | 01.AI | Open-source | Bilingual Chinese-English |

Falcon 180B competes in the large language model market by offering open-access availability combined with performance approaching proprietary systems. The model outperforms LLaMA 2 70B and GPT-3.5 on multiple benchmarks while achieving parity with PaLM 2-Large on specific tasks.

Hardware manufacturers like NVIDIA play a critical role in the competitive landscape, as their A100 GPUs powered the training infrastructure for Falcon 180B. The model requires substantial computational resources for deployment, with inference needing approximately 640 GB of memory at FP16 precision or 320 GB with int4 quantization.
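To make these memory totals concrete, the sketch below converts them into a GPU count. It takes the 640 GB and 320 GB figures from the text as given and assumes A100 cards with 40 GB or 80 GB of memory each; the function name is illustrative, and real deployments may need extra headroom beyond this simple division.

```python
import math

# Inference memory totals quoted in the text (in GB).
INFERENCE_MEMORY_GB = {"fp16": 640, "int4": 320}

def gpus_needed(total_memory_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of identical GPUs whose combined memory covers the total."""
    return math.ceil(total_memory_gb / gpu_memory_gb)

# A100 cards are sold in 40 GB and 80 GB variants.
fp16_on_80gb = gpus_needed(INFERENCE_MEMORY_GB["fp16"], 80)
int4_on_40gb = gpus_needed(INFERENCE_MEMORY_GB["int4"], 40)
int4_on_80gb = gpus_needed(INFERENCE_MEMORY_GB["int4"], 80)

print(f"FP16 on 80 GB cards: {fp16_on_80gb} GPUs")
print(f"int4 on 40 GB cards: {int4_on_40gb} GPUs")
print(f"int4 on 80 GB cards: {int4_on_80gb} GPUs")
```

Under these assumptions, both the FP16 and the int4 configurations fit on a single eight-GPU node, with int4 allowing the smaller 40 GB cards.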

The competitive advantage lies in the open-access licensing model, allowing organizations to deploy the technology on private infrastructure. This contrasts with API-based competitors where data passes through third-party servers. Research institutions and enterprises seeking data sovereignty benefit from this approach, though operational complexity remains higher than managed API services.

Falcon 180B Hardware Requirements

Running Falcon 180B requires substantial memory resources that create significant deployment barriers for most organizations. The model’s size necessitates specialized hardware configurations that exceed typical enterprise computing capabilities.

Evaluation by Hugging Face scientist Clémentine Fourrier found no measurable difference in inference quality between 4-bit quantization and the bfloat16 reference. Moving from 16-bit to 4-bit weights cuts weight storage by 75% without observed performance degradation, making deployment more accessible for organizations with limited GPU resources.
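The 75% figure follows directly from the bit widths. The sketch below estimates raw weight storage only, assuming 180 billion parameters and 1 GB = 10^9 bytes; it deliberately ignores activations, the KV cache, and quantization metadata, so it is a lower bound rather than a deployment requirement.

```python
PARAMS = 180e9  # parameter count stated in the text

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Raw weight storage in GB (1 GB = 1e9 bytes); excludes runtime overhead."""
    return num_params * bits_per_param / 8 / 1e9

bf16_gb = weight_memory_gb(PARAMS, 16)
int4_gb = weight_memory_gb(PARAMS, 4)
reduction = 1 - int4_gb / bf16_gb

print(f"bfloat16 weights: {bf16_gb:.0f} GB")
print(f"4-bit weights:    {int4_gb:.0f} GB")
print(f"reduction:        {reduction:.0%}")
```

Deployment totals are larger than these raw weight sizes, presumably because they include runtime overhead and are sized to whole GPU configurations.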

The hardware requirements position Falcon 180B as an enterprise-grade solution rather than a consumer-accessible model. Organizations must balance the benefits of open-access deployment against the substantial infrastructure investments required for production use.

Falcon 180B Training Infrastructure

Training Falcon 180B consumed approximately 7 million GPU hours, utilizing up to 4,096 A100 40GB GPUs operating simultaneously during large-scale pretraining phases. The training process leveraged Amazon SageMaker infrastructure with 3D parallelism strategies combining tensor parallelism, pipeline parallelism, and data parallelism with ZeRO optimization.
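As an illustration of how 3D parallelism divides a cluster, the sketch below maps a flat GPU rank to a (data, pipeline, tensor) coordinate. The degrees used here (tensor 8, pipeline 8, data 64) are an assumption chosen because they multiply to 4,096; the rank ordering and all names are hypothetical and do not describe TII's actual codebase.

```python
from typing import NamedTuple

class Coord(NamedTuple):
    data: int       # which model replica this GPU belongs to
    pipeline: int   # which pipeline stage (group of layers) it holds
    tensor: int     # which shard of each layer's weight matrices it holds

def rank_to_coord(rank: int, tp: int, pp: int, dp: int) -> Coord:
    """Map a flat rank to 3D coordinates, with tensor-parallel ranks adjacent."""
    if not 0 <= rank < tp * pp * dp:
        raise ValueError("rank out of range")
    tensor = rank % tp
    pipeline = (rank // tp) % pp
    data = rank // (tp * pp)
    return Coord(data, pipeline, tensor)

# Assumed degrees for illustration only.
TP, PP, DP = 8, 8, 64
WORLD_SIZE = TP * PP * DP

print(WORLD_SIZE)
print(rank_to_coord(0, TP, PP, DP))
print(rank_to_coord(9, TP, PP, DP))
print(rank_to_coord(WORLD_SIZE - 1, TP, PP, DP))
```

Keeping tensor-parallel ranks adjacent is a common design choice because tensor parallelism communicates most frequently and benefits from the fast links inside a single node.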

The computational requirements translate to approximately 800 years of continuous single-GPU computation, illustrating the massive parallel computing infrastructure necessary for modern large language models. Training at this scale used roughly four times the compute of LLaMA 2’s training process.
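The single-GPU figure can be checked with simple arithmetic. The sketch below uses the 7 million GPU hours and the 4,096-GPU peak from the text; the wall-clock estimate assumes every GPU ran for the whole job, which the "up to" wording suggests was not the case, so it should be read as a lower bound.

```python
GPU_HOURS = 7_000_000      # total training budget quoted in the text
MAX_GPUS = 4096            # peak number of GPUs used simultaneously
HOURS_PER_YEAR = 24 * 365  # 8,760, ignoring leap years

# Time a single GPU would need to do the same amount of work.
single_gpu_years = GPU_HOURS / HOURS_PER_YEAR

# Lower bound on wall-clock time, assuming all GPUs ran for the full duration.
min_wall_clock_days = GPU_HOURS / MAX_GPUS / 24

print(f"single-GPU equivalent: {single_gpu_years:.0f} years")
print(f"minimum wall-clock time at peak scale: {min_wall_clock_days:.0f} days")
```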

TII developed a custom distributed training codebase called Gigatron that enabled efficient scaling across thousands of GPUs. The system utilized custom Triton kernels for optimization and incorporated FlashAttention mechanisms to improve training efficiency.

Falcon 180B Benchmark Performance

Falcon 180B achieved a score of 68.74 on the Hugging Face Open LLM Leaderboard at release, the highest score among open-access models at that time. The leaderboard added two benchmarks in November 2023, resulting in a recalculated score of 67.85.

Performance comparisons showed the model performing on par with PaLM 2-Large across multiple evaluation benchmarks. The model demonstrated superior performance to GPT-3.5 on MMLU while achieving competitive results on tasks including HellaSwag, LAMBADA, WebQuestions, and Winogrande.

The updated methodology placed Falcon 180B on par with LLaMA 2 70B according to revised evaluation criteria. This positioning demonstrates the model’s competitiveness despite utilizing different architectural approaches and training methodologies compared to Meta’s flagship open-source offering.

FAQs

What makes Falcon 180B different from other language models?

Falcon 180B offers 180 billion parameters with open-access availability, trained on 3.5 trillion tokens. It outperforms GPT-3.5 and LLaMA 2 70B on multiple benchmarks while maintaining Apache-based licensing.

How much does it cost to run Falcon 180B?

Running Falcon 180B requires 640 GB memory for FP16 or 320 GB with int4 quantization, typically needing eight A100 GPUs. Continuous cloud deployment costs exceed $20,000 monthly.

Can I use Falcon 180B for commercial applications?

Yes, Falcon 180B is available under the Falcon 180B TII License based on Apache 2.0, allowing commercial use with certain restrictions. Additional permissions are required for hosted services.

Who developed Falcon 180B and when was it released?

Technology Innovation Institute in Abu Dhabi developed Falcon 180B under Dr. Ebtesam Almazrouei’s leadership. The model was released on September 6, 2023, following earlier Falcon versions.

How does Falcon 180B compare to GPT-4?

Falcon 180B typically performs between GPT-3.5 and GPT-4 depending on specific benchmarks. It matches PaLM 2-Large performance while being half that model’s size, demonstrating efficient training.