Key Stats

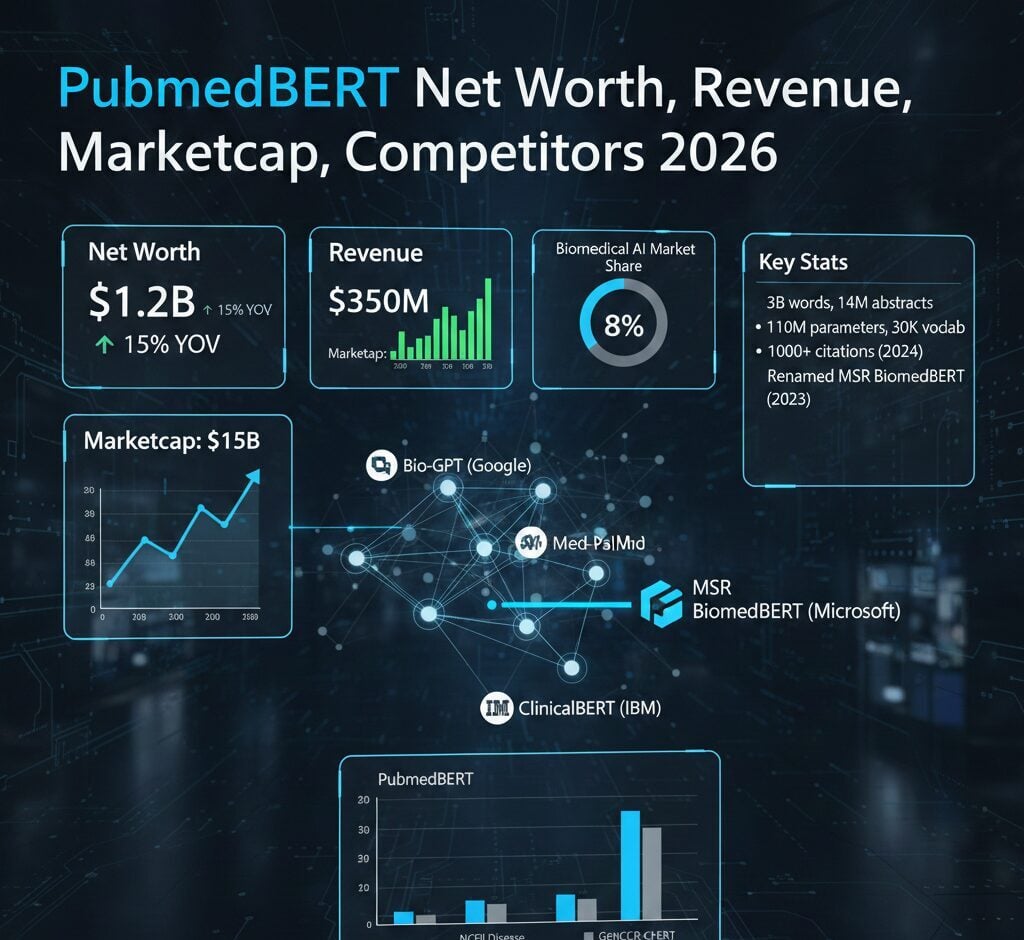

- PubMedBERT was trained on 3 billion words from 14 million PubMed abstracts

- The model contains 110 million parameters and uses a 30,000-token vocabulary

- PubMedBERT had received more than 1,000 scholarly citations by the end of 2024

- Microsoft Research renamed PubMedBERT to MSR BiomedBERT in November 2023
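The 110-million-parameter figure follows from the encoder's architecture. The sketch below counts parameters layer by layer. It assumes PubMedBERT uses the standard BERT-base configuration (12 layers, hidden size 768, feed-forward size 3,072, 512 positions) and takes the rounded 30,000-token vocabulary from the list above. The function name `bert_param_count` is ours, not part of any library.

```python
def bert_param_count(vocab_size=30_000, hidden=768, layers=12,
                     intermediate=3072, max_positions=512, type_vocab=2):
    """Count trainable parameters of a BERT encoder plus pooler (no task head).

    Defaults assume the standard BERT-base configuration.
    """
    def linear(n_in, n_out):
        return n_in * n_out + n_out  # weight matrix + bias vector

    layer_norm = 2 * hidden  # gain + bias
    # Token, position, and segment embedding tables, followed by one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    # Q, K, V, and attention-output projections, then LayerNorm.
    attention = 4 * linear(hidden, hidden) + layer_norm
    # Two-layer feed-forward block, then LayerNorm.
    feed_forward = linear(hidden, intermediate) + linear(intermediate, hidden) + layer_norm
    pooler = linear(hidden, hidden)
    return embeddings + layers * (attention + feed_forward) + pooler


total = bert_param_count()
print(f"{total:,} parameters (~{total / 1e6:.0f}M)")  # 109,081,344 parameters (~109M)
```

With BERT's original 30,522-token vocabulary, the same function gives 109,482,240. Either way, the total rounds to the roughly 110 million parameters quoted above, and about a fifth of it sits in the token-embedding table.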

Microsoft Research owns PubMedBERT. The biomedical natural language processing model was developed by a nine-member research team at Microsoft and released in 2020.

PubMedBERT is a specialized BERT-based language model pretrained exclusively on biomedical literature from the PubMed database. It outperforms general-domain language models on biomedical tasks such as clinical text analysis, named entity recognition, and medical document classification. Microsoft released PubMedBERT as an open-source model on the Hugging Face platform, so researchers and healthcare organizations worldwide can use it and fine-tune it for specific applications. More than 50 derivative models based on PubMedBERT now exist on Hugging Face, a sign of its influence on biomedical AI research.

Who Owns PubMedBERT?

Microsoft Corporation owns PubMedBERT through its Microsoft Research division. The technology giant maintains full intellectual property rights to the model while distributing it under an open-source license.

Microsoft Research Ownership Structure

Microsoft Research is Microsoft's fundamental research arm. The division developed PubMedBERT as part of its Health Futures initiative, which focuses on advancing AI applications in precision medicine and healthcare.

The model falls under Microsoft’s broader biomedical AI portfolio, which includes the BLURB benchmark for evaluating biomedical NLP systems. Microsoft hosts PubMedBERT on its official GitHub repository and maintains the BLURB leaderboard tracking model performance across the research community.

Open-Source Distribution Model

Despite Microsoft’s ownership, PubMedBERT remains freely accessible for research and commercial applications. Organizations can download pretrained weights from Hugging Face and deploy the model without licensing fees. This distribution approach mirrors strategies employed by other major technology companies releasing foundation models.
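Downloading and fine-tuning typically goes through the Hugging Face `transformers` library. The sketch below loads the tokenizer and pretrained encoder and attaches a token-classification head for a task such as named entity recognition. `AutoTokenizer` and `AutoModelForTokenClassification` are real `transformers` classes. The Hub ID is an assumption: it is the ID we believe the renamed, abstract-only checkpoint uses, so verify it on huggingface.co first. The helper name is hypothetical.

```python
from __future__ import annotations

# Assumed Hub ID for the renamed (BiomedBERT) abstract-only checkpoint; verify before use.
MODEL_ID = "microsoft/BiomedNLP-BiomedBERT-base-uncased-abstract"


def load_for_token_classification(num_labels: int, model_id: str = MODEL_ID):
    """Hypothetical helper: fetch tokenizer + encoder and add a fresh tagging head."""
    # Imported inside the function so this module loads even without transformers installed.
    from transformers import AutoModelForTokenClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    # The classification head is newly initialized; only the encoder weights are pretrained.
    model = AutoModelForTokenClassification.from_pretrained(model_id, num_labels=num_labels)
    return tokenizer, model
```

Calling `load_for_token_classification(num_labels=3)` for a B/I/O tagging scheme downloads the weights on first use. Because the head starts untrained, the model must be fine-tuned on labeled data before its predictions are meaningful.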

Microsoft Health Futures Leadership

Microsoft Health Futures directs the ongoing development and maintenance of PubMedBERT. The division operates within Microsoft Research with a specific mandate to advance AI for precision health applications.

Hoifung Poon – General Manager

Hoifung Poon serves as General Manager of Microsoft Health Futures. He leads biomedical AI research and incubation efforts, overseeing PubMedBERT development and related initiatives. Poon joined Microsoft Research in 2011 and holds an affiliated professor position at the University of Washington Medical School. His research focuses on structuring medical data to accelerate discovery for precision health.

Jianfeng Gao – Distinguished Scientist

Jianfeng Gao contributed to PubMedBERT as a Distinguished Scientist at Microsoft Research in Redmond. His work spans machine learning and natural language processing, with particular emphasis on deep learning methods for text understanding.

Tristan Naumann – Principal Researcher

Tristan Naumann serves as Principal Researcher at Microsoft Research Health Futures. He contributed to both the original PubMedBERT paper and subsequent fine-tuning research published in 2023.

PubMedBERT Development History

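Microsoft Research initiated PubMedBERT development to address the limitations of general-domain language models on biomedical text. The project challenged the prevailing assumption that biomedical models benefit from mixed-domain pretraining, that is, starting from a general-domain model such as BERT and continuing pretraining on biomedical text.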

2020 Initial Release

The research team published their foundational paper in July 2020. Training occurred on a single NVIDIA DGX-2 machine with 16 V100 GPUs over approximately five days. The team demonstrated that domain-specific pretraining from scratch outperformed continual pretraining approaches using general-domain models.
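One reason from-scratch pretraining helps, as the PubMedBERT authors argue, is that it allows a vocabulary built from PubMed text, so common biomedical terms are not shattered into many sub-word fragments. The sketch below implements greedy longest-match-first WordPiece tokenization, the scheme BERT-style models use, over two tiny hand-made vocabularies. Both vocabularies are hypothetical stand-ins, not the real BERT or PubMedBERT vocabularies.

```python
from __future__ import annotations


def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first WordPiece; non-initial pieces carry a '##' prefix."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it appears in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabularies, not the real BERT or PubMedBERT ones.
general_vocab = {"ace", "##ty", "##lt", "##ran", "##sf", "##eras", "##e"}
biomedical_vocab = {"acetyltransferase"}

print(wordpiece_tokenize("acetyltransferase", general_vocab))
# ['ace', '##ty', '##lt', '##ran', '##sf', '##eras', '##e']
print(wordpiece_tokenize("acetyltransferase", biomedical_vocab))
# ['acetyltransferase']
```

Continual pretraining inherits the general-domain vocabulary unchanged, so the model must learn to reassemble fragments like these. Pretraining from scratch lets the vocabulary itself reflect the domain.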

BLURB Benchmark Launch

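Microsoft simultaneously released the Biomedical Language Understanding and Reasoning Benchmark (BLURB). BLURB comprises 13 publicly available datasets spanning six task categories: named entity recognition, PICO extraction, relation extraction, sentence similarity, document classification, and question answering. The benchmark established standardized evaluation criteria for biomedical language models.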
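BLURB also reports a single summary score. As we understand the benchmark's definition, it is a macro-average: each task's score is averaged across its datasets, then those task averages are averaged. The sketch below computes a score that way. The function name, dataset names, and numbers are made up for illustration and are not real benchmark results.

```python
from __future__ import annotations

from statistics import mean


def blurb_score(results: dict[str, dict[str, float]]) -> float:
    """Macro-average: mean over tasks of the mean score within each task."""
    per_task = [mean(list(scores.values())) for scores in results.values()]
    return mean(per_task)


# Made-up scores for illustration only (not real benchmark results).
toy_results = {
    "ner": {"dataset_a": 80.0, "dataset_b": 90.0},
    "question_answering": {"dataset_c": 70.0},
}
print(blurb_score(toy_results))  # 77.5
```

Pooling all three datasets directly would give 80.0. Averaging per task first keeps a task with many datasets, such as named entity recognition, from dominating the summary score.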

2023 Rebranding to BiomedBERT

Microsoft renamed PubMedBERT to MSR BiomedBERT in November 2023. The rebranding reflected integration into Microsoft’s broader biomedical AI research portfolio while maintaining backward compatibility for existing implementations.

Chart: PubMedBERT vs. general BERT on named entity recognition, F1-score (%) on NCBI Disease, BC5CDR-Chem, BC5CDR-Disease, and BC2GM (source: Nature Communications, April 2025).
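The F1 scores in the NER comparison combine precision and recall. For NER datasets such as these, F1 is usually computed at the entity level: a predicted entity counts only if both its span and its type exactly match a gold entity. We assume that convention here. The sketch converts BIO tag sequences into spans and scores them. The function names and example tags are hypothetical.

```python
from __future__ import annotations


def bio_to_spans(tags: list[str]) -> list[tuple[int, int, str]]:
    """Turn BIO tags into (start, end_exclusive, type) entity spans."""
    spans = []
    start, label = 0, None
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes a final entity
        continues = tag.startswith("I-") and tag[2:] == label
        if not continues:
            if label is not None:
                spans.append((start, i, label))
                label = None
            if tag != "O":
                # A B- tag, or a stray I- tag, opens a new entity.
                start, label = i, tag[2:]
    return spans


def entity_f1(gold: list[tuple[int, int, str]], pred: list[tuple[int, int, str]]) -> float:
    """Exact-match entity F1: span boundaries and entity type must both agree."""
    gold_set, pred_set = set(gold), set(pred)
    true_pos = len(gold_set & pred_set)
    precision = true_pos / len(pred_set) if pred_set else 0.0
    recall = true_pos / len(gold_set) if gold_set else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = bio_to_spans(["B-Chemical", "O", "O", "B-Disease", "I-Disease", "O"])
pred = bio_to_spans(["B-Chemical", "O", "O", "B-Disease", "O", "O"])
print(gold)  # [(0, 1, 'Chemical'), (3, 5, 'Disease')]
print(pred)  # [(0, 1, 'Chemical'), (3, 4, 'Disease')]
print(round(entity_f1(gold, pred), 3))  # 0.5
```

The truncated Disease prediction earns no partial credit. It counts as both a false positive and a false negative, so precision and recall are each 0.5.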

Key Researchers Behind PubMedBERT

Nine Microsoft Research scientists authored the original PubMedBERT paper. According to the publication, Yu Gu, Robert Tinn, and Hao Cheng contributed equally as lead authors.

Lead Research Contributors

Yu Gu now serves as Principal Applied Scientist at Microsoft Research. Robert Tinn and Hao Cheng contributed engineering and algorithmic expertise during initial development. All three researchers continue to work on biomedical NLP projects spanning data analytics and healthcare AI applications.

Supporting Research Team

Michael Lucas, Naoto Usuyama, and Xiaodong Liu provided additional research contributions. Usuyama continues active involvement in Microsoft’s biomedical AI initiatives, co-authoring subsequent papers on clinical trial matching and medical image analysis.

Chart: PubMedBERT training corpus statistics.

FAQ

Is PubMedBERT free to use?

Yes. Microsoft released PubMedBERT as an open-source model. Researchers and organizations can download and deploy it without licensing fees through Hugging Face.

What is the difference between PubMedBERT and BiomedBERT?

They are the same model. Microsoft renamed PubMedBERT to MSR BiomedBERT in November 2023 to align with its biomedical AI portfolio branding.

How does PubMedBERT compare to GPT-4 for medical tasks?

Fine-tuned PubMedBERT outperforms GPT-4 zero-shot by approximately 15% on extraction tasks. GPT-4 performs better on reasoning-intensive question answering.

What hardware trained PubMedBERT?

Microsoft trained PubMedBERT on one NVIDIA DGX-2 machine with 16 V100 GPUs. Training completed in approximately five days.

Can companies use PubMedBERT commercially?

Yes. The open-source license permits commercial deployment. Healthcare analytics companies and pharmaceutical firms actively use PubMedBERT for research applications.